1. Introduction

Measures of lexical frequency have played an important role in research on morphological processing. For example, word frequency and base frequency effects on acoustic duration have been used to draw inferences about how complex words are stored in the mental lexicon (e.g., N. K. Caselli, M. K. Caselli, & Cohen-Goldberg, 2016). These frequency measures also appear to be widely accepted as important predictors of acoustic duration, as a number of studies have shown higher frequency to be associated with more phonetic reduction (see, e.g., A. Bell et al., 2003; J. L. Bybee, 2000; Gahl, 2008; Jurafsky, Bell, Gregory, & Raymond, 2001; Jurafsky, 2003; Losiewicz, 1995; Pluymaekers, Ernestus, & Baayen, 2005a, 2005b). In accordance with the idea that “[w]hat you do often, you do better and faster” (Divjak, 2019, p. 1), more frequent words are produced more quickly and are thus more likely to be shortened.1

However, a third frequency measure has been proposed that has so far produced rather mixed results: the relative frequency of a derivative and its base word (Hay, 2001, 2003). Relative frequency is assumed to tap into morphological segmentability. If speakers encounter a base word often compared to its derivative, the derivative is more easily decomposed into its morphological constituents and is more likely to be processed compositionally. Words that are more easily segmentable, i.e., words with a stronger morphological boundary, are expected to be less likely to be phonetically reduced (Hay, 2001, 2003). Yet relative frequency effects on acoustic duration have proven notoriously inconsistent. On the one hand, relative frequency effects on duration emerge in some studies under some conditions for some affixes (Hay, 2003, 2007; Plag & Ben Hedia, 2018); on the other hand, research has also yielded many null effects (Ben Hedia & Plag, 2017; Plag & Ben Hedia, 2018; Pluymaekers et al., 2005b; Schuppler, van Dommelen, Koreman, & Ernestus, 2012; Zimmerer, Scharinger, & Reetz, 2014; see also Hanique & Ernestus, 2012 for a critical review of the findings of earlier studies).

One potential reason for this seemingly inexplicable inconsistency is the prosodic structure of complex words. Plag and Ben Hedia (2018) speculate that it might be more difficult for a relative frequency effect to emerge with affixes that are phonologically integrated into the prosodic word of the derivative than with affixes that form independent prosodic words (Raffelsiefen, 1999, 2007). This is because pre-boundary lengthening caused by a stronger word-internal prosodic boundary might counteract potential reduction effects. While some previous evidence suggests that “morphological effects on fine phonetic detail cannot always be accounted for by prosodic structure” (Pluymaekers, Ernestus, Baayen, & Booij, 2010, p. 523; also see Plag, Homann, & Kunter, 2017, p. 210), there is also evidence that prosodic word boundaries in complex words do affect duration. For example, Sproat and Fujimura (1993) show that English /l/ is longer and more likely to be realized as [ɫ] before compound-internal boundaries, which are comparatively strong, than before affix boundaries, which are comparatively weak, or within simplex words. Auer (2002) shows that final devoicing of /b/ in German suffixed words is characterized by a plosive release that is longer in derivatives with a word-internal prosodic word boundary than in derivatives without one. Sugahara and Turk (2009) find that segments in base-final rhymes of English affixed words are lengthened when they precede a stronger prosodic boundary. Bergmann (2018) demonstrates that segments straddling a boundary in infrequent German derivatives are lengthened when these derivatives contain prosodic word-forming suffixes rather than integrating suffixes. These findings suggest that the prosodic word structure of complex words affects their durational patterns and needs to be considered when investigating segmentability effects.

In addition to the suspicion that reduction effects due to segmentability might be counteracted by effects of prosodic word structure, two further problems with previous research need to be addressed. First, earlier studies often examined only a few affix categories, each in isolation. This makes it difficult to compare findings across affixes, especially since the studies often used different methodologies. Second, different studies investigated different domains of durational variation: some studies investigated the duration of the affix, while others looked at the deletion of individual segments. This makes a comparison of studies even more difficult.

The present study addresses these issues. In a broad empirical study, we test how prosodic word structure and relative frequency influence duration, and how the two factors might interact. To do so, we use three different speech corpora of English. For each corpus, we model the durations of words containing eight different affixes, looking at both affix durations and base durations. First, we show that, contrary to the hypothesis of Plag and Ben Hedia (2018), prosodic word structure is not a gatekeeper for relative frequency effects. Second, we demonstrate that even across a large number of affixes, relative frequency effects do not emerge consistently. Third, we show that prosodic structure not only fails to explain the absence of relative frequency effects but also often fails to explain durational differences as such.

The paper is structured as follows. Section 2 discusses the role of morphological segmentability, relative frequency, and prosodic structure in the duration of complex words, and develops the specific hypotheses tested in this study. Section 3 describes our methodology, and Section 4 presents our results. We close with a discussion of the implications of our findings.

2. Segmentability and relative frequency

Morphological segmentability2 refers to the degree to which speakers can decompose a complex word into its morphological constituents. Rather than categorizing words as either simplex (monomorphemic) or complex (multimorphemic), it is widely assumed that morphological complexity is gradient: words can be more or less complex, and morphological boundaries may be stronger or weaker. The strength of a morphological boundary in a given word can be gauged, for example, on the basis of semantic transparency, type of base, various frequency measures, and the degree of phonetic-phonological integration across that boundary.

Consider the verbs dislike and discard. Both words are considered morphologically complex, as we can identify the bases like and card, each prefixed with dis-. However, one of them seems more complex than the other: dislike appears to be more easily decomposable than discard. One reason for this is that dislike is semantically more transparent than discard. Its meaning ‘to not like’ is straightforwardly compositional, whereas the meaning of discard, ‘to cast aside’, is more opaque and idiosyncratic.

One way of operationalizing morphological segmentability is through lexical frequency (Hay, 2001, 2003). Derivatives that occur frequently compared to their base are assumed to be less segmentable than derivatives whose base is more frequent than the derived form. If the derivative is more frequent than its base, it is harder for speakers to decompose the word into its constituents because they encounter the derivative more often than its individual parts; the derivative will be perceived as more simplex. If, on the other hand, the base is more frequent than the derivative, speakers are more likely to recognize the base as a constituent, and to do so more quickly, and are therefore more likely to perceive the derivative as complex. The examples dislike and discard above have shown that morphological segmentability is closely related to semantic transparency. Hay (2001) demonstrated that, unlike the absolute frequency of the derivative, the relative frequency of base and derived form is a good predictor of semantic transparency. Relative frequency has therefore been considered an important operationalization of segmentability.
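To make this operationalization concrete, the sketch below computes relative frequency as base frequency divided by derivative frequency (the direction used for Hseg1 below), so that values above 1 mark derivatives whose base is more frequent, i.e., more segmentable derivatives. The log transform, the rejection of zero counts, the function names, and the counts in the usage example are all assumptions made for illustration; they are not taken from any of the cited studies.

```python
import math


def relative_frequency(base_freq: int, derivative_freq: int) -> float:
    """Base frequency divided by derivative frequency.

    Values > 1: the base is more frequent than the derivative (more segmentable).
    Values < 1: the derivative is more frequent than its base (less segmentable).
    """
    if base_freq <= 0 or derivative_freq <= 0:
        # Zero counts would need an explicit smoothing policy (e.g., add-one),
        # which this sketch does not assume.
        raise ValueError("frequencies must be positive")
    return base_freq / derivative_freq


def log_relative_frequency(base_freq: int, derivative_freq: int) -> float:
    """Natural log of relative frequency: positive values = more segmentable."""
    return math.log(relative_frequency(base_freq, derivative_freq))


if __name__ == "__main__":
    # Invented counts for two hypothetical derivatives (illustration only).
    examples = {"derivative_A": (5000, 50), "derivative_B": (20, 400)}
    for name, (base, deriv) in examples.items():
        print(name, relative_frequency(base, deriv),
              round(log_relative_frequency(base, deriv), 2))
```

On a log scale the two hypothetical derivatives fall on opposite sides of zero, which is one reason a log transform is a convenient (though not the only possible) choice for a gradient segmentability measure.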

However, semantic and frequency-based properties are not the only correlates of morphological segmentability. It has been proposed that semantic opacity, and hence morphological segmentability, correlates with phonological opacity. Hay (2001, 2003), in what is now known as the segmentability hypothesis, suggests that morphological segmentability plays a crucial role in phonetic reduction: more segmentable words are less easily reduced (also see M. J. Bell, Ben Hedia, & Plag, 2020; Ben Hedia, 2019; Ben Hedia & Plag, 2017; Bergmann, 2018). This hypothesis relies on the idea that in derivatives that are infrequent compared to their base, phonetic segments must be realized more fully to facilitate morpheme recognition during lexical access, since the derivative’s meaning needs to be computed from its constituents. The rationale is that in words with strong boundaries, it might be important to produce individual segments fully so that the listener can more easily recognize the constituents. In simplex words, in contrast, individual segments are less important for recognition. This idea draws on findings that non-morphemic phonemes are more likely to be deleted than morphemic ones (see Guy, 1980, 1991; Labov, 1989; MacKenzie & Tamminga, 2021). Thus, segments in words that are perceived as more simplex (and are therefore likely accessed as wholes) should be more vulnerable to reduction than segments in words that are more complex or decomposable, and hence produced as multimorphemic. In short, the more meaningful a unit, the less easily it can be reduced. Note, however, that this premise is not always supported by the data (see our discussion in Section 5).

The segmentability hypothesis thus relies on assumptions that belong to two classes of explanations for reduction. The first is what Jaeger and Buz (2017) call a communicative account, i.e., a listener-oriented explanation for reduction. The idea that speakers want to make constituent recognition easy for the listener fits here. The second is the production ease account, which is speaker-oriented and relies on processing and memory demands (cf. Jaeger & Buz, 2017). This account fits best with the idea that words are accessed in different ways, or with different degrees of difficulty, and that this affects acoustic reduction. Both assumptions of the segmentability hypothesis, however, presuppose that reduction arises online. This sets the segmentability hypothesis apart from the third class of explanations, the representational account, which argues that reduction is encoded offline in linguistic representations (Jaeger & Buz, 2017). It is at present unclear which of these classes of explanations comes closest to the truth, but several discussions suggest a complex interaction of the three dynamics (Arnold & Watson, 2015; Clopper & Turnbull, 2018; Jaeger & Buz, 2017).

Based on the rationale outlined above, we can hypothesize that the higher the morphological segmentability (i.e., the higher the relative frequency, calculated by dividing base frequency by derivative frequency), the less reduction is to be expected, i.e., the longer the duration of the word will be. Crucially, such protection against reduction is expected to occur in all domains, i.e., in both the affix and the base, since both constituents need to be recognized in more complex words. The affix and the base (and consequently the whole word) are thus both expected to vary in duration with relative frequency. The following hypothesis follows from these considerations:

- Hseg1: The higher the relative frequency of a derivative (i.e., the more segmentable a derivative is), the longer its affix duration and base duration will be.

2.1. Relative frequency effects on duration: Previous studies

While many studies have investigated the effects of other segmentability-related measures on various response variables, only a handful have focused specifically on the effect of relative frequency on duration. Taken together, they paint a very inconsistent picture.

Let us start with the studies that find, as predicted by Hseg1, a positive effect of relative frequency on duration (Hay, 2003, 2007; Plag & Ben Hedia, 2018; Zuraw, Lin, Yang, & Peperkamp, 2020). The flagship study on durational effects of segmentability is Hay (2003). Investigating the English suffix -ly, she finds relative frequency effects on segment deletion. In an experimental study, she had six undergraduate students read aloud -ly-suffixed words containing a base-final /t/ (e.g., swiftly) embedded in carrier sentences. Relative frequency was categorized into bins by arranging 20 words into four frequency-matched paradigms of five words each. She finds that base-final /t/ is more likely to be fully realized in highly segmentable words (like abstractly) than in less segmentable words (like exactly). In a follow-up study, Hay (2007) finds a relative frequency effect on affix duration for the English prefix un-. In a study of the ONZE corpus, she analyzes 359 affixed and 310 monomorphemic forms containing the string un- and finds that this string is more likely to be reduced in monomorphemic words than in more complex words. She also finds that un- is more reduced in words with a ‘legal’ phonotactic transition from the affix-final nasal to the base-initial onset (i.e., a transition that also occurs in monomorphemic words) than in words with an ‘illegal’ transition (i.e., one that never occurs in monomorphemic words). Phonotactic transition probabilities can be seen as another correlate of morphological segmentability and will be controlled for in our study.
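The binary notion of a ‘legal’ junctural transition described above can be illustrated with a small sketch: a transition across the morpheme boundary counts as legal if the same pair of adjacent segments also occurs inside some monomorphemic word. The toy lexicon, its rough transcriptions, and the function names are assumptions for illustration only; they are not Hay’s materials or procedure, and the verdicts hold relative to this toy lexicon, not to English as a whole.

```python
# Toy lexicon of monomorphemic words as tuples of segments
# (invented, rough transcriptions; not taken from any cited study).
TOY_MONOMORPHEMES = [
    ("ʌ", "n", "d", "ə"),             # under
    ("w", "ɪ", "n", "t", "ə"),        # winter
    ("k", "ʌ", "n", "t", "r", "i"),   # country
]


def attested_bigrams(monomorphemes):
    """Collect every pair of adjacent segments found inside the monomorphemic words."""
    bigrams = set()
    for segments in monomorphemes:
        bigrams.update(zip(segments, segments[1:]))
    return bigrams


def junctural_transition_is_legal(affix, base, monomorphemes):
    """True if the affix-final + base-initial pair also occurs inside a monomorpheme."""
    return (affix[-1], base[0]) in attested_bigrams(monomorphemes)


if __name__ == "__main__":
    un = ("ʌ", "n")
    bases = {"true": ("t", "r", "uː"), "wise": ("w", "aɪ", "z")}
    for word, segments in bases.items():
        legal = junctural_transition_is_legal(un, segments, TOY_MONOMORPHEMES)
        print(f"un-{word}:", "legal" if legal else "illegal")
```

A real study would use a full phonemically transcribed lexicon and, typically, graded transition probabilities rather than this all-or-nothing split.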

More recently, Plag and Ben Hedia (2018) find relative frequency effects for two of the four affixes they investigated. In a study of the Switchboard corpus, relative frequency affects the duration of un- and dis- in the expected direction: the more segmentable a word derived with these prefixes, the longer the prefix. Finally, Zuraw et al. (2020) find in a production experiment with 16 speakers of American English varieties that higher word frequency reduces aspiration, whereas higher base frequency promotes it. While some of their ways of calculating relative frequency yield no effects, they also observe that voice onset time becomes longer for infrequent words as their base frequency increases. Overall, they interpret the results as supporting the segmentability hypothesis.

Let us now move to the studies that find the opposite of what Hseg1 predicts, i.e., a negative effect of relative frequency on duration (Pluymaekers et al., 2005b; Schuppler et al., 2012). Pluymaekers et al. (2005b) investigated frequency effects for four Dutch affixes in the Corpus of Spoken Dutch, also including relative frequency in their models. For ge-, they observe an effect counter to what Hay (2001, 2003) predicts. In their data, less segmentable words are associated with longer durations for ge-. The authors hypothesize that this could be because speakers are more likely to place stress on the first syllable in words they perceive as monomorphemic, given that Dutch monomorphemic words are usually stressed on the first syllable.

Similarly, Schuppler et al. (2012) find a relative frequency effect in the opposite direction (i.e., contrary to the predictions of Hay, 2001, 2003) on the presence or absence of the suffix -t in Dutch. In a study of the ECSD corpus, they analyze 2110 tokens ending in the Dutch suffix -t and find relative frequency to be significantly correlated with the likelihood of [t] presence. However, contrary to the segmentability hypothesis, [t] is more likely to be deleted in words that are more segmentable. The authors suggest that this may be due to differences in both setup and language. First, they measured reduction on the affix rather than, as Hay did, on the base-final segment. Second, the uncertainty in choosing from the morphological paradigm was greater in their study because speakers had to choose between different suffixes. This supposedly gives the /t/ a greater information load and therefore a strengthened realization.

Finally, let us briefly review the studies that found null effects of relative frequency on duration (Ben Hedia & Plag, 2017; Plag & Ben Hedia, 2018; Pluymaekers et al., 2005b; Zimmerer et al., 2014; Zuraw et al., 2020). Apart from the one unexpected effect mentioned above, Pluymaekers et al. (2005b) largely fail to find relative frequency effects on duration: none of the affixes ont-, ver-, and -lijk yields a significant effect of relative frequency. Likewise, Zimmerer et al. (2014) find no relative frequency effect on /t/ deletion in German. They constructed a new corpus from recordings of ten native speakers of German who were instructed to produce the paradigms of specific verbs given a set of pronouns. In these data, relative frequency does not reach significance as a predictor of the deletion of morphemic /t/ in second- and third-person singular verb forms. Further, Ben Hedia and Plag (2017) fail to find a relative frequency effect for the English prefixes un- and in-. In a study of the Switchboard corpus, they do find that double and singleton nasal durations at affix boundaries are longer in words with un- than in words with negative in-, which in turn are longer than in words with locative in-. This mirrors a descending hierarchy of segmentability. However, relative frequency as a gradient segmentability measure for individual words does not reach significance in any of their regression models. Similarly, Plag and Ben Hedia (2018) observe no relative frequency effects on affix duration for in- and -ly.

Lastly, Zuraw et al. (2020) fail to find a categorical relative frequency effect on the aspiration of base-initial /t/ after the English prefixes dis- and mis-. They coded each word categorically for whether the word or its stem is more frequent, and this variable did not yield a significant effect on /t/ aspiration. This may be related to their operationalization of relative frequency: they binned it into two categories rather than four as in Hay (2003), and did not include it as a continuous variable. Moreover, an interaction of word frequency (operationalized as the number of movies a word appears in) and base frequency, which is another possible way of capturing relative frequency, does not reach significance in predicting the likelihood of /t/ aspiration.
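The information lost by a two-way coding can be shown in a few lines. The sketch contrasts a binary code of the kind described for Zuraw et al. (2020), i.e., whether the base or the derived word is more frequent, with a continuous log ratio. The counts and function names are invented for illustration, and the treatment of ties (assigned to the ‘derivative’ category) is an assumption of this sketch, not a detail reported for the original study.

```python
import math


def binary_code(base_freq: int, derivative_freq: int) -> str:
    """Two-way coding: is the base more frequent? (ties -> 'derivative', by assumption)"""
    return "base" if base_freq > derivative_freq else "derivative"


def log_ratio(base_freq: int, derivative_freq: int) -> float:
    """Continuous coding: natural log of base frequency over derivative frequency."""
    return math.log(base_freq / derivative_freq)


if __name__ == "__main__":
    # Two invented items whose relative frequencies differ by a factor of about 670
    # (1.5 vs. 1000) but which receive the same binary code.
    for base, deriv in [(300, 200), (100_000, 100)]:
        print(binary_code(base, deriv), round(log_ratio(base, deriv), 2))
```

Under the binary coding both items are indistinguishable, whereas the continuous measure preserves the large difference in segmentability between them.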

In sum, it is apparent that relative frequency does not always affect acoustic duration. The question remains why relative frequency produces such an incoherent picture, and to what extent it is a reliable measure as a morphological predictor of acoustic duration. This gap has been noted before, and there have been calls for research investigating when the phonetics of a derivative are influenced by its relative frequency and when they are not (Arndt-Lappe & Ernestus, 2020, p. 199). One idea is that the difference in the emergence of relative frequency effects between affixes might arise because the affixes differ in their prosodic structure. It has been speculated (Plag & Ben Hedia, 2018) that prosodic word integration might inhibit segmentability effects. This will be discussed in the following section.

2.2. The prosodic structure of complex words

Because they found relative frequency effects for un- and dis-, but not for in- and -ly, Plag and Ben Hedia (2018, p. 112) hypothesize that these mixed results may arise from prosodic integration. The affixes un- and dis- are considered to form prosodic words of their own and thus not to integrate into the prosodic word of their hosts, whereas the prosodic word status of in- and -ly is less clear. The question arises whether this difference in prosodic word status can explain why affix categories differ in whether segmentability effects emerge.

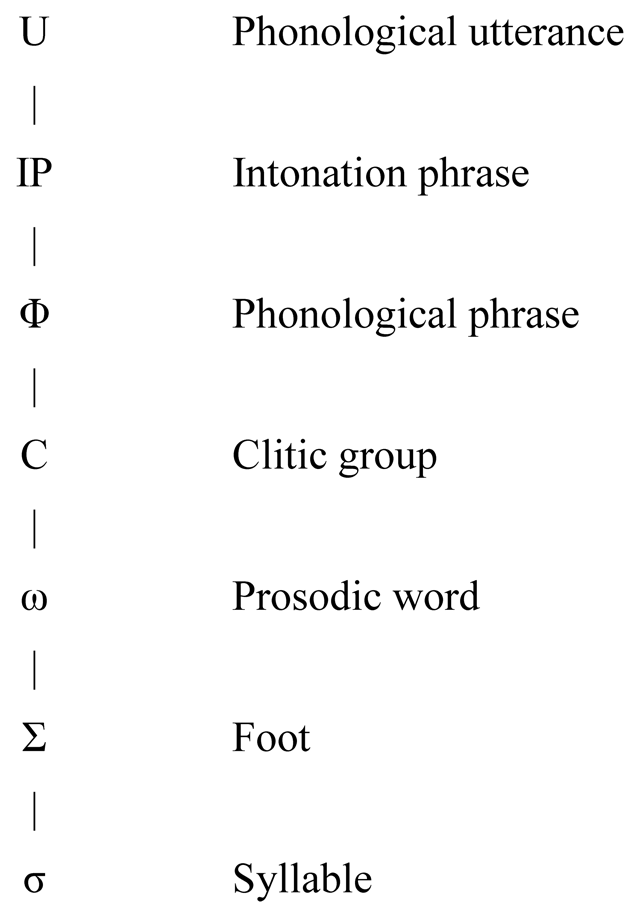

In the prosodic hierarchy (Figure 1), the prosodic word (represented by lowercase omega ω) is considered to be a constituent above the foot level and below the phonological phrase level (Hall, 1999; Hildebrandt, 2015; Nespor & Vogel, 2007). There are several diagnostics that can be used to justify postulating such a constituent, such as violations of syllabic constraints (onset or coda conditions, violation of the law of initials, ambisyllabicity), stress, vowel changes, or semantic criteria (Hildebrandt, 2015; Raffelsiefen, 1999, 2007). The prosodic word is assumed to be the lowest constituent in the hierarchy that reflects morphological information, making it a key player in the transfer of morphological structure to phonetic output.

The prosodic hierarchy, adapted from Hall (1999, p. 9) and Nespor and Vogel (2007, p. 11).

Within the hierarchy, researchers assume a number of constraints, one of which is especially important for the relationship between affix structure and prosodic word structure. Raffelsiefen (2007) suggests that the boundaries of grammatical categories must align with prosodic word boundaries, a constraint referred to as GP-ALIGNMENT. Crucially for our purposes, it follows from this constraint that wherever there is a prosodic word boundary, there must also be a morphological boundary at exactly the same place. This constraint, however, can be dominated by other constraints (e.g., a constraint requiring syllables to have onsets). Consequently, the absence of a prosodic boundary does not necessarily imply the absence of a morphological boundary (Raffelsiefen, 2007, p. 212). In other words, a morpheme cannot include multiple prosodic words, but a prosodic word can include multiple morphemes. This means that our data may contain cases where an affix forms a prosodic word on its own as well as cases where the affix is merely part of a larger prosodic word.

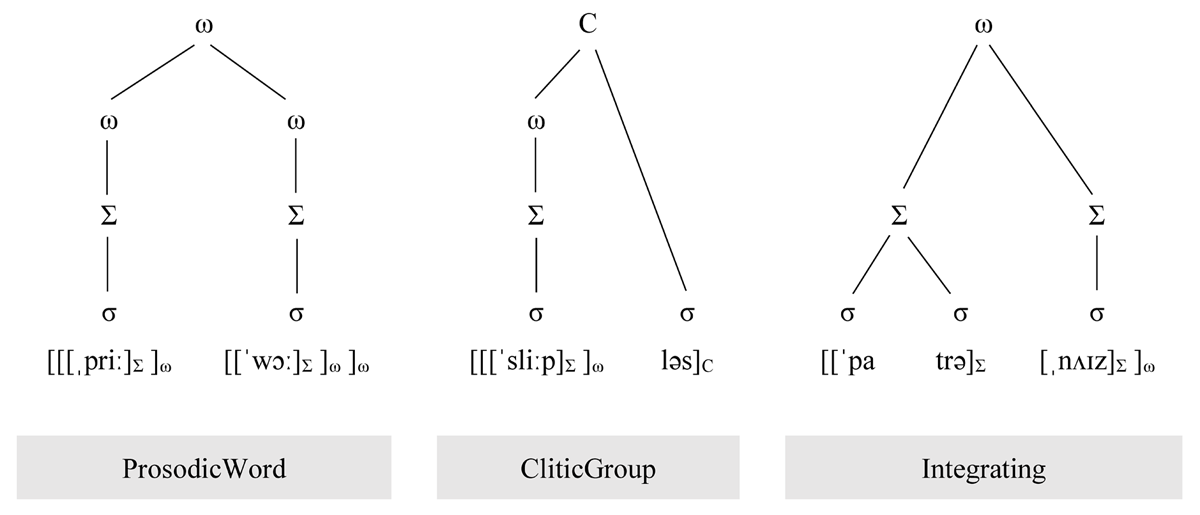

From these constraints, Raffelsiefen (1999) deduces three possible scenarios that describe how an affix can integrate into prosodic word structure. The three structure trees with example words are given in Figure 2. First, an affix can form a prosodic word on its own (the ProsodicWord case). This is, for example, the case with the affix pre-. In the word pre-war, both syllables are stressed and heavy, hence both form a foot as well as a prosodic word each. Second, an affix might form a clitic group with the prosodic word of the stem (the CliticGroup case). An example of a clitic group affix is -less. In the word sleepless, the suffix is unstressed, hence it cannot form a prosodic word. Yet, the suffix does not fully integrate into the host prosodic word either, as the base-final plosive is not resyllabified into the affix syllable, despite /pl/ being a possible onset in English. Third, there are affixes that fully integrate into the prosodic word of their host (the Integrating case). This is the case with -ize. In a word like patronize, the base-final coda nasal resyllabifies into the onset of the derivative-final syllable. The whole derivative is therefore considered to be one prosodic word. In sum, we can see that from left to right, there is an increasing degree of prosodic word integration. Standalone prosodic word affixes are least integrated, while integrating affixes are most integrated. The question remains which potential consequences these structural differences have for acoustic duration.

Three types of prosodic word integration for affixes (following Raffelsiefen, 1999), given as trees as well as in bracket notation. ProsodicWord: In this category, affixes form prosodic words on their own. CliticGroup: Here, affixes form a clitic group with their base. Integrating: In this case, affixes integrate into the prosodic word of their host base. Note that we use C to refer to the phonological domain of the clitic group, following the standard notation from the literature, whereas we use CliticGroup to refer to the category of derivatives in which such clitic group structure can be found.

One of the most important and well-known effects of (prosodic) constituent structure on acoustic duration is pre-boundary lengthening. Before a structural boundary, such as a phrase boundary or word boundary, phonetic material is lengthened, i.e., increases in duration. This phenomenon has long been described in many studies (see, e.g., Beckman & Pierrehumbert, 1986; Campbell, 1990; Edwards & Beckman, 1988; Klatt, 1975; Vaissière, 1983; Wightman, Shattuck-Hufnagel, Ostendorf, & Price, 1992) and is now considered a robust and reliable predictor of duration. It has also been shown specifically for complex words with different prosodic structures (Auer, 2002; Bergmann, 2018; Sproat & Fujimura, 1993; Sugahara & Turk, 2009). In the literature, the amount of lengthening is said to depend on two factors: the strength of the boundary and the position of the boundary type in the prosodic hierarchy.

First, how much a string of segments is lengthened depends on the number, or strength, of the boundaries. The more constituents begin or end between two segments, the stronger the boundary, and the stronger the pre-boundary lengthening. In other words, boundaries add up to form stronger boundaries. For example, the internal foot boundary in pre-war is stronger than that in sleepless (Figure 2): pre-war [[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω features a double foot boundary (one foot ends after the affix, providing a closing boundary, and a second foot begins, providing an opening boundary), whereas sleepless [[[ˈsliːp]Σ]ωləs]C features only a closing foot boundary after the base and no second foot. How strong a given boundary is, and consequently how much lengthening occurs before it, thus depends on the number of boundaries that coincide at that point.
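The boundary-counting logic above can be made explicit with a small parser for the bracket notation used here. The sketch assumes, as in the examples in the text, that labels appear only on closing brackets (Σ = foot, ω = prosodic word, C = clitic group) and that each string is well-formed; for every gap between adjacent segment strings it counts how many boundaries of each type begin or end there. The function names are hypothetical, and the sketch only counts boundaries; it makes no claim about how much lengthening a given count produces.

```python
from collections import Counter

# Labels written after closing brackets in the bracket notation of the text.
LABELS = {"Σ": "foot", "ω": "pword", "C": "clitic_group"}


def tokenize(bracketed: str):
    """Split bracket notation into ['open'|'close', label] and ['seg', text] tokens.

    Labels are written only on closing brackets, so each opening bracket
    receives its label once its matching closing bracket is reached.
    """
    tokens, stack, i = [], [], 0
    while i < len(bracketed):
        ch = bracketed[i]
        if ch == "[":
            stack.append(len(tokens))
            tokens.append(["open", None])
            i += 1
        elif ch == "]":
            label = LABELS[bracketed[i + 1]]
            tokens[stack.pop()][1] = label  # label the matching opening bracket
            tokens.append(["close", label])
            i += 2
        else:
            j = i
            while j < len(bracketed) and bracketed[j] not in "[]":
                j += 1
            tokens.append(["seg", bracketed[i:j]])
            i = j
    return tokens


def internal_boundaries(bracketed: str):
    """Count boundaries by type in each gap between two adjacent segment strings."""
    gaps, current = [], None
    for kind, value in tokenize(bracketed):
        if kind == "seg":
            if current is not None:  # close off the gap before this segment string
                gaps.append(current)
            current = Counter()
        elif current is not None:    # skip brackets before the first segment string
            current[value] += 1
    return gaps                      # brackets after the last segment are word-final


if __name__ == "__main__":
    for word in ["[[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω", "[[[ˈsliːp]Σ]ωləs]C", "[[ˈpatrə]Σ[ˌnʌɪz]Σ]ω"]:
        print(word, [dict(gap) for gap in internal_boundaries(word)])
```

For pre-war, the internal juncture collects two foot and two prosodic word boundaries, against one closing foot and one closing prosodic word boundary for sleepless and two foot boundaries for patronize, mirroring the contrasts drawn in the text.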

Second, the amount of lengthening depends on the position in the prosodic hierarchy of the constituent providing the boundary. Higher-level boundaries should be associated with stronger lengthening effects than lower-level boundaries. For example, segments before a prosodic word boundary are expected to be more lengthened than segments before a foot boundary, but less lengthened than segments before a clitic group boundary. If two boundaries belong to the same level of the prosodic hierarchy (e.g., a prosodic word contained in another prosodic word), we would still expect the boundary that is higher in the tree to have a stronger lengthening effect than the lower boundary contained within it. Based on these two factors governing prosodically induced lengthening, we can formulate predictions about how duration should vary between affixes of different prosodic word categories, as well as predictions about how prosodic word structure could interact with segmentability.
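The text names two factors but does not say how they trade off against each other. One hypothetical way of combining them, sketched below, compares clusters of coinciding boundaries by their highest level first and uses the number of boundaries only as a tie-breaker. This lexicographic rule, the level names, and the function names are assumptions of the sketch; only the ordering foot < prosodic word < clitic group is taken from the text, and higher levels are omitted.

```python
# Prosodic levels named in the text, ordered from lowest to highest.
# Higher levels (e.g., the phonological phrase) are omitted in this sketch.
LEVELS = ["foot", "pword", "clitic_group"]


def strength_key(boundaries):
    """Rank a non-empty cluster of coinciding boundaries.

    Hypothetical rule: compare the highest level present first and use the
    number of boundaries only as a tie-breaker.
    """
    highest = max(LEVELS.index(level) for level in boundaries)
    return (highest, len(boundaries))


def predicts_more_lengthening(a, b):
    """True if boundary cluster a is predicted to induce more lengthening than b."""
    return strength_key(a) > strength_key(b)


if __name__ == "__main__":
    sleepless_internal = ["foot", "pword"]   # ]Σ]ω after the base
    patronize_internal = ["foot", "foot"]    # ]Σ[ around the juncture
    print(predicts_more_lengthening(sleepless_internal, patronize_internal))
```

Under this rule the sleepless juncture outranks the patronize juncture despite an equal number of boundaries, which matches the reasoning given for Hpros4 below; a different weighting of the two factors could, of course, yield different predictions.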

Returning to the question of how the prosodic structure outlined above might interfere with morphological segmentability, we can hypothesize that segmentability effects on duration might be counteracted by a strong prosodic boundary: according to the segmentability hypothesis, barely segmentable words should be more reduced than highly segmentable words, but if a barely segmentable word has a stronger internal prosodic word boundary, the preceding domain might be protected against reduction. In other words, pre-boundary lengthening introduced by prosody might cancel out reduction that low segmentability would otherwise allow. We can complement our segmentability hypothesis (repeated here for convenience) with this idea as follows:

- Hseg1: The higher the relative frequency of a derivative (i.e., the more segmentable a derivative is), the longer its affix duration and base duration will be.

- Hseg2: The more prosodically integrated an affix is (i.e., the weaker its prosodic boundary), the less likely it is that higher relative frequency protects the derivative against reduction.

Second, prosodic word structure should also affect duration in general, independently of segmentability. It is important to consider carefully which domains should be more or less lengthened in each prosodic affix category, since these categories largely coincide with prefix or suffix status. For example, many English prefixes are considered to form prosodic words, whereas English suffixes never form prosodic words of their own (Raffelsiefen, 1999). Since pre-boundary lengthening affects only the domain preceding the boundary in question, the prosodic category we look at determines which domain (base or affix) we expect to be affected, depending on whether the affix is a prefix or a suffix.3 We can formulate the following predictions for the contrasts between categories, comparing either the affix and base domains within a prosodic category or the prosodic categories within a domain:

Comparing bases and affixes

- HPW: ProsodicWord bases should be more lengthened than ProsodicWord affixes. This is because ProsodicWord affixes are prefixes, so the base will be subject to word-final lengthening, with the second base-final pword boundary in a word like [[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω ranking higher than the two internal pword boundaries.

- HINT: Integrating affixes should be more lengthened than Integrating bases. Integrating affixes are mostly suffixes, so the suffix will be most strongly subject to word-final lengthening, with the final pword boundary outranking the internal foot boundaries, as illustrated in [[ˈpatrə]Σ[ˌnʌɪz]Σ]ω.

- HCG: CliticGroup affixes should be more lengthened than CliticGroup bases. CliticGroup affixes are generally suffixes and, unlike the bases, are not followed by a foot boundary or a prosodic word boundary, as seen in [[[ˈsliːp]Σ]ωləs]C. However, the word-final C boundary ranks higher than the internal pword and foot boundaries, so word-final lengthening should dominate.

Comparing prosodic categories

- Hpros1: ProsodicWord bases should be more lengthened than Integrating bases. This is because ProsodicWord bases end with one foot boundary and two pword boundaries and occur word-finally ([[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω), whereas Integrating bases end with just two foot boundaries and mostly occur word-internally ([[ˈpatrə]Σ[ˌnʌɪz]Σ]ω).

- Hpros2: Integrating affixes (generally suffixes) should be more lengthened than ProsodicWord affixes (prefixes) due to word-final lengthening. The ProsodicWord prefix has two more boundaries within the derivative itself (compare [[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω and [[ˈpatrə]Σ[ˌnʌɪz]Σ]ω), but since the Integrating affix occurs word-finally, it will be followed by at least as many boundaries, potentially stronger ones, contributed by whatever word or phrase comes next.

- Hpros3: CliticGroup affixes should be more lengthened than Integrating affixes. This is because the word-final C boundary of CliticGroup affixes, as seen in [[[ˈsliːp]Σ]ωləs]C, outranks the pword and foot boundaries of Integrating affixes, as seen in [[ˈpatrə]Σ[ˌnʌɪz]Σ]ω.

- Hpros4: CliticGroup bases should be more lengthened than Integrating bases. The CliticGroup-internal morphological boundary coincides with only a single foot boundary in words like [[[ˈsliːp]Σ]ωləs]C rather than with a double foot boundary as in Integrating bases such as [[ˈpatrə]Σ[ˌnʌɪz]Σ]ω; however, CliticGroup bases feature a higher-ranking internal pword boundary, which Integrating bases lack.

- Hpros5: CliticGroup affixes (generally suffixes) should be more lengthened than ProsodicWord affixes (prefixes). CliticGroup affixes lack the foot and pword boundaries of ProsodicWord affixes (compare [[[ˈsliːp]Σ]ωləs]C and [[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω), but because they are mostly suffixes, they are subject to lengthening before the higher-ranking word-final C boundary, which ProsodicWord prefixes are not.

- Hpros6: ProsodicWord bases should be more lengthened than CliticGroup bases, due to word-final lengthening and because they feature an additional pword boundary (compare [[[ˌpriː]Σ]ω[[ˈwɔː]Σ]ω]ω and [[[ˈsliːp]Σ]ωləs]C).

We can summarize these predictions as follows (Table 1). We use the > sign to mean ‘should be more lengthened than’:

Summary of predictions for prosodic category effects on duration. The > sign between two categories means that the left-hand element is expected to be more lengthened than the right-hand element.

Comparing bases and affixes

| Integrating | CliticGroup | ProsodicWord |
|---|---|---|
| affix > base | affix > base | base > affix |

Comparing prosodic categories

| Bases | Affixes |
|---|---|
| ProsodicWord > CliticGroup > Integrating | CliticGroup > Integrating > ProsodicWord |
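The orderings in the lower part of the table follow from the six pairwise hypotheses Hpros1 to Hpros6. As a minimal illustrative sketch (the function name `rank` and the data layout are ours, not part of the study's scripts), the pairwise predictions can be encoded and each category ranked by how many comparisons it is predicted to win; the same check confirms that the hypotheses are transitive.

```python
# Pairwise predictions as (more lengthened, less lengthened).
BASE_PREDICTIONS = [
    ("ProsodicWord", "Integrating"),  # Hpros1
    ("CliticGroup", "Integrating"),   # Hpros4
    ("ProsodicWord", "CliticGroup"),  # Hpros6
]
AFFIX_PREDICTIONS = [
    ("Integrating", "ProsodicWord"),  # Hpros2
    ("CliticGroup", "Integrating"),   # Hpros3
    ("CliticGroup", "ProsodicWord"),  # Hpros5
]

def rank(pairs):
    """Order categories by the number of pairwise comparisons they 'win'.

    With every pair of categories compared exactly once and no cycles, the
    win counts are 0, 1, ..., n-1, so sorting by them gives the full ranking.
    """
    wins = {}
    for winner, loser in pairs:
        wins[winner] = wins.get(winner, 0) + 1
        wins.setdefault(loser, 0)
    if sorted(wins.values()) != list(range(len(wins))):
        raise ValueError("pairwise predictions are not transitive")
    ordered = sorted(wins, key=wins.get, reverse=True)
    return " > ".join(ordered)

print(rank(BASE_PREDICTIONS))   # ProsodicWord > CliticGroup > Integrating
print(rank(AFFIX_PREDICTIONS))  # CliticGroup > Integrating > ProsodicWord
```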

It has thus become clear that an empirical study is needed that examines segmentability and prosodic word effects on duration simultaneously. Such a study should also cover more affixes at the same time, so that we can better compare when effects do and do not emerge under the same conditions. We need to assess whether relative frequency is a useful predictor of duration, and under which prosodic circumstances it is or is not. The aim of the present study is to address this gap by testing the hypotheses outlined above in a larger-scale investigation than previous studies.

3. Methodology

We used three different corpora from two varieties of English, with each corpus study including eight affixes and measuring both affix durations and base durations as responses. There are at least three advantages to such a broad corpus approach. First, we are able to analyze a large amount of data without having to carry out large-scale experiments in different places. Second, the data consist of conversational speech produced outside of a lab context. Note that ‘conversational speech’ does not necessarily exclude lab speech: conversational speech can also be elicited, and many alleged drawbacks of experimental speech can be alleviated with careful design of the experimental setup and stimuli (Xu, 2010). However, there is hardly a better way to approximate everyday language use than spontaneous speech recorded outside of the experimental context, without any input from the researcher. It has been argued (e.g., B. V. Tucker & Ernestus, 2016) that research on speech production in particular needs to shift its focus to spontaneous speech to be able to draw valid conclusions about language processing. Third, much psycholinguistic research has been conducted on experimental data that were often not elicited in a way that guarantees spontaneity or casualness. Since it is generally important to use a diversity of methods in linguistic research (spontaneous and non-spontaneous speech both come with their advantages and disadvantages and might yield different results in the investigation of linguistic phenomena), this paper contributes to the diversification of psycholinguistic research by using a corpus approach.

The affixes investigated in the present study are listed in Table 2, together with their corpus study affiliation and their assumed prosodic category. The prosodic classification is based on Raffelsiefen (1999, 2007) and, whenever she does not explicitly mention the affix in question, on our application of her criteria for determining prosodic word status (i.e., syllable and foot structure, stress, resyllabification). Note that the selected affix categories are the same across the QuakeBox and ONZE corpora, but partly different for the Audio BNC. This is because after initial sampling from the Audio BNC, some of the affix categories did not yield enough tokens in the New Zealand corpora and were therefore replaced by others.

Investigated affixes in the three corpus studies, sorted by prosodic word category.

| Corpus | ProsodicWord | CliticGroup | Integrating |
|---|---|---|---|
| Audio BNC | dis-, in-, pre-, un- | -ness, -less | -ation, -ize |
| QuakeBox corpus | dis-, un-, re- | -ness, -ment | -ation, -able, -ity |
| ONZE corpus | dis-, un-, re- | -ness, -ment | -ation, -able, -ity |

Let us briefly look at some of the characteristics of these affixes (following Bauer, Lieber, & Plag, 2013; Plag, 2018). The ProsodicWord affixes are dis-, in-, pre-, un-, and re-. These affixes are all prefixes and have relatively clear semantics: dis-, in-, and un- have a clear negative meaning, re- is clearly iterative, and pre- can carry spatial or temporal meaning. They mostly take free morphemes as bases, and their derivatives tend to be rather transparent. They vary in their productivity, with pre- and un- considered very productive, and dis-, in-, and re- somewhat productive. They are generally assumed to be secondarily stressed, although there is some variability, with unstressed or main-stressed attestations. None of the prefixes is obligatorily subject to phonological alternation except in-, which assimilates to the following base-initial onset (irregular, illegal, impossible). None of the affixes causes resyllabification. It has to be noted that the prefixes dis- and in- are considered to be prosodic words only most of the time, i.e., in semantically transparent derivatives with words as bases. In opaque derivatives with bound roots, they are expected to integrate into the host prosodic word. However, this is irrelevant for our purposes, as we excluded all derivatives whose base is not a word in order to be able to properly calculate the base frequency, which is needed for relative frequency (see the dataset description in Section 3.1). All our dis- and in-prefixed words are therefore associated with the ProsodicWord structure.

The CliticGroup affixes are the suffixes -ness, -less, and -ment. Their semantics are generally a little less straightforward than those of the ProsodicWord affixes. While -less has a clear privative meaning, the meanings of -ness, which can denote abstract states, traits, or properties, and -ment, which can denote processes, actions, or results, are not always predictable. Like the ProsodicWord affixes, however, the CliticGroup affixes mostly take free bases and produce mostly transparent derivatives. Both -ness and -less are considered to be highly productive, whereas -ment used to be productive but has lost some of that capability in contemporary English varieties. The CliticGroup affixes are never stressed, not subject to phonological alternations, and not involved in resyllabification processes.

Lastly, the Integrating affixes are the suffixes -ation, -ize, -able and -ity. Compared to the other affixes, their semantics are rather multifaceted, covering a wide range of meanings each. For example, -ize can have locative, ornative, causative, resultative, inchoative, performative or similative meaning, -ation denotes events, states, locations, products, or means, -able is used to express capability, liability, or quality, and -ity denotes properties, states, qualities, sometimes with a ‘nuance of pomposity’ compared to -ness (Bauer et al., 2013, p. 248), and has various idiosyncratic derivatives, too. Their bases are mostly free morphemes, but all of them can attach to bound roots as well. They are mostly transparent, but overall less so compared to the affixes in the other two prosodic categories. The most productive of them by far is -able, whereas -ity is more restricted in its application. Integrating affixes can cause differences in the stress patterns of the derivatives, either being mostly secondarily (-ize) or primarily (-ation) stressed themselves, or capable of causing stress shifts and other phonological alternations within their bases (-able, -ation, and -ity). Resyllabification is commonplace among all of them, making them clear cases of the Integrating category. Having categorized the affixes in this way, let us now turn to the data we used to represent these categories.

3.1. Corpora and datasets

The three corpora we used are the Audio BNC (Coleman, Baghai-Ravary, Pybus, & Grau, 2012), the QuakeBox corpus (Walsh et al., 2013), and the ONZE corpus (Gordon, Maclagan, & Hay, 2007). Finding enough tokens for each affix category in spoken corpora is often a problem, but these three corpora are large enough to yield a sufficient number of observations per category.

The Audio BNC (Coleman et al., 2012) is the largest of the three corpora. It consists of both monologues and dialogues from different speech genres of a number of British English varieties and contains about 7.5 million words. We extracted the data via its web interface (Hoffmann & Arndt-Lappe, 2021; Hoffmann & Evert, 2018). The QuakeBox corpus (Walsh et al., 2013) consists mainly of monologues by inhabitants of Christchurch, New Zealand, who tell the interviewer about their experiences surrounding the 2010–2011 Canterbury earthquakes. The data were extracted via the LaBB-CAT interface (Fromont, 2003–2020; Fromont & Hay, 2012). At the time of extraction, the corpus contained about 800,000 tokens, or 86 hours of speech produced by 758 participants. Lastly, the ONZE corpus (Gordon et al., 2007) consists of three collections of recordings from different New Zealand English varieties: the historical Mobile Unit (with speakers born between 1851 and 1910), the later Intermediate Archive (with speakers born between 1890 and 1930), and the contemporary Canterbury Corpus (with speakers born between 1930 and 1984). As with the QuakeBox corpus, LaBB-CAT was used for data extraction. At the time of extraction, the three subcorpora together contained about 3.3 million tokens, or 392 hours of speech produced by 1,589 participants.

Wordlists, recordings, and textgrids were obtained by entering query strings into the corpora. These query strings searched for all word tokens that begin (for prefixes) or end (for suffixes) with the orthographic and phonological representation of each of the investigated affix categories. The wordlists were cleaned manually, excluding words that were monomorphemic (e.g., bless, pregnant, station), whose semantics or base were unclear (e.g., harness, predator, dissertation), or that were proper names or titles (e.g., Guinness, Stenness, Stromness). We only included derivatives with words as bases, not bound roots, meaning that the bases had to be attested as independent words with a sense related to that of the derivative. This is important because we need the frequency of the base word outside of the derivative in order to calculate relative frequency.4 The existence of such bases was determined by consulting pertinent dictionaries such as the Oxford English Dictionary Online (2020), as well as web attestations. Following Pluymaekers et al. (2005b), we included all word forms of a given type (e.g., discover, discovered, discovering).

All three corpora come phonetically aligned by automatic forced alignment. We extracted the recordings and textgrids twice: (1) including just the affixed word, and (2) including the affixed word plus one additional second of speech before and one additional second after it. The larger interval was used to calculate the covariate speech rate (see Section 3.2.2.) and is referred to in the following as utterance. In addition to the audio and text files, we extracted a spreadsheet of the tokens including corresponding meta-information and variables that had already been coded in the corpora.

Before starting the acoustic analysis, we manually inspected all items in order to exclude those unsuitable for further analysis. This was done by visually and acoustically inspecting the items in the speech analysis software Praat (Boersma & Weenink, 2001). Items were excluded if they fulfilled one or more of the following criteria: the textgrid was a duplicate or corrupted for technical reasons; the target word was not spoken or was inaudible due to background noise; the target word was interrupted by other acoustic material, laughing, or pauses; the target word was sung instead of spoken; or the target word was not properly segmented or was incorrectly aligned to the recording. In cases where the alignment did not seem satisfactory, we specifically examined three boundaries in order to decide whether to exclude the item: the word-initial boundary, the word-final boundary, and the boundary between base and affix. We considered an observation to be correctly aligned if none of these boundaries would have to be shifted to the left or right under application of the segmentation criteria in the pertinent phonetic literature (cf. Ladefoged & Johnson, 2011; Machač & Skarnitzl, 2009). Following Machač and Skarnitzl (2009, pp. 25–26), we considered the shape of the sound wave to be the most important cue, followed by the spectrogram, followed by listening. We also excluded derivatives with geminates (e.g., openness, unnecessary), since in these cases it is usually impossible to determine the acoustic boundary between base and affix (also see Ben Hedia, 2019).

We further reduced the datasets to only those word types and speakers for which there are more than three observations (see note on random effects for word and speaker in Section 3.3.). An overview of the resulting datasets is given in Table 3.

Overview of types and tokens sorted by corpus and affix category.

| Affix | Audio BNC tokens | Audio BNC types | QuakeBox tokens | QuakeBox types | ONZE tokens | ONZE types |
|---|---|---|---|---|---|---|
| -ness | 300 | 34 | 106 | 13 | 62 | 7 |
| -less | 144 | 21 | | | | |
| pre- | 40 | 10 | | | | |
| -ize | 365 | 27 | | | | |
| -ation | 3370 | 193 | 375 | 36 | 772 | 76 |
| dis- | 503 | 61 | 112 | 19 | 159 | 25 |
| un- | 594 | 73 | 203 | 26 | 224 | 23 |
| in- | 248 | 26 | | | | |
| -able | | | 150 | 19 | 207 | 19 |
| -ity | | | 532 | 21 | 323 | 24 |
| -ment | | | 287 | 24 | 541 | 35 |
| re- | | | 279 | 24 | 250 | 36 |
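As a quick consistency check, the counts in Table 3 can be summed per corpus. In this small Python sketch (the dictionary layout and function name are ours), each corpus contributes eight affixes, and the token totals come to 5,564 (Audio BNC), 2,044 (QuakeBox), and 2,538 (ONZE), the same figures reported after the speaker-based filtering in Section 3.3.

```python
# Table 3 as {affix: {corpus: (tokens, types)}}; corpora in which an affix
# was not sampled are simply absent from that affix's entry.
TABLE_3 = {
    "-ness":  {"Audio BNC": (300, 34), "QuakeBox": (106, 13), "ONZE": (62, 7)},
    "-less":  {"Audio BNC": (144, 21)},
    "pre-":   {"Audio BNC": (40, 10)},
    "-ize":   {"Audio BNC": (365, 27)},
    "-ation": {"Audio BNC": (3370, 193), "QuakeBox": (375, 36), "ONZE": (772, 76)},
    "dis-":   {"Audio BNC": (503, 61), "QuakeBox": (112, 19), "ONZE": (159, 25)},
    "un-":    {"Audio BNC": (594, 73), "QuakeBox": (203, 26), "ONZE": (224, 23)},
    "in-":    {"Audio BNC": (248, 26)},
    "-able":  {"QuakeBox": (150, 19), "ONZE": (207, 19)},
    "-ity":   {"QuakeBox": (532, 21), "ONZE": (323, 24)},
    "-ment":  {"QuakeBox": (287, 24), "ONZE": (541, 35)},
    "re-":    {"QuakeBox": (279, 24), "ONZE": (250, 36)},
}

def corpus_totals(table, corpus):
    """Return (number of affixes, total tokens, total types) for one corpus."""
    cells = [row[corpus] for row in table.values() if corpus in row]
    return len(cells), sum(tok for tok, _ in cells), sum(typ for _, typ in cells)

for corpus in ("Audio BNC", "QuakeBox", "ONZE"):
    print(corpus, corpus_totals(TABLE_3, corpus))
```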

3.2. Variables

Acoustic duration may be affected by a number of variables other than relative frequency and prosodic boundaries, and it is vital to control for as much of this variation as possible. To remove durational differences that arise simply from the number and type of segments in a given word, we not only include important covariates but also modify the response variable, using the difference from the expected item duration instead of the absolute duration. The following sections outline all of our variables in detail.

3.2.1. Response variable

Duration Difference

For each word token in our dataset, we calculated two durational measurements as the dependent variable, one for the affix and one for the base. This gives us two observations per word token. Each measurement was paired with the value affix or base in an additional variable (called type of morpheme) that coded whether the measurement was for the affix or for the base. This coding has the advantage that base durations and affix durations can be analyzed in a single statistical model.
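A minimal sketch of this coding in Python, assuming a hypothetical per-token record with separate base and affix durations (the `Token` class and its field names are illustrative, not those of the corpora or the authors' scripts): each token is expanded into two rows that differ only in type of morpheme and duration.

```python
from dataclasses import dataclass

@dataclass
class Token:
    word: str              # derivative type, e.g. "sleepless"
    speaker: str
    affix: str
    base_duration: float   # milliseconds
    affix_duration: float  # milliseconds

def to_long(tokens):
    """Expand each token into one 'base' row and one 'affix' row."""
    rows = []
    for t in tokens:
        for morpheme, duration in (("base", t.base_duration),
                                   ("affix", t.affix_duration)):
            rows.append({
                "word": t.word,
                "speaker": t.speaker,
                "affix": t.affix,
                "type_of_morpheme": morpheme,
                "duration": duration,
            })
    return rows

# Invented durations for a single token.
rows = to_long([Token("sleepless", "S01", "-less", 310.0, 220.0)])
for row in rows:
    print(row["type_of_morpheme"], row["duration"])
```

Because word, speaker, and affix are repeated on both rows, random intercepts for these grouping factors apply to base and affix observations alike.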

One important problem in analyzing spontaneous speech is that the phonological and segmental makeup of the words produced cannot be controlled. This problem is particularly pertinent for the present study, as our datasets feature different affixes whose derivatives vary in word length. To mitigate durational differences that arise simply because of the number and type of segments in each word, we refrained from using absolute observed duration as our response variable. Instead, we derived our duration measurement in the following way.

First, we measured the absolute acoustic duration of the word in milliseconds from the textgrid files with the help of scripts written in Python. Second, we calculated the mean duration of each segment in a large corpus (Walsh et al., 2013) and computed for each word the sum of the mean durations of its segments.5 This sum of the mean segment durations is also known as ‘baseline duration,’ a measure which has been successfully used as a covariate in other corpus-based studies (e.g., Caselli et al., 2016; Engemann & Plag, 2021; Gahl, Yao, & Johnson, 2012; Sóskuthy & Hay, 2017). It would now be possible to subtract this baseline duration from the observed duration, giving us a new variable that represents only the difference in duration to what is expected based on segmental makeup. However, we found that this difference is not constant across longer and shorter words. Instead, the longer the word is on average, the smaller the difference between the baseline duration and the observed duration. In a third and final step, we therefore fitted a simple linear regression model predicting observed duration as a function of baseline duration. The residuals of this model represent our response variable. Using this method, we factor in the non-constant relationship between baseline duration and observed duration. We named this response variable duration difference, as it encodes the difference between the observed duration and a duration that is expected on the basis of the segmental makeup.
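The three steps can be sketched as follows in Python. The segment labels, mean segment durations, and observed durations are invented for illustration, and the one-predictor least-squares fit is written out by hand rather than reproducing the authors' actual tooling; the residuals returned by `duration_difference` correspond to the response variable described above.

```python
def baseline_duration(segments, mean_durations):
    """Step 2: sum of the mean durations (ms) of the item's segments."""
    return sum(mean_durations[s] for s in segments)

def fit_simple_ols(x, y):
    """Least-squares intercept and slope for y = a + b * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def duration_difference(observed, baselines):
    """Step 3: residuals of observed duration regressed on baseline duration."""
    intercept, slope = fit_simple_ols(baselines, observed)
    return [obs - (intercept + slope * base)
            for obs, base in zip(observed, baselines)]

# Hypothetical mean segment durations in ms (illustrative values only).
MEANS = {"s": 100.0, "l": 60.0, "i": 80.0, "p": 70.0, "@": 50.0}
items = [["l", "@", "s"], ["s", "l", "i", "p"], ["p", "@"]]
observed = [190.0, 280.0, 130.0]  # step 1: measured durations in ms (invented)

baselines = [baseline_duration(seg, MEANS) for seg in items]
print(baselines)
print(duration_difference(observed, baselines))
```

Regressing rather than subtracting lets the slope absorb the non-constant relationship between baseline and observed duration: a slope below 1 means that longer items deviate less from their baseline than a plain difference would suggest.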

3.2.2. Predictor variables

Relative Frequency

We extracted the word frequency, i.e., the frequency of the derivative, and the base frequency, i.e., the frequency of the derivative’s base, from the Corpus of Contemporary American English (COCA, Davies, 2008), with the help of the corpus tool Coquery (Kunter, 2016).

Derived words are often rare words (see, e.g., Plag, Dalton-Puffer, & Baayen, 1999). For this reason, very large corpora are necessary to obtain frequency values for derived words. We chose COCA because it is much larger than the BNC or the New Zealand corpora themselves and therefore had a much higher chance of the words and their bases being sufficiently attested. We thus gave priority to covering a wider frequency range with better-attested items over matching the variety. Moreover, we consider it important to use the same frequencies consistently across the three studies. As corpora of different varieties have different sizes, it is difficult to compare frequency effects for sets of words of which many have very low frequencies or do not occur at all in one or two of the three corpora. Note that while COCA covers a different group of English varieties than the three audio corpora, these three corpora already differ in the varieties they cover. We therefore cannot avoid using frequency values from varieties which are not covered in at least one of the three corpora we analyze.

We calculated relative frequency by dividing base frequency by word frequency. Calculated this way, the higher the relative frequency, the more segmentable the item is assumed to be. Following standard procedures, we log-transformed relative frequency before it entered the models to reduce the skewness of its distribution. To be able to take the log for non-attested derivatives and bases (i.e., zero frequencies), we added a constant of 1 to both frequencies (cf. Baayen, 2008).
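A minimal sketch of this computation in Python, assuming that the constant is added to both raw frequencies before the ratio is taken and assuming the natural logarithm (the base of the log only rescales the predictor). The function name and the frequency counts in the usage lines are invented for illustration.

```python
import math

def log_relative_frequency(base_freq, word_freq):
    """log((base frequency + 1) / (derivative frequency + 1)).

    Higher values mean the base is much more frequent than the derivative,
    i.e. the derivative is assumed to be more segmentable.
    """
    return math.log((base_freq + 1) / (word_freq + 1))

print(log_relative_frequency(999, 9))   # base 100 times as frequent (after +1)
print(log_relative_frequency(0, 0))     # both unattested: ratio 1
print(log_relative_frequency(10, 1000)) # derivative more frequent than base
```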

Prosodic Category

This predictor variable was categorically coded for each affix by assigning the value PW (ProsodicWord), CG (CliticGroup), or INT (Integrating) to each observation containing the pertinent affix (see Section 2.2. and Raffelsiefen, 1999, 2007 for the classification criteria).

Type of Morpheme

As described in Section 3.2.1., there are two observations for each word token, one with the pertinent measurements of the affix, the other with the pertinent measurements of the base. The resulting variable, type of morpheme, can thus take two values, base or affix.

Speech Rate

How fast we speak naturally influences the duration of any linguistic unit we produce. Speech rate can be operationalized as the number of syllables a speaker produces in a given time interval (see, e.g., Plag et al., 2017; Pluymaekers et al., 2005b). We divided the number of syllables in the utterance by the duration of the utterance. As explained in Section 3.1., utterance was defined as the window containing the target word plus one second before and one second after it. We considered this window a good compromise between a maximally local speech rate, which includes only the adjacent segments but lets the target item itself exert a strong influence, and a maximally global speech rate, which includes larger stretches of speech but is vulnerable to changes in speech rate within that larger window. The utterance duration and the number of syllables in the utterance window were extracted from the textgrids with a Python script. Pauses (i.e., periods of silence where no syllables were annotated) were excluded from the total duration of the utterance and from the syllable count. We expect that the higher the speech rate (i.e., the more syllables are produced within the utterance window), the shorter the duration of base and affix should become.
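A minimal Python sketch of this measure, assuming the utterance window has already been cut from the textgrid and that syllables are available as labelled intervals on a tier. The `Interval` class and the set of pause labels are hypothetical; the actual pause convention depends on the aligner used by each corpus.

```python
import math
from dataclasses import dataclass

@dataclass
class Interval:
    start: float  # seconds
    end: float    # seconds
    label: str    # syllable label, or one of the pause labels below

# Hypothetical pause labels; the real convention depends on the aligner.
PAUSE_LABELS = {"", "sp", "sil"}

def speech_rate(window):
    """Syllables per second in the utterance window, ignoring pauses."""
    syllables = [iv for iv in window if iv.label not in PAUSE_LABELS]
    speaking_time = sum(iv.end - iv.start for iv in syllables)
    if speaking_time <= 0:
        return math.nan
    return len(syllables) / speaking_time

window = [
    Interval(0.00, 0.25, "sli:p"),
    Interval(0.25, 0.50, "l@s"),
    Interval(0.50, 0.75, "naIt"),
    Interval(0.75, 1.25, "sp"),   # pause: excluded from time and count
    Interval(1.25, 1.50, "Its"),
]
print(speech_rate(window))
```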

Number of Syllables

Researchers have observed a compression effect where segments are reduced if they are followed by more syllables (Lindblom, 1963; Nooteboom, 1972). If we modeled absolute durations, we would expect a higher number of syllables to lead to longer durations, because more syllables mean more phonetic material. However, we already control for the number of segments and thereby indirectly for the number of syllables in our response variable duration difference. We therefore expect number of syllables to be negatively correlated with duration due to the compression effect. The number of syllables in a given derivative was extracted with Python from the textgrids and converted into a categorical variable.

Bigram Frequency

Bigram frequency refers to the frequency of the target derivative occurring together with the word following it in COCA (Davies, 2008). It has been found that the degree of acoustic reduction can be influenced by the predictability conditioned on the following context (see, e.g., A. Bell, Brenier, Gregory, Girand, & Jurafsky, 2009; Pluymaekers et al., 2005a; Torreira & Ernestus, 2009). We thus expect that the higher the bigram frequency, the shorter the duration. Following standard procedures, we log-transformed bigram frequency before it entered the models to reduce the skewness of its distribution. We added a constant of 1 to the bigram frequencies in order to be able to take the log of non-attested bigrams (cf. Baayen, 2008).

Biphone Probability

The variable biphone probability is based on the biphone probabilities (the likelihood of two phonemes occurring together in English) in a given target derivative, summed over the word and normalized by its number of segments (see below). It has been found that segments are more likely to be reduced or deleted when they are highly probable given their context (see, e.g., Edwards, Beckman, & Munson, 2004; Munson, 2001; Turnbull, 2018; also see Hay, 2007 on effects of the legality of the phoneme transition on reduction). There are two possible lines of reasoning behind this. First, the more probable a biphone is, the easier it should be to access it, since with higher probability speakers have a stronger representation of phoneme templates occurring in succession. Note that this idea of reduction based on lexical access speed is debated in the literature (see, e.g., Arnold & Watson, 2015; Clopper & Turnbull, 2018).6 Second, the more probable a biphone is, the better our phonotactic motor skills of pronouncing the biphone will be, as our articulator muscles are better trained in pronouncing segment sequences that we pronounce often. Taken together, we expect biphone probability to be negatively correlated with duration: the more probable the biphones, the shorter the durations.

Biphone probabilities were calculated with the Phonotactic Probability Calculator (Vitevitch & Luce, 2004). For this, we first manually translated the target derivatives' transcriptions (the ASCII transcriptions of the Audio BNC and the transcription systems of the QuakeBox and ONZE corpora) into Klattese, the computer-readable transcription convention required by this calculator. We then summed the biphone probabilities for each word and divided the result by the word's number of segments to obtain its average biphone probability.
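The averaging step can be sketched in Python as follows. The probability table is invented, biphones missing from it are assumed to count as 0.0, and the sketch ignores position: the calculator's biphone probabilities are position-specific, which a plain lookup table does not capture. As described above, the divisor is the number of segments, not the number of biphones.

```python
def average_biphone_probability(segments, biphone_prob):
    """Sum of a word's biphone probabilities divided by its number of segments.

    `biphone_prob` maps (segment, next_segment) to a probability; pairs
    missing from the table count as 0.0 (an assumption of this sketch).
    """
    biphones = zip(segments, segments[1:])
    total = sum(biphone_prob.get(pair, 0.0) for pair in biphones)
    return total / len(segments)

# Invented probabilities for Klattese-like segments of "sleep".
PROBS = {("s", "l"): 0.006, ("l", "i"): 0.004, ("i", "p"): 0.002}
print(average_biphone_probability(["s", "l", "i", "p"], PROBS))
```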

3.3. Modeling procedure

When modeling acoustic duration, it is important to control for any durational variation that arises simply from idiosyncrasies of word types. We therefore fitted mixed-effects regression models to the data, using R (R Core Team, 2020), the lme4 package (Bates, Mächler, Bolker, & Walker, 2015), and lmerTest (Kuznetsova, Brockhoff, & Christensen, 2016). Mixed-effects regression can deal with unbalanced datasets and is particularly suited to investigating variables of interest while controlling for other potentially relevant variables (Baayen, 2008). We included random intercepts for word (i.e., the target derivative type), speaker, and affix.

To be able to include random intercepts for word and speaker, we included only word types and speakers for which we have at least three observations. For word, this reduced the data by 953 tokens for the Audio BNC, 377 tokens for the QuakeBox corpus, and 563 tokens for the ONZE corpus (leaving 6299, 2270, and 3111 tokens, respectively). For speaker, we lost a further 735 tokens for the Audio BNC, 226 tokens for the QuakeBox corpus, and 573 tokens for the ONZE corpus (leaving 5564, 2044, and 2538 tokens, respectively). For affix random intercepts, the token counts remain unchanged. For the final counts, refer again to Table 3.
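The two-stage filtering can be sketched in Python as below; the row format and function names are hypothetical, and `min_count=3` follows the "at least three observations" wording of this paragraph. Because each factor is filtered in a single pass, word first and speaker second, the speaker step can leave some word types with fewer observations than the threshold; a stricter variant would repeat both steps until nothing changes.

```python
from collections import Counter

def keep_frequent(rows, key, min_count):
    """Keep rows whose value for `key` occurs at least `min_count` times."""
    counts = Counter(row[key] for row in rows)
    return [row for row in rows if counts[row[key]] >= min_count]

def filter_for_random_effects(rows, min_count=3):
    """Filter by word type first, then by speaker, in a single pass each."""
    by_word = keep_frequent(rows, "word", min_count)
    return keep_frequent(by_word, "speaker", min_count)

# Invented tokens: "preview" has too few tokens, speakers S2 and S3 too few.
rows = (
    [{"word": "sleepless", "speaker": "S1"}] * 3
    + [{"word": "preview", "speaker": "S1"}]
    + [{"word": "kindness", "speaker": "S2"}] * 2
    + [{"word": "kindness", "speaker": "S3"}]
)
kept = filter_for_random_effects(rows)
print(len(rows), "->", len(kept))
```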

In the course of fitting the regression models, we trimmed the dataset by removing observations from the models whose residuals were more than 2.5 or 2.0 standard deviations away from the mean, which led to a satisfactory distribution of the residuals (cf., e.g., Baayen & Milin, 2010). This resulted in a loss of 114 tokens (2 % of the data) for the Audio BNC, 85 observations (4.2 % of the data) for the QuakeBox corpus, and 112 observations (4.4 % of the data) for the ONZE corpus.
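A minimal Python sketch of residual-based trimming, with the cutoff (2.5 or 2.0 standard deviations, as above) as a parameter. The residuals are invented; in practice they come from the fitted mixed model, which is then refitted on the retained observations (the refit is not shown here).

```python
import statistics

def trim_by_residuals(rows, residuals, sd_cutoff=2.5):
    """Drop rows whose residual lies more than `sd_cutoff` SDs from the mean residual."""
    center = statistics.fmean(residuals)
    spread = statistics.stdev(residuals)
    return [row for row, res in zip(rows, residuals)
            if abs(res - center) <= sd_cutoff * spread]

residuals = [-1.0, 1.0] * 10 + [20.0]   # invented; the last one is an outlier
rows = list(range(len(residuals)))       # stand-ins for observation indices
kept = trim_by_residuals(rows, residuals)
print(len(rows) - len(kept), "observation(s) removed")
```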

We used variance inflation factors to test for possible multicollinearity of the variables. Collinearity diagnostics are naturally inflated for models with interaction terms, which is why we created separate models with all our variables, but without the interactions, in order to check for collinearity problems. All VIFs were smaller than 2, i.e., far below the critical value of 10 (Chatterjee & Hadi, 2006).
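For reference, the variance inflation factor of predictor j is 1 / (1 − R²_j), where R²_j comes from regressing predictor j on all other predictors. The Python sketch below covers only the special case of a model with exactly two numeric predictors, where R²_j reduces to the squared Pearson correlation; it is an illustration of the measure, not the diagnostic used on the full models, and the predictor values are invented.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def vif_two_predictors(x1, x2):
    """VIF of either predictor in a model with exactly two predictors.

    In general VIF_j = 1 / (1 - R_j^2); with only two predictors, R_j^2 = r^2.
    """
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)

speech_rate = [4.1, 5.3, 3.8, 6.0, 4.9]       # invented values
bigram_freq = [2.3, 1.9, 1.2, 2.8, 0.9]
print(round(vif_two_predictors(speech_rate, bigram_freq), 2))
```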

Models were fitted with interactions between the variables of interest. The models included interactions between relative frequency and prosodic category, prosodic category and type of morpheme, and relative frequency and type of morpheme. The initial models also included all covariates. The models were then simplified according to the standard procedure of removing non-significant terms in a stepwise fashion. An interaction term or a covariate was eligible for elimination when it was non-significant at the .05 alpha level. Non-significant terms with the highest p-value were eliminated first, followed by terms with the next-highest p-value. This was repeated until only variables remained in the models of which at least one level reached significance at the .05 alpha level. To investigate the differences between different interaction levels we relevelled the dataset for each contrast, i.e., for each reference level constellation of base and affix, and of prosodic categories.
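The stepwise simplification can be sketched in Python as follows. `fit` is a placeholder for refitting the model and returning a p-value per term; the rule that a main effect stays eligible only once no remaining interaction contains it is an assumption of this sketch (it keeps the models hierarchical). The toy p-values are invented and, unlike a real refit, do not change between steps.

```python
def backward_eliminate(terms, fit, alpha=0.05):
    """Remove non-significant terms one at a time, highest p-value first.

    `fit(terms)` must refit the model and return {term: p_value}.
    Interactions are written "a:b"; a main effect is protected while any
    remaining interaction contains it.
    """
    terms = list(terms)
    while True:
        p_values = fit(terms)
        in_interaction = {part for t in terms if ":" in t for part in t.split(":")}
        candidates = [t for t in terms
                      if t not in in_interaction and p_values[t] >= alpha]
        if not candidates:
            return terms
        terms.remove(max(candidates, key=p_values.get))

# Toy p-values that stay fixed across refits (a real refit would change them).
TOY_P = {
    "relative_frequency": 0.56, "prosodic_category": 0.004,
    "type_of_morpheme": 0.0001, "speech_rate": 0.0001,
    "relative_frequency:prosodic_category": 0.80,
    "prosodic_category:type_of_morpheme": 0.0001,
    "relative_frequency:type_of_morpheme": 0.30,
}
final = backward_eliminate(list(TOY_P), lambda ts: {t: TOY_P[t] for t in ts})
print(final)
```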

4. Results

We report the p-values from the analysis of variance of the fixed effects in our three final models in Table 4. These p-values were calculated with the Anova() function (Type III) from the car package (Fox & Weisberg, 2011). Table 6 documents the final models in full, each for one choice of reference levels. The interested reader can access the models in the scripts at https://osf.io/xuh42/.

ANOVA p-values of fixed effects fitted to duration difference in the three corpora (Type III Wald chi-square tests). For empty cells, the predictors were non-significant and thus removed from the model during the fitting procedure.

| Audio BNC | QuakeBox | ONZE | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Chi2 | DF | Pr | Chi2 | DF | Pr | Chi2 | DF | Pr | |||||||

| Intercept | 12.7 | 1 | 0.000 | *** | 18.8 | 1 | 0.000 | *** | 4.0 | 1 | 0.045 | * | |||

| relative frequency | 0.3 | 1 | 0.564 | ||||||||||||

| type of morpheme | 236.7 | 1 | 0.000 | *** | 832.4 | 1 | 0.000 | *** | 434.9 | 1 | 0.000 | *** | |||

| prosodic category | 11.0 | 2 | 0.004 | ** | 51.4 | 2 | 0.000 | *** | 12.9 | 2 | 0.002 | ** | |||

| speech rate | 2467.2 | 1 | 0.000 | *** | 742.4 | 1 | 0.000 | *** | 929.3 | 1 | 0.000 | *** | |||

| bigram frequency | 6.1 | 1 | 0.014 | * | 4.7 | 1 | 0.031 | * | |||||||

| biphone probability | 23.2 | 1 | 0.000 | *** | 9.2 | 1 | 0.002 | ** | |||||||

| number of syllables | 56.5 | 4 | 0.000 | *** | 15.4 | 4 | 0.004 | ** | 6.6 | 4 | 0.160 | 7 | |||

| relative frequency:prosodic category | |||||||||||||||

| relative frequency:type of morpheme | 19.4 | 1 | 0.000 | *** | |||||||||||

| type of morpheme:prosodic category | 409.3 | 2 | 0.000 | *** | 1495.1 | 2 | 0.000 | *** | 862.6 | 2 | 0.000 | *** | |||

Before we discuss the effects that turned out significant and remained in the models, let us first examine the null results. Table 4 shows that the interaction between relative frequency and prosodic category was not significant in any of the corpora and was therefore removed from the models. The lack of an interaction between relative frequency and prosodic category means that we must already dismiss Hseg2 at this point: we do not find evidence that the effect of relative frequency depends on prosodic integration or vice versa.

One problem within the null hypothesis significance testing (NHST) framework is that, strictly speaking, non-significance cannot be interpreted as the absence of an effect. While we do not find support for an interaction between relative frequency and prosodic category, and thus no support for Hseg2, we cannot claim that the opposite is true, i.e., we cannot ‘confirm’ the null hypothesis. To be able to claim with more confidence that prosodic word integration does not affect whether relative frequency protects against reduction, it can be useful to quantify the evidence for the null. One way to do this is to use the BIC approximation to the Bayes Factor (Wagenmakers, 2007).8 With this method, we approximate the Bayes Factor from the difference between the BIC values of a full model for Hseg2 (including the interaction between relative frequency and prosodic category) and a model for its null hypothesis (i.e., a model that does not include this interaction). If we assume that it is a priori equally plausible that relative frequency and prosodic category do and do not interact, we can derive the models' posterior probabilities from the Bayes Factor and interpret the strength of the evidence with the classification scheme of Raftery (1995). Table 5 compares the BIC and Bayes Factor estimates for the three corpora.

BIC approximation to the Bayes Factor (BF) comparing the models for Hseg2 (a full model including the interaction between relative frequency and prosodic category) and H0 (the same model but without this interaction) for the three corpora.

| Corpus | BIC (Hseg2 model) | BIC (H0 model) | BIC difference | BF(Hseg2) | BF(H0) |
|---|---|---|---|---|---|
| Audio BNC | –29,087.43 | –29,123.82 | 36.39 | 1.25e–08 | 79,795,562 |
| QuakeBox | –9,923.55 | –9,954.07 | 30.52 | 2.36e–07 | 4,236,810 |
| ONZE | –13,146.06 | –13,179.25 | 33.19 | 6.20e–08 | 16,120,645 |

Table 6. Final models fitted to duration difference for all three corpora.

| Predictor | Audio BNC Estimate | SE | Sig. | QuakeBox Estimate | SE | Sig. | ONZE Estimate | SE | Sig. |
|---|---|---|---|---|---|---|---|---|---|
| Intercept | –0.0398 | 0.0112 | * | –0.0531 | 0.0122 | *** | –0.0247 | 0.0123 | |
| relative frequency | –0.0010 | 0.0017 | | | | | | | |
| type of morpheme affix | 0.0223 | 0.0014 | *** | 0.0804 | 0.0028 | *** | 0.0477 | 0.0023 | *** |
| prosodic category CG | 0.0196 | 0.0153 | | 0.0204 | 0.0160 | | 0.0050 | 0.0172 | |
| prosodic category PW | 0.0421 | 0.0129 | * | 0.0943 | 0.0138 | *** | 0.0488 | 0.0148 | * |
| speech rate | –0.0338 | 0.0007 | *** | –0.0336 | 0.0012 | *** | –0.0299 | 0.0010 | *** |
| bigram frequency | –0.0017 | 0.0007 | * | | | | –0.0021 | 0.0010 | * |
| biphone probability | –0.0063 | 0.0013 | *** | | | | –0.0085 | 0.0028 | ** |
| number of syllables 3 | 0.0183 | 0.0040 | *** | 0.0057 | 0.0073 | | 0.0022 | 0.0063 | |
| number of syllables 4 | 0.0223 | 0.0050 | *** | 0.0167 | 0.0085 | | 0.0090 | 0.0073 | |
| number of syllables 5 | 0.0362 | 0.0054 | *** | 0.0398 | 0.0109 | *** | 0.0200 | 0.0091 | * |
| number of syllables 6 | 0.0593 | 0.0112 | *** | 0.0024 | 0.0216 | | 0.0237 | 0.0181 | |
| relative frequency:type of morpheme affix | 0.0056 | 0.0013 | *** | | | | | | |
| type of morpheme affix:prosodic category CG | –0.0374 | 0.0047 | *** | –0.0929 | 0.0053 | *** | –0.0796 | 0.0040 | *** |
| type of morpheme affix:prosodic category PW | –0.0571 | 0.0028 | *** | –0.1790 | 0.0047 | *** | –0.1092 | 0.0041 | *** |
| N (two observations per token) | 10901 | | | 3918 | | | 4852 | | |
| N speaker | 595 | | | 264 | | | 312 | | |
| N word | 441 | | | 178 | | | 242 | | |
| N affix | 8 | | | 8 | | | 8 | | |
| R² fixed | 0.2274 | | | 0.3523 | | | 0.2932 | | |
| R² total | 0.3870 | | | 0.4821 | | | 0.4836 | | |

We can see that in all three cases, the null hypothesis model (i.e., the one that assumes that prosodic word integration does not influence relative frequency effects) is preferred, as indicated by its lower BIC, and that it has the higher Bayes Factor. Roughly speaking, the Bayes Factor tells us by what factor the data should shift our belief in one hypothesis relative to the other. According to Raftery (1995), if we start with the belief that the hypothesis and the null hypothesis are equally likely, a Bayes Factor of >150 constitutes ‘very strong’ evidence for the respective hypothesis (i.e., a posterior probability of >.99). We can thus say that for all three corpora, we find very strong evidence for the null: Prosodic word integration does not play any role in whether higher relative frequency can protect against reduction.
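The step from a Bayes Factor to a posterior probability is simple arithmetic: with prior odds of 1 (both hypotheses equally plausible a priori), the posterior odds equal the Bayes Factor, and the posterior probability is BF / (1 + BF). The sketch below pairs this with a labelling function based on the Raftery (1995) evidence categories (1–3 weak, 3–20 positive, 20–150 strong, >150 very strong). The function names are hypothetical, and how values lying exactly on a threshold are assigned is our own choice.

```python
def posterior_probability(bf: float, prior_odds: float = 1.0) -> float:
    """Posterior probability of a hypothesis from its Bayes Factor and prior odds."""
    posterior_odds = bf * prior_odds
    return posterior_odds / (1.0 + posterior_odds)

def raftery_label(bf: float) -> str:
    """Verbal evidence category using the Raftery (1995) thresholds."""
    if bf < 1:
        return "favors the competing hypothesis"
    if bf < 3:
        return "weak"
    if bf < 20:
        return "positive"
    if bf <= 150:
        return "strong"
    return "very strong"

# BF(H0) values from Table 5; equal prior odds are assumed, as in the text.
BF_H0 = {"Audio BNC": 79_795_562, "QuakeBox": 4_236_810, "ONZE": 16_120_645}
for corpus, bf in BF_H0.items():
    print(corpus, raftery_label(bf), f"{posterior_probability(bf):.8f}")
```

Even the threshold value of 150 already corresponds to a posterior probability of about .993, which is why a BF above 150 implies a posterior probability above .99.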

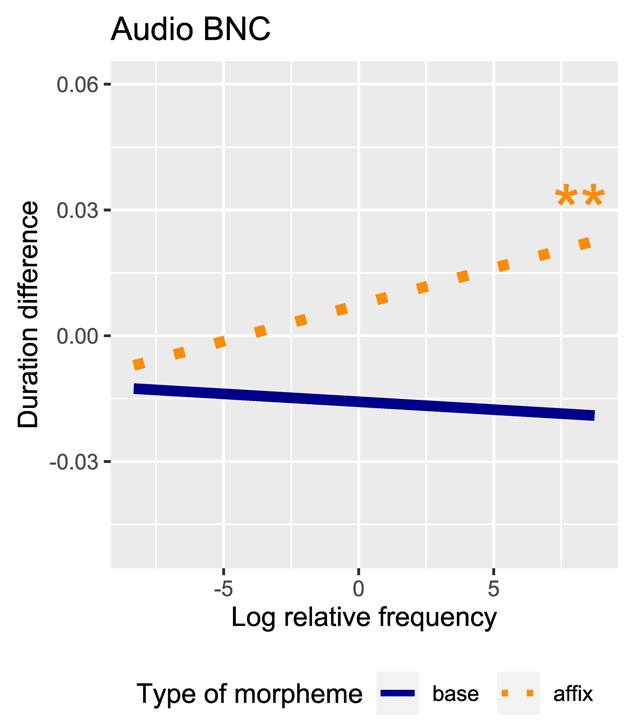

In addition, Table 4 shows that relative frequency was removed from all models due to non-significance, both as a main effect and in interactions, except from the Audio BNC model. In the Audio BNC, we find one significant interaction between relative frequency and type of morpheme: relative frequency is significant only for the affix. In all three cases (despite the ‘significant’ effect in the Audio BNC), though, the BIC approximation to the Bayes Factor for the models with versus without relative frequency again indicates very strong evidence for the null (BF > 8000, posterior probability >.99 for all three corpora). Hseg1 is thus not well supported either: relative frequency generally does not affect duration in our data.

Before turning to the significant variables of interest, let us briefly look at the covariates. As seen in Table 6, the covariates generally behave as expected in the three models: Speech rate is always very highly significant and negatively correlated with duration. Bigram frequency is significant in the Audio BNC and ONZE models and negatively correlated with duration. Biphone probability is likewise significant in the Audio BNC and ONZE models and also negatively correlated with duration. Finally, number of syllables is positively correlated with duration in all three models.

Let us now examine the variables of interest that remained in the models, starting with relative frequency. Relative frequency showed only one significant interaction with type of morpheme, namely in the Audio BNC. The interaction is illustrated in Figure 3. The x-axis represents the log of relative frequency; the y-axis represents the response variable duration difference. A blue, solid line represents the slope of relative frequency for the base, and an orange, dotted line represents the slope of relative frequency for the affix. Asterisks next to a slope indicate that it is significantly different from zero; only the slope of the dotted line (affix) is significant.

In accordance with hypothesis Hseg1, we expect higher relative frequency to lead to increased durations of both base and affix. In the Audio BNC, this is indeed the case for affixes, but not for bases: Affix durations in the Audio BNC increase with increasing relative frequency (i.e., increasing segmentability). In other words, in the one case where relative frequency interacts with type of morpheme, a relative frequency effect manifests itself only on the affix, not on the base. All the other cases yield a null result: Base durations in the Audio BNC, and affix and base durations in the two New Zealand corpora, do not increase with higher relative frequency. As mentioned above, our data thus do not convincingly support the segmentability hypothesis Hseg1, even though we find one effect in the expected direction. In addition, as discussed above, there were no interactions of relative frequency with prosodic category.
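The two slopes in Figure 3 can be read off the Audio BNC coefficients in Table 6. A minimal sketch, assuming treatment coding with base as the reference level of type of morpheme (as the coefficient label "type of morpheme affix" suggests) and relative frequency entered on a log scale: the base slope is the main effect of relative frequency, and the affix slope adds the interaction term. The constant and function names are hypothetical. Table 6 does not report the covariance between the two estimates, so the standard error, and hence the significance, of the affix slope cannot be recomputed from the table alone.

```python
# Audio BNC fixed effects from Table 6.
# Assumptions: treatment coding with "base" as the reference level of type of
# morpheme, and relative frequency entered on a log scale.
B_RELFREQ = -0.0010        # relative frequency (slope for bases)
B_RELFREQ_AFFIX = 0.0056   # relative frequency:type of morpheme affix

def simple_slope(morpheme: str) -> float:
    """Slope of log relative frequency on duration difference for one morpheme type."""
    if morpheme == "base":
        return B_RELFREQ
    if morpheme == "affix":
        return B_RELFREQ + B_RELFREQ_AFFIX
    raise ValueError(f"unknown morpheme type: {morpheme!r}")

for morpheme in ("base", "affix"):
    print(f"{morpheme}: {simple_slope(morpheme):+.4f}")
```

Under these assumptions the base slope is about −0.0010 and the affix slope about +0.0046, consistent with the flat solid line and the rising dotted line described for Figure 3.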

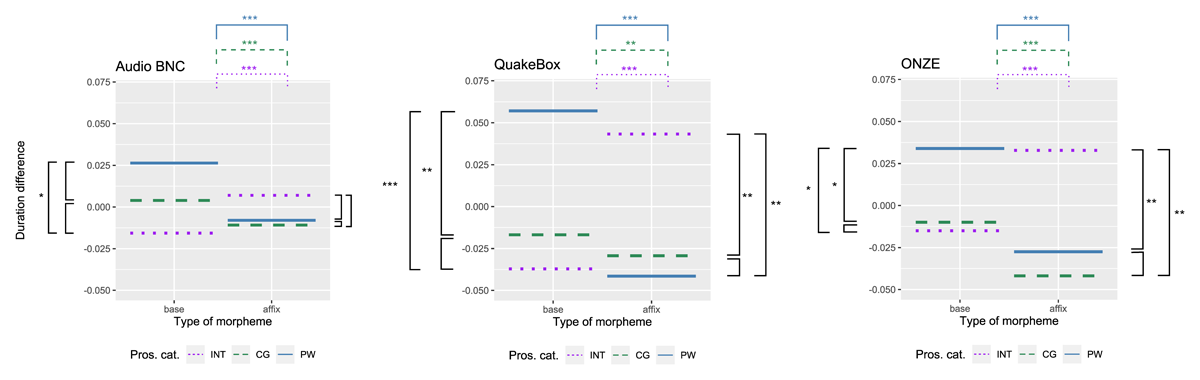

Next, we turn to the effects of prosodic category on duration. Figure 4 shows the interaction effects between prosodic category and type of morpheme on duration difference in the three corpora (Audio BNC, QuakeBox, and ONZE). The x-axis represents the type of morpheme, i.e., the two domains of durational measurement, base or affix. The y-axis represents duration difference. We use purple dotted lines to represent the Integrating (INT) condition; green dashed lines to represent the CliticGroup (CG) condition; and blue solid lines to represent the ProsodicWord (PW) condition. The significance of each contrast is indicated by asterisks on brackets connecting the respective conditions above and beside each plot. For the contrasts between prosodic categories (Integrating versus CliticGroup versus ProsodicWord) within a type of morpheme condition, significance brackets are given on the left-hand and right-hand side of each plot. For the contrasts between types of morpheme (base versus affix) within a prosodic condition, significance brackets are given above each plot with colored solid, dashed, or dotted lines, corresponding to the prosodic category within which base and affix durations are compared.

First, let us look at the durations of affixes and bases in the different prosodic conditions. Figure 4 shows that seven of the 18 bars fall below the zero line: This means that in most cases, both affix durations and base durations are shorter than expected from their average segment duration.9 However, if we compare affixes and bases, we see that there are differences in the amount they deviate from their average segment duration (significance brackets above the plots).

Starting with the Integrating category (INT, purple dotted lines), we can see that integrating affixes behave as expected in all three corpora: They are consistently and significantly more lengthened than the bases to which they attach. In the ProsodicWord category (PW, blue solid lines), affixes also behave as expected in all three corpora: They are less lengthened than their bases. In the CliticGroup category (CG, green dashed lines), however, affixes are less lengthened than their bases in all three corpora, although they were expected to be more lengthened.
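The direction of these base-affix contrasts can be checked against the fixed effects in Table 6. A minimal sketch, assuming treatment coding with base and Integrating (INT) as reference levels: within each prosodic category, the affix-minus-base difference is the "type of morpheme affix" coefficient plus, for CG and PW, the corresponding interaction term; the intercept and the covariates cancel out. For the Audio BNC, the difference also depends on relative frequency through its interaction with type of morpheme, so the sketch evaluates it where relative frequency equals zero. It recovers point estimates only; significance would require the covariance matrix of the fixed effects, which Table 6 does not report. The names `COEFS` and `affix_minus_base` are hypothetical.

```python
# Fixed effects from Table 6. Assumption: treatment coding with "base" and
# "Integrating" (INT) as reference levels; CG and PW are contrasts against INT.
COEFS = {
    "Audio BNC": {"affix": 0.0223, "affix:CG": -0.0374, "affix:PW": -0.0571},
    "QuakeBox": {"affix": 0.0804, "affix:CG": -0.0929, "affix:PW": -0.1790},
    "ONZE": {"affix": 0.0477, "affix:CG": -0.0796, "affix:PW": -0.1092},
}

def affix_minus_base(coefs: dict, category: str) -> float:
    """Affix minus base duration difference within one prosodic category (point estimate)."""
    if category == "INT":
        return coefs["affix"]
    return coefs["affix"] + coefs[f"affix:{category}"]

for corpus, coefs in COEFS.items():
    diffs = {cat: round(affix_minus_base(coefs, cat), 4) for cat in ("INT", "CG", "PW")}
    print(corpus, diffs)  # positive = affix more lengthened than base
```

In all three corpora the INT difference comes out positive and the CG and PW differences negative, which is the pattern described above, including the unexpected sign for CliticGroup affixes.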

Second, let us examine the contrasts between the prosodic categories within the respective domains of base and affix (significance brackets to the left and right of the plots, respectively). Let us look at the base condition first. We can see that in the Audio BNC, ProsodicWord bases are most lengthened, CliticGroup bases are less lengthened, and Integrating bases are least lengthened. Expressed in terms of the lengthening hierarchy formulated in Table 1 (Section 2.2), the Audio BNC thus follows exactly the expected pattern ProsodicWord > CliticGroup > Integrating. However, two of the contrasts are not significant: Only ProsodicWord and Integrating bases differ significantly, so a more accurate formulation would be ProsodicWord = CliticGroup and CliticGroup = Integrating, but ProsodicWord > Integrating. The QuakeBox and ONZE corpora, too, are consistent with the expected lengthening hierarchy. However, in the two New Zealand corpora, the contrast between the clitic-group-forming category and the integrating category does not reach significance. This results in the hierarchy ProsodicWord > CliticGroup = Integrating: Bases with prosodic-word-forming affixes are most lengthened, followed by bases with clitic-group-forming affixes and bases with integrating affixes.

Third, let us look at the affix condition. In the Audio BNC, integrating affixes are most lengthened, followed by prosodic-word-forming affixes and clitic-group-forming affixes. This is a different pattern from the expected one (which is CliticGroup > Integrating > ProsodicWord). However, since none of these contrasts are significant, the lengthening hierarchy is more accurately represented as Integrating = ProsodicWord = CliticGroup. In the QuakeBox corpus, Integrating affixes are most lengthened, followed by CliticGroup affixes and ProsodicWord affixes. The contrast between the integrating affixes and the other two affix types is significant, so that we are dealing with this hierarchy: Integrating > CliticGroup = ProsodicWord. Lastly, we also observe deviations from the expected lengthening hierarchy for the ONZE corpus. The ONZE affixes are most lengthened in the Integrating condition, followed by the ProsodicWord condition and the CliticGroup condition. This results in the pattern Integrating > ProsodicWord = CliticGroup.
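The orderings described above can likewise be reproduced from Table 6. The sketch below, under the same assumed treatment coding (reference levels base and INT), computes a partial prediction for each cell relative to the base/INT reference cell and ranks the three prosodic categories within each domain. Because relative frequency and the covariates do not interact with prosodic category in the final models, they do not affect rankings within a domain. The ranks reflect point estimates only: the equalities in the hierarchies above (e.g., CliticGroup = ProsodicWord) come from significance tests that this sketch does not reproduce. All names are hypothetical.

```python
# Fixed effects from Table 6. Assumption: treatment coding with reference levels
# "base" (type of morpheme) and "INT" (prosodic category).
COEFS = {
    "Audio BNC": {"affix": 0.0223, "CG": 0.0196, "PW": 0.0421, "affix:CG": -0.0374, "affix:PW": -0.0571},
    "QuakeBox": {"affix": 0.0804, "CG": 0.0204, "PW": 0.0943, "affix:CG": -0.0929, "affix:PW": -0.1790},
    "ONZE": {"affix": 0.0477, "CG": 0.0050, "PW": 0.0488, "affix:CG": -0.0796, "affix:PW": -0.1092},
}
CATEGORIES = ("INT", "CG", "PW")

def cell_effect(coefs: dict, morpheme: str, category: str) -> float:
    """Partial prediction for one cell relative to the base/INT reference cell."""
    effect = 0.0 if category == "INT" else coefs[category]
    if morpheme == "affix":
        effect += coefs["affix"]
        if category != "INT":
            effect += coefs[f"affix:{category}"]
    return effect

def ranking(coefs: dict, morpheme: str) -> list:
    """Prosodic categories ordered from most to least lengthened (point estimates only)."""
    return sorted(CATEGORIES, key=lambda cat: cell_effect(coefs, morpheme, cat), reverse=True)

for corpus, coefs in COEFS.items():
    print(corpus, "base:", " > ".join(ranking(coefs, "base")),
          "| affix:", " > ".join(ranking(coefs, "affix")))
```

The affix rankings come out as INT > PW > CG (Audio BNC), INT > CG > PW (QuakeBox), and INT > PW > CG (ONZE), and the base ranking is PW > CG > INT in all three corpora; these are the orderings reported above before significance is taken into account.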

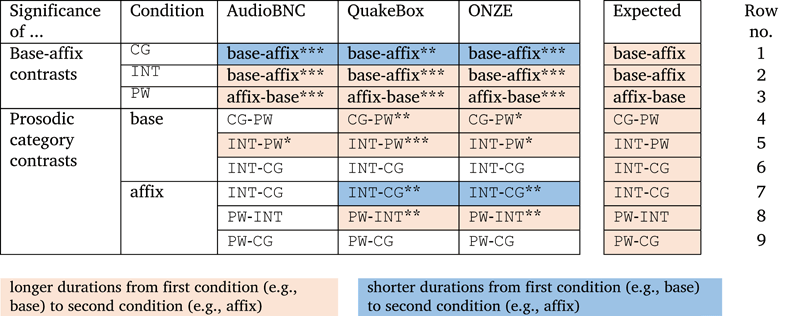

We can now evaluate all of our contrasts in terms of how the observed lengthening hierarchies overall fit with the expected hierarchies. Table 7 summarizes the significance levels for all measured contrasts we have discussed in the three corpora, color-coded for the direction of a given effect. For convenience, the last column of this table displays the directional changes in duration we would have expected based on the hypotheses outlined in Section 2.2. We set up the contrasts for this table so that in every cell, we expect more lengthening from the first condition (for example base or CG) to the second condition (for example affix or PW). More lengthening is indicated by orange shading. In cases where we observe less lengthening, we use blue shading.
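The coding scheme of Table 7 amounts to a small decision rule. A sketch under stated assumptions: each contrast is expressed as the duration difference of the second condition minus the first, so that a positive value is the expected direction (more lengthening in the second condition); a conventional α of .05 is assumed; and the inputs in the usage example are hypothetical, not values taken from Table 7.

```python
from collections import Counter

ALPHA = 0.05  # assumed significance threshold

def classify_contrast(diff: float, p_value: float, alpha: float = ALPHA) -> str:
    """Code one Table 7 cell.

    diff is (second condition - first condition); by construction of the table,
    a positive diff means more lengthening, i.e. the expected direction.
    """
    if p_value >= alpha:
        return "not significant"
    return "in line (orange)" if diff > 0 else "contradicts (blue)"

# Hypothetical contrasts for illustration only (not taken from Table 7).
cells = [(0.021, 0.001), (-0.015, 0.003), (0.004, 0.41)]
tally = Counter(classify_contrast(d, p) for d, p in cells)
print(tally)
```

Applied to all nine contrasts in each of the three corpora, such a rule yields the 27 cells summarized in Table 7.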

Overall, we can observe that many, but not all, of our expectations are confirmed by the data. Out of the 27 expectations (cells), 13 are in line with the predictions, five contradict the predictions, and nine do not reach significance. Prosodic contrasts within the base condition (Table 7, rows 4–6) are in line with the expectations wherever they reach significance, but two of the prosodic contrasts in the affix condition (row 7) go in the opposite direction from what was expected. A similar situation holds for the base-affix contrasts: The Integrating and ProsodicWord conditions (rows 2–3) behave as expected, while the CliticGroup condition (row 1) contradicts our expectations. The nine non-significant contrasts all pertain to the prosodic category contrasts (rows 4–9). Overall, only three out of nine rows are completely in line with the hypotheses.

Together with the findings for relative frequency, we can now relate all our findings to the hypotheses as follows. First, as we saw earlier, Hseg1 is largely not supported, despite one effect in the expected direction in the Audio BNC: Higher relative frequency is generally not associated with longer durations. Second, Hseg2 is not supported either, as there was not a single significant interaction between relative frequency and prosodic category: How integrated an affix is into prosodic word structure does not modulate whether higher relative frequency can protect against reduction. In both cases, we have very strong evidence for the respective null hypothesis. Third, based on the findings summarized in Table 7, the base-affix contrast in the CliticGroup category (HCG), as well as several contrasts between the prosodic categories (Hpros1–6), particularly within the affix domain, do not behave as expected: Durations often do not pattern according to prosodic boundary strength. The prosodic category approach therefore cannot satisfactorily explain the patterning of the durational differences. We will now proceed to discuss these findings in more detail, returning to the theoretical assumptions of segmentability and prosodic word structure.

5. Discussion

We discuss our findings in the light of the hypotheses given in Section 2, which we repeat here for convenience:

- (1) Hseg1: The higher the relative frequency of a derivative (i.e., the more segmentable a derivative is), the longer will be its affix duration and base duration.
- (2) Hseg2: The more prosodically integrated an affix is (i.e., the weaker the prosodic boundary), the less likely it is that higher relative frequency can protect the derivative against reduction.
- (3)
  - HPW: ProsodicWord bases should be more lengthened than ProsodicWord affixes.
  - HINT: Integrating affixes should be more lengthened than Integrating bases.
  - HCG: CliticGroup affixes should be more lengthened than CliticGroup bases.
  - Hpros1: ProsodicWord bases should be more lengthened than Integrating bases.
  - Hpros2: Integrating affixes should be more lengthened than ProsodicWord affixes.
  - Hpros3: CliticGroup affixes should be more lengthened than Integrating affixes.
  - Hpros4: CliticGroup bases should be more lengthened than Integrating bases.
  - Hpros5: CliticGroup affixes should be more lengthened than ProsodicWord affixes.
  - Hpros6: ProsodicWord bases should be more lengthened than CliticGroup bases.

Three main findings emerge from our analysis: (1) Hseg1 is largely not supported by our data (with one exception), (2) Hseg2 is not supported by our data, and (3) HPW, HINT, HCG as well as Hpros1–6 are only partly supported: HCG and some of the Hpros1–6 contrasts are contradicted by the data.