1. Introduction

A central topic in linguistics is understanding how humans learn linguistic patterns in order to become competent speakers. Universal and language-specific mechanisms are said to guide the learner through the learning process (e.g., Baer-Henney, Kügler, & van de Vijver, 2015; Culbertson, Smolensky, & Legendre, 2012; Kakolu Ramarao, Tang, & Baer-Henney, 2023; Moreton, 2008). A powerful and increasingly popular tool for investigating these mechanisms is the artificial language learning (ALL) paradigm. The paradigm allows for monitoring many aspects of language learning. With a relatively simple laboratory setting, we can observe mechanisms guiding language acquisition and language learning, and compare the two. In such an experiment, an artificial miniature lexicon is governed by a certain grammatical pattern. Participants are first exposed to the artificial language, and a subsequent perception or production test examines whether the pattern has been learned.

Artificial languages are not only useful to track the acquisition path of the language-learning child (Berko, 1958; Kakolu Ramarao, Zinova, Tang, & van de Vijver, 2022; van de Vijver & Baer-Henney, 2014); they have also been used to investigate learning mechanisms in both children (Chambers, Onishi, & Fisher, 2003; Cristià & Seidl, 2008; Culbertson & Newport, 2015; White & Sundara, 2014) and adults (Baer-Henney & van de Vijver, 2012; Carpenter, 2010; Finley, 2011, 2017; Finley & Badecker, 2009; Martin & White, 2021; Moreton, 2008; Onishi, Chambers, & Fisher, 2002; Pater & Tessier, 2003; Wilson, 2006). Researchers have uncovered evidence for learning mechanisms, the so-called biases that are said to guide the learner during the learning process (e.g., Baer-Henney et al., 2015; Carpenter, 2010; Culbertson & Newport, 2015; Culbertson et al., 2012; Finley, 2011, 2017; Finley & Badecker, 2009; Martin & White, 2021; Moreton, 2008; Tang, Kakolu Ramarao, & Baer-Henney, 2022; van de Vijver & Baer-Henney, 2014; Wilson, 2006). The consequence of biases is that certain phonological patterns are learned with more ease than others. Linzen and Gallagher (2017), for instance, have shown that English-speaking participants rapidly take up a phonological pattern from an artificial language that requires two consonants of the artificial stimulus to be identical. After short training, the identical consonant pattern is predominantly generalised (as compared to a non-identical consonant pattern). Learning mechanisms like this one are viewed as underlying mechanisms that all humans share.

However, when utilising the ALL paradigm, there is the risk that the learning effects experimenters observe are actually unintended byproducts of the native language of the learner and/or the artificial language to be learned during the experiment. The present paper offers a way to disentangle a possible learning effect from artefacts of the native and the artificial language during artificial language learning. In order to provide convincing evidence, ALL research should fulfill the following criteria. First, convincing evidence for the universality of mechanisms should address multiple languages or replicate effects across languages. Second, a possible effect should be attributable to learning only, and should not be explicable by characteristics of either the native language of the speaker or the artificial language used in the paradigm. Fulfilling both criteria can pose some methodological problems, as we will discuss in the following section. In the present paper, we offer an approach to address these problems. The approach facilitates designing cross-linguistic studies by statistically controlling for linguistic factors of the artificial and the native language. Several measures of wordlikeness are incorporated into the analysis as covariates.

1.1. Challenges in stimulus design in ALL research

Stimuli in ALL studies are typically designed by combining a subset of the L1 phoneme inventory (Baer-Henney et al., 2015; Skoruppa, 2019; White et al., 2018), or by simply replacing sounds in subsyllabic positions of real words (Keuleers & Brysbaert, 2010). This is to ensure that the items conform to the phonotactics of the participants’ L1. We believe that the desire to come up with AL stimuli that are similar to and yet different from the L1 carries the risk of overlooking peculiarities of the L1 that may affect the outcome of an ALL study. In the remainder of this section, we discuss the challenges ALL research faces and a number of problems that arise from this methodology.

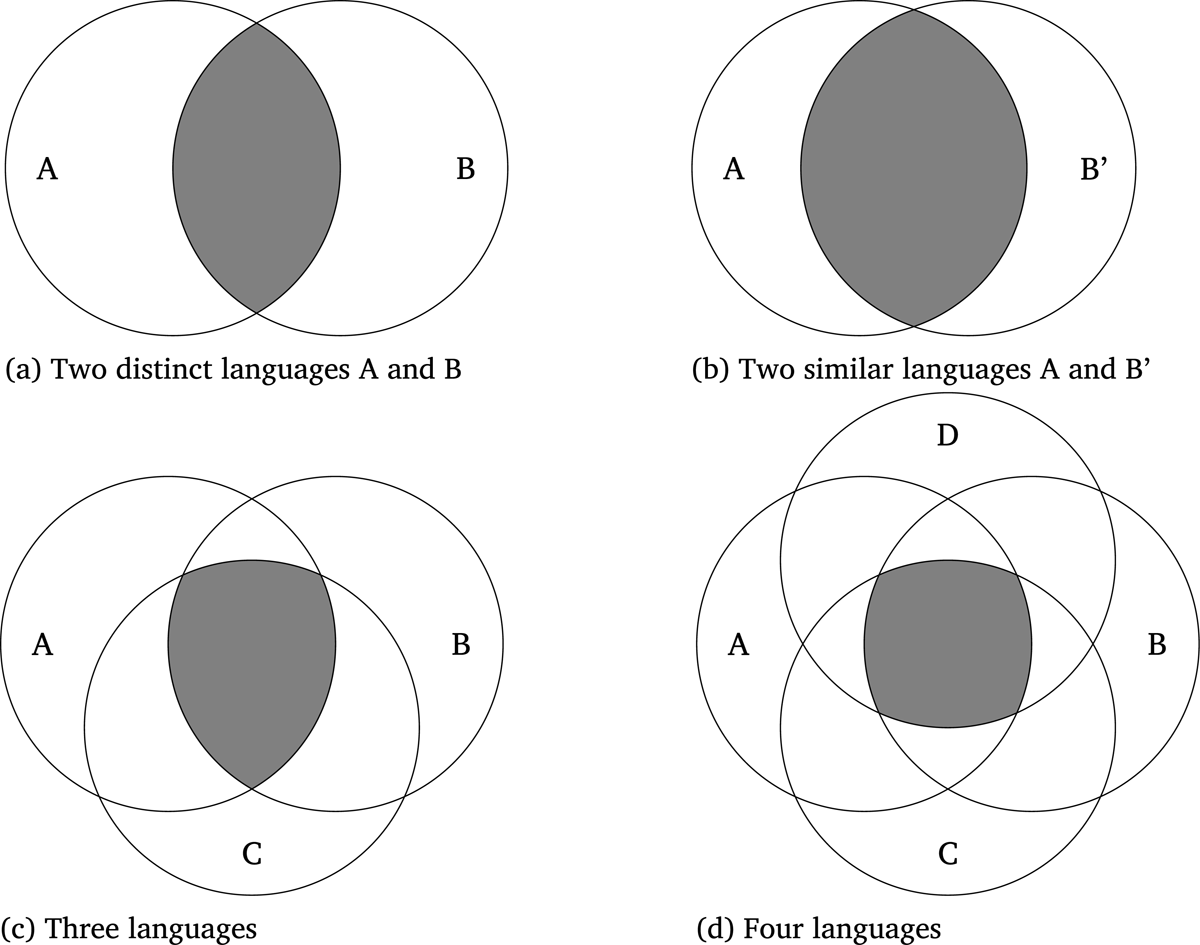

When conducting an ALL study, researchers face several challenges. One of them concerns investigating the same mechanism with different speaker populations. Commonly, the same set of language materials is created for all language groups in order to allow a better comparison. However, the language material cannot contain linguistic properties that differ too widely from those of the native languages. As the number of languages involved in the study increases, the design space of the material becomes more restricted. Consider a cross-linguistic study such as that of White et al. (2018), which could raise some difficulties. The most basic criterion in this approach is to ensure that the languages share the same phonemes. See Figure 1 for an illustration of the problem. In the best scenario, the number of overlapping phonemes would be reasonably large if the two languages are within the same language family (e.g., English and German) (Figure 1b). If the two languages are more distinct (e.g., German and Mandarin), the number of overlapping phonemes is small (Figure 1a). However, to better establish the universality of learning mechanisms, one must increase the number of languages and select languages that are more distinct, resulting in a highly reduced subset of phonemes (Figure 1c and Figure 1d).
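The shrinking design space can be made concrete with a small sketch. The inventories below are invented toy sets with placeholder language names (`lang_A` to `lang_D`); they are not the phoneme inventories of any real language, and the helper names are hypothetical. The sketch only shows that the set of shared phonemes can never grow as languages are added.

```python
from functools import reduce

# Toy inventories with made-up contents; NOT real phoneme inventories.
toy_inventories = {
    "lang_A": {"p", "t", "k", "m", "n", "s", "l"},
    "lang_B": {"p", "t", "k", "m", "n", "f", "r"},
    "lang_C": {"p", "t", "m", "n", "x", "w"},
    "lang_D": {"t", "k", "n", "s", "j"},
}


def shared_phonemes(inventories, languages):
    """Return the phonemes common to every language in `languages`."""
    return reduce(set.intersection, (inventories[lang] for lang in languages))


def overlap_sizes(inventories, order):
    """Size of the shared inventory as languages are added one by one."""
    return [
        len(shared_phonemes(inventories, order[:k]))
        for k in range(1, len(order) + 1)
    ]


sizes = overlap_sizes(toy_inventories, ["lang_A", "lang_B", "lang_C", "lang_D"])
print(sizes)
```

Because each step intersects with one more inventory, the sequence of sizes is non-increasing by construction, which is the restriction illustrated in Figure 1.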

With only a small subset of phonemes available, the research potential of the ALL paradigm becomes limited in terms of the type of mechanisms that can be examined and the number of artificial language stimuli used in the experiment (henceforth: nonwords) that can be generated. In sum, ALL experiments in phonology usually ensure that the miniature artificial language broadly conforms to the typical word shapes of the learners’ L1 in order to minimise the potential negative effects of being too different from the learners’ L1. For instance, learners could fail to perceive the nonwords correctly due to novel phonotactic patterns. At the same time, the nonwords in the miniature artificial language are designed to be different from the real words of the learners’ L1 in order to minimise the interference from existing word knowledge. This balance of trying to be similar and yet different from the participants’ L1 is difficult to strike. This is particularly an issue for cross-linguistic studies if a mechanism is found to be at work only in some language groups but not others. This could be driven by a difference in how this balance was struck across the language groups.

A second challenge is to cope with the fact that different native languages can influence learning differently. A wealth of literature on Second Language Acquisition (see e.g., Edwards & Zampini, 2010; Kroll & De Groot, 2009, for an overview) has highlighted the role of language transfer in second language learning. When learning a new language, L1 influences how L2 (and L3) is learned. It has been shown that the same L2 is learned differently by learners with different L1s (Iverson & Evans, 2007, 2009; Schepens, van Hout, & Jaeger, 2020), and the similarity between L1 and L2 has been shown to have both a facilitatory effect and an inhibitory effect on language learning. Yet ALL experiments on phonological research questions typically overlook the possible interaction between the learners’ L1 and the AL. Learners are faced with an artificial language, but the AL is not controlled for possible L1 effects. A particular L1 might interact differently with different artificial languages (see Onnis and Thiessen (2013) for such an effect in syntactic learning). The specific choice of speaker population to be tested can therefore have consequences for the outcome of the study.

One risk is that researchers could select languages that work against the mechanism of interest to best evaluate its robustness. For instance, an ALL study by Yin and White (2018) found an effect of homophony avoidance with L1-English participants. In this study, L1-English learners were biased against phonological patterns that create homophony. However, the fact that English does not have a high degree of homophony could be driving the effect of homophony avoidance. Therefore, this study could benefit from a replication with speakers of a homophone-rich language, such as Mandarin Chinese, to evaluate the universality of homophony avoidance. Consider the following two hypothetical examples: (1) In the specific choice of artificial items chosen by the researchers, they could overlook hidden “subpatterns” in L1. A specific nonword might resemble 80% of L1 words that follow the pattern of interest while the L1 as a whole does not display a pattern. For instance, in an experiment teaching a fronting pattern experimenters might overlook how the specific phonological contexts in the nonwords are accidentally very similar to a subpart of the learners’ L1 lexicon in which front sounds are very common, even though the overall lexicon does not display such a rule. (2) Researchers could over-rely on broad generalisations (e.g., L1 has vowel harmony or L1 is a trochaic language). The information that vowel harmony may only apply to a certain word class or that trochees are predominantly found in disyllabic words but not in trisyllabic words may be missed.

Let us take a closer look at what solutions to the abovementioned problems the ALL and psycholinguistic literature offers, and which problems are still to be solved. One approach used by some studies is to run a control condition in which there is only the test phase without training (Finley & Badecker, 2012), or a control condition with an uninformative training phase not containing critical items (Finley, 2011). The control group is then compared with the experimental group that received training. There are two main issues with the control group approach of dealing with L1 effects. First, the strength of the L1 effect might differ across the two groups. Let us assume that the L1 has a similar effect as the hypothesised learning mechanism on the AL test items. The control group approach relies on the assumption that the L1 affects the control group and the experimental group equally, because the difference in the responses to the test items is interpreted as evidence for the learning mechanism at work. However, should the L1 affect the two groups unequally, then the effect of the mechanism could be masked or falsely supported by the difference in the responses of the groups. The effect might be masked when the L1 effect is stronger in the control group than in the experimental group because, for instance, there is simply no AL input to rely on. As a result, the difference between the two groups is evened out. The effect might be falsely supported when the L1 effect is stronger in the experimental group than in the control group because, for example, the statistical patterns of the AL input prime those same patterns in the L1, thus enhancing the L1 effect. In other words, languages which already display a systematic pattern (in either direction) in their L1, and therefore in a control group, will not be evaluated accordingly, since there would be no way to disentangle the influence of the mechanism at work from that of prior knowledge.
Second, the control group approach could lead to potential misinterpretation of seemingly contradicting findings from different language groups. There is the risk that differences between control and experimental groups are bigger in one language population but smaller or absent in another language population. This would, in fact, suggest that the mechanism is not universal.

Another approach is to construct AL stimuli that have been controlled for their L1 wordlikeness. The following studies examine the existence of the patterns of interest using a lexicon. Baer-Henney (2015), for instance, used frequency counts of types and tokens to establish hypotheses regarding the outcome of the experiment if participants were to mirror lexical statistics. Similarly, Seidl and Buckley (2005) excluded a pre-existing bias on the basis of a global check of the lexicon in which they find that both patterns under investigation occur equally often. Other studies dedicated parts of their discussion to a simple check of lexical frequencies and find their results matching (Greenwood, 2016) or mismatching (Finley & Badecker, 2012; Myers & Padgett, 2014) the lexical statistics, concluding whether lexical knowledge could have explanatory power in the specific case or not. What these studies have in common is that they check and/or discuss lexical statistics, but they do not incorporate them into the analysis. These studies evaluate lexical statistics that concern the pattern of interest in the artificial language and they suggest not testing a population of speakers in which the pattern is already part of the language. While this way a pattern of interest may be under control (e.g., a pattern related to consonants), another one may not (e.g., a potential pattern related to vowels). It is thus possible that the researcher unintentionally uses particularly wordlike nonwords or that certain nonwords are similar to a subpart of the L1 lexicon.

In fact, in the psycholinguistic literature outside the context of ALL, efforts to evaluate the wordlikeness of nonwords across multiple languages have been similarly limited, with a few exceptions. For instance, in nonword repetition research, Boerma et al. (2015) and Howell et al. (2017) have created quasi-universal nonwords for the purpose of designing a nonword repetition task that would function well with a large number of languages with minimal bias towards a particular language. In these studies the nonwords are controlled for their phonological structures and the number of phonological neighbours. In phonological research, T.-Y. Chen and Myers (2021) created a platform for collecting wordlikeness judgements in an effort to encourage researchers to share their judgement data towards the compilation of a mega database of cross-linguistic wordlikeness judgements. While researchers could select nonwords that are similarly wordlike with this database, this project is still a work in progress and the number of languages covered is very limited. Methodologically, there are nonword generation tools that can generate nonwords based on user-specified lexical properties (e.g., Duyck, Desmet, Verbeke, & Brysbaert, 2004; Keuleers & Brysbaert, 2010). However, they were not designed for selecting cross-linguistic nonwords (at least not without further development; see Eden (2018) for a cross-linguistic approach to measuring phonological distances). Finally, this approach does not extend well to multiple languages, especially if the languages are typologically different, since the more languages we try to take into account, the harder it is to create nonwords that are similarly wordlike across the languages. In sum, we believe that this approach is not appropriate for ALL research on the universality of learning mechanisms, and in the following section we introduce an approach to improve the control over the design of an artificial language in ALL research.

1.2. A lesson from psycholinguistics

Already in 1996, a critical review of the ALL paradigm by Redington and Chater (1996, p. 129) highlighted the importance of item control. It was stated that “Just as psycholinguists routinely control for word frequency, cloze value, and the like, so researchers should routinely control for factors such as bigram and trigram frequencies, if they intend to eliminate hypotheses based on knowledge of such simple fragments, rather than, for example, memory for whole strings, the extraction of an underlying grammar, and so forth.”

Building on this insight, the present paper harnesses methodologies from the psycholinguistic literature on lexical processing and human learning: Rather than controlling unwanted L1 and AL factors in the experimental design through stimuli selection, we take lexical statistics of L1 and AL into account in a regression model (Baayen, 2004, 2010). Controlling for L1 and AL effects means that we monitor whether the wordlikeness of test items to L1 words or to the artificial training items played a role in learning. Subjects could prefer test words that are similar to the L1 lexicon and to the AL lexicon in two ways. A test item can be similar to the L1/AL and can be supported by L1/AL lexical statistics in terms of a) the target AL pattern of interest, and b) other non-target patterns. In fact, the methodological decisions made in an ALL study by Boll-Avetisyan and Kager (2016) exemplify the necessity of including lexical statistics in a regression model, since controlling unwanted factors through stimuli selection is not always possible. In their study, the authors attempted to control for wordlikeness of L1. During stimuli selection, the authors were able to ensure that their nonwords were balanced across conditions in terms of their positional syllable frequencies, transitional probabilities between phonemes, and lexical neighbourhood density. However, they were unable to further control for cohort density, a measure related to lexical neighbourhood density. As a result, the authors added cohort density as a covariate in the statistical models in order to capture its potential L1 influence on the learners when tested with the AL materials; it turned out to be a significant variable.

This approach serves two particular purposes in ALL. First, in an ALL experiment with only one language group, the effects of L1 and AL can be regressed out to reveal the existence of the learning effect, even without a control group. Second, in an ALL experiment with multiple language groups, the effects of the respective L1s can be regressed out to evaluate the universality of the learning effect. This approach overcomes the aforementioned shortcomings of using a control group and of using stimuli selection to control for L1 effects, offers a control for the interference of the AL lexicon, and gives additional insights into language learning. Regressing L1 and AL effects gives us the ability to understand L1 and AL effects and how they jointly affect learning. Learners may learn other patterns of the AL lexicon, as well as the intended patterns that are relevant to the learning effect of interest. By controlling for the wordlikeness of test items to training items with regression, we can better understand whether the learning effect is at play. We are therefore less likely to miss an effect even if it is inherently small and possibly masked by L1 and AL. If an effect were masked in this way, no difference between control and experimental groups would arise. By taking L1 and AL into account as covariates, subtle effects have a better chance of being detected. By regressing on the effects of multiple L1s instead of a categorical variable of language groups, researchers would be able to understand the cause of any potential language group differences. The statistical model enables us to better separate the native language effect from the learning effect by having gradient language factors (compared to a categorical factor of language groups).
An example of this approach to understanding cross-linguistic differences can be found in a phonetic study by Burchfield and Bradlow (2014) on the syllable reduction effect in Mandarin and English (see Günther, Smolka, and Marelli (2019) for another example). The authors observed cross-linguistic differences, with Mandarin showing more syllable reduction than English. Crucially, this language group difference was reducible to the differences in the relative proportions of open and closed syllables across these languages. In other words, the observed cross-linguistic differences in the dependent variable are determined by specific cross-linguistic structural differences.
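The regression-based control described in this section can be written schematically as a logistic mixed-effects model. The following is only a sketch under assumptions of ours: a single wordlikeness covariate per lexicon, random intercepts for participants and items, and the predictor names themselves are illustrative and are not taken from the models reported later in the paper. The notation assumes the `amsmath` package for `\operatorname` and `\text`.

```latex
\[
\operatorname{logit} P(\text{accept}_{ij} = 1)
  = \beta_0
  + \beta_1\,\text{Identity}_{j}
  + \beta_2\,\text{L1wordlikeness}_{j}
  + \beta_3\,\text{ALwordlikeness}_{j}
  + u_i + w_j ,
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
w_j \sim \mathcal{N}(0, \sigma_w^2)
\]
```

Here $i$ indexes participants and $j$ indexes test items. Under this sketch, a learning effect corresponds to $\beta_1$ remaining reliably different from zero once the gradient L1 and AL covariates ($\beta_2$, $\beta_3$) are in the model.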

We illustrate our proposed approach by evaluating the impact of the L1 in artificial language learning. The study by Linzen and Gallagher (2017) on the identity effect in English will serve as a starting point for the investigation of the relevance of several factors from the L1 and AL lexicons. We adopted their paradigm because it investigates a general type of learning (not as specific as dealing with a learning bias), and the findings will be applicable to all learning studies, including the strand of ALL studies concerning possibly universal biases. Linzen and Gallagher (2017) trained English speakers with the same number of artificial words containing identical and non-identical consonants. Natural language is likely to have fewer words containing identical consonants than those containing non-identical consonants (Graff & Jaeger, 2009; see references within Tang & Akkuş, 2022). Thus, the training set exhibited an overrepresentation of consonant identity nonwords relative to chance and this led speakers to be more likely to accept new items as part of the learned language if they conformed to the identity pattern. We describe the details of the experiment in the section below. Linzen and Gallagher (2017) did run a control version of their experiment to rule out the possibility of a pre-existing bias resulting from words of the L1 rather than from the exposure phase. Participants from the control group received no training and showed no preference for identical consonant frames, while participants from the experimental group showed a preference. Nonetheless, the authors acknowledged that the possible influence of L1 and the memory of AL exposure material seems to be an understudied problem in ALL research (Linzen & Gallagher, 2017, p. 26).

The paper is organised as follows: Section 2 outlines the details of our study. We first introduce the logic of the original study by Linzen and Gallagher (2017) before we present the materials (2.1) of the two artificial languages for our Mandarin and German language groups, followed by the methodological details of our study (2.2). Section 2.3 introduces the variables of interest and their implementations. In this section, we will introduce the language-dependent variables (Section 2.3.1) and language-independent variables (Section 2.3.2). Section 3 presents our results for German and Mandarin speakers. Section 4 reviews our findings regarding the original identity effect, task effects, and L1 and AL effects before closing with a section on implications for learning research. Section 5 concludes the paper.

2. The present study

We adopted the ALL experiment of Linzen and Gallagher (2017), in which the authors detected learning of an identity pattern after short exposure.

Linzen and Gallagher (2017) tested English speakers. In their experiment participants listened to artificial language input during training. During the test, they were asked if unattested and attested items could be accepted as part of the artificial language. Specifically, we adapted their version 2a of the experiment (Linzen & Gallagher, 2017, p. 10), in which the pattern to be learned is a probabilistic abstract generalisation and does not make reference to the phonetic properties of any particular sound. We adjusted stimuli and training length to our needs. The artificial language input in training was ambiguous in the sense that half of the items conformed to an identity constraint; the other half did not. Items conforming to the identity constraint contained an identical consonant in each consonant position. We refer to these CaVCaV items as items with identical C_C_ frames. Items not conforming to the identity constraint contained different consonants in each consonant position. We refer to these CaVCbV items as items with non-identical C_C_ frames. During the test, participants were asked if attested and unattested items (with the same number of identical and non-identical C_C_ frames) could be accepted as part of the artificial language. Note that in this design there is no correct or incorrect answer to whether a given stimulus belongs to the artificial language the participants were exposed to, since there was an equal number of identical and non-identical C_C_ frames during the training and test phases.1 Despite equal input of identical and non-identical C_C_ frames, participants were more likely to accept items with the identity pattern than non-identical items. This captures the preference with which participants are willing to take up patterns and generalise them to new material.
While the input contains more identical nonwords than non-identical nonwords relative to chance, participants show rapid learning of this phonotactic pattern, namely the overrepresentation of identical nonwords. This tendency to overestimate the identity pattern as part of the new language is what Linzen and Gallagher (2017) refer to as the identity effect.

The present study aims to extend the examination to two radically distinct languages and uses the method as a test case to investigate the role of the lexicon of both the native language and the AL in ALL. For the present study we created a Mandarin and a German artificial language version. Using a language-adapted AL lexicon we familiarised German and Mandarin speakers with an artificial language and aimed to (1) replicate the original effect in two other languages, and (2) track if and how L1 and AL lexical knowledge contribute to our learners’ behaviour. We investigated several types of lexical knowledge that could have influenced performance in an ALL task.

To measure the influence of a learner’s L1 (German/Mandarin) and AL (the training nonwords) on the task, we selected three lexical variables that reflect different types of lexical knowledge. We investigated the influence of (1) activation diversity, a measure of distributions of co-occurring features across the lexicon during lexical selection, (2) neighbourhood density, a classic lexical variable for modelling analogical learning, and (3) the probability of the specific C_C_ frame, a variable which highlights a potential attention effect by the participants to the CVCV template as it was used by all of the nonwords in the experiment. While the first two variables control the artificial languages’ similarity to the learners’ L1 in a general way, the last variable, consonant frame probability, controls more directly for AL similarity. Consonant frame probability is a control variable that looks at the specific pattern in the artificial item and tells us how often this pattern is found in the L1 lexicon. Neighbourhood density (the measure resulting from a Generalized Neighbourhood Model (GNM)) and activation diversity (the Naive Discriminative Learning (NDL) measure) look at L1 similarity more generally. Together they enable us to examine the role of the lexicon at different levels of specificity: over any general dimensions (vowels, consonants, or features) or over the target AL pattern.
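To give an intuition for the difference between a general and a pattern-specific lexical variable, the sketch below computes two deliberately simplified stand-ins. The first is a classic one-edit neighbour count, which is not the GNM measure used in this study. The second is a type-based proportion of four-segment words sharing a consonant frame, which is an assumption of ours and not necessarily how frame probability is implemented in Section 2.3. The toy lexicon and all function names are hypothetical.

```python
def is_neighbour(a, b):
    """True if segment tuples a and b differ by exactly one
    substitution, insertion, or deletion."""
    if a == b or abs(len(a) - len(b)) > 1:
        return False
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    # Deleting one segment from the longer form must yield the shorter one.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def neighbourhood_count(item, lexicon):
    """Number of lexicon entries that are one edit away from `item`."""
    return sum(is_neighbour(item, word) for word in lexicon)


def frame_probability(frame, lexicon):
    """Share of four-segment words (assumed CVCV) whose consonants match `frame`."""
    cvcv = [w for w in lexicon if len(w) == 4]
    if not cvcv:
        return 0.0
    return sum((w[0], w[2]) == frame for w in cvcv) / len(cvcv)


# Made-up toy lexicon of segment tuples; not real German or Mandarin words.
toy_lexicon = [
    ("b", "a", "b", "i"),
    ("b", "a", "n", "i"),
    ("n", "a", "n", "u"),
    ("b", "o", "b", "a"),
    ("m", "a", "t"),
]

density = neighbourhood_count(("b", "a", "b", "u"), toy_lexicon)
p_frame = frame_probability(("b", "b"), toy_lexicon)
print(density, p_frame)
```

The neighbour count is sensitive to any segment of the item, whereas the frame proportion only looks at the two consonant positions, mirroring the general versus target-specific distinction drawn above.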

In sum, the present study is a cross-linguistic study on learning an identity constraint while controlling for language-specific variables; as such, it addresses several questions of interest and is applicable to other ALL studies as well. First, we aim to see whether the effect under investigation (here: identity) is a robust effect even if we take language-specific variables into account. By including L1 variables we test whether the identity effect originates from or may be masked by the speakers’ L1. By including AL variables we test whether the identity effect originates from or may be masked by the design of the artificial language used in the experimental learning situation. Only if the effect under investigation turns out to be robust, even when we take into account language-specific effects, can we argue for its existence. Second, if the effect under investigation shows up consistently across language populations, we are able to argue for its universality.

In the following sections we describe the materials (Section 2.1) and the technical details of the method (Section 2.2), and finally we report how we prepared for the evaluation of language-dependent and language-independent variables under investigation (Section 2.3).

2.1. Materials

We created a Mandarin and a German artificial miniature lexicon, out of which we chose individual training and test items for the ALL experiment. All items had a CVCV syllable structure with identical or non-identical consonant frames and tense language-specific vowels. The lexicon contained exposure items as well as attested and unattested test items. Half of these items conformed to an identity constraint on consonants and the other half did not.

Linzen and Gallagher (2017) tracked the learning of their artificial language with different amounts of exposure. The effect became apparent after only little exposure with few tokens per exposure item type. The identity effect was found in attested items after using two exposure tokens per type and in unattested items after using four exposure tokens per type (Linzen & Gallagher, 2017, experiment 2a). We therefore decided to use an equivalent set with three exposure sets to investigate influences of the native as well as the artificial lexicon.

Participants were assigned to one of four experimental groups: there was a German as well as a Mandarin version, and a frequent and an infrequent condition per language. For each group we sampled experimental stimuli from a group-specific item pool. In what follows we explain how stimuli for these pools were created before we turn to the question of how stimuli were distributed during the experiment.

2.1.1. Stimuli creation

For the Mandarin and German versions of the experiment we used a subset of language-specific consonants, since selecting a set of overlapping consonants of two typologically distinct languages would severely limit the number of possible nonwords, a problem that was illustrated in Figure 1. Our aim was to prepare four miniature lexicons: two per language, one where preselected consonant frames were relatively frequent and one where consonant frames were relatively infrequent according to the lexical statistics of the native language (L1). Token frequency was used as a proxy for L1 wordlikeness to select consonant frames for each subgroup.2 The frequency of consonant frames was estimated by calculating their Zipf values (a normalised frequency scale: log10 of the frequency per million plus three) (van Heuven, Mandera, Keuleers, & Brysbaert, 2014) in language-specific tokens.3 Each participant was presented with either the more frequent frames or the less frequent frames of one language.4
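The Zipf value as defined above (log10 of the frequency per million, plus three) can be sketched in a few lines. The function name and the handling of non-positive counts are assumptions of ours; the text does not say how unseen frames were treated, so the sketch simply rejects them.

```python
import math


def zipf_value(count, corpus_size):
    """Zipf value: log10(frequency per million tokens) + 3.

    `count` is the token count of a consonant frame and `corpus_size`
    the total number of tokens in the corpus. Raising on non-positive
    input is our assumption, since log10 is undefined there.
    """
    if count <= 0 or corpus_size <= 0:
        raise ValueError("count and corpus_size must be positive")
    per_million = count * 1_000_000 / corpus_size
    return math.log10(per_million) + 3


# A frame occurring once per million tokens versus once per thousand tokens.
print(zipf_value(1, 1_000_000), zipf_value(1_000, 1_000_000))
```

On this scale each additional unit corresponds to a tenfold increase in frequency per million tokens.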

The consonants used to construct the consonant frames were counterbalanced across two conditions: identity (identical vs. non-identical frames) and attestedness (attested vs. unattested frames). Concretely, two non-overlapping sets of consonants were used to construct the consonant frames, with one set being used to construct unattested, identical frames and attested, non-identical frames (e.g., [m, ph, h, kh] in the high-frequency German condition) and another set being used to construct attested, identical frames and unattested, non-identical frames (e.g., [b, th, n, ʁ] in the high-frequency German condition). See Table 1, which summarises the consonant frames used in the two languages, German and Mandarin, together with their frequency status. For half of the participants we set up a corresponding item set in which consonant frames of the exposure items/attested test items and unattested test items were interchanged.

Consonant frames and their lexical distributions in L1 lexicons.

| Language | Frequency | Identity | Exposure/Test (attested): Frame | TokenZipf | Test (unattested): Frame | TokenZipf |
|---|---|---|---|---|---|---|
| German | High frequency | Identical | b_b_ | 5.506 | m_m_ | 5.294 |
| | | | th_th_ | 5.047 | ph_ph_ | 5.219 |
| | | | n_n_ | 4.500 | h_h_ | 4.283 |
| | | | ʁ_ʁ_ | 4.015 | kh_kh_ | 4.085 |
| | | Non-identical | m_kh_ | 3.731 | ʁ_th_ | 5.158 |
| | | | h_ph_ | 3.519 | b_ʁ_ | 5.127 |
| | | | ph_m_ | 3.072 | th_n_ | 3.984 |
| | | | kh_h_ | 1 | n_b_ | 2.072 |
| | Low frequency | Identical | g_g_ | 3.644 | v_v_ | 2.373 |
| | | | l_l_ | 3.563 | z_z_ | 1.595 |
| | | | t͡s_t͡s_ | 1.896 | j_j_ | 1 |
| | | | f_f_ | 1 | d_d_ | 1 |
| | | Non-identical | z_d_ | 3.887 | l_g_ | 5.274 |
| | | | d_v_ | 2.709 | t͡s_l_ | 4.943 |
| | | | v_j_ | 1 | f_t͡s_ | 1 |
| | | | j_z_ | 1 | g_f_ | 1 |
| Mandarin | High frequency | Identical | t_t_ | 5.711 | th_th_ | 5.391 |
| | | | kw_kw_ | 4.784 | xw_xw_ | 4.809 |
| | | | w_w_ | 4.559 | l_l_ | 4.517 |
| | | | s_s_ | 4.055 | t͡sw_t͡sw_ | 4.234 |
| | | Non-identical | xw_l_ | 5.829 | t_w_ | 4.755 |
| | | | l_t͡sw_ | 3.181 | w_kw_ | 4.286 |
| | | | th_xw_ | 3.107 | s_t_ | 3.783 |
| | | | t͡sw_th_ | 2.319 | kw_s_ | 3.190 |
| | Low frequency | Identical | khw_khw_ | 3.976 | ph_ph_ | 4.052 |
| | | | t͡shw_t͡shw_ | 3.087 | ʈ͡ʂhw_ʈ͡ʂhw_ | 3.743 |
| | | | pj_pj_ | 2.905 | nj_nj_ | 3.280 |
| | | | ᶎ_ᶎ_ | 2.753 | thw_thw_ | 3.030 |
| | | Non-identical | thw_nj_ | 4.306 | khw_ᶎ_ | 2.6784 |
| | | | ph_thw_ | 3.456 | pj_t͡shw_ | 2.1733 |
| | | | nj_ʈ͡ʂhw_ | 1 | t͡shw_khw_ | 1 |
| | | | ʈ͡ʂhw_ph_ | 1 | ᶎ_pj_ | 1 |

Equipped with language-specific consonant frames, we generated all possible CVCV sequences using the Mandarin vowel set {aː, ai, au, əi, iː, eː, oː, uː, ɤː, əu, yː} and the German vowel set {aː, eː, iː, oː, uː}.5 Four constraints were applied to arrive at a final set of items. First, existing words were excluded. Second, we avoided token similarity by ensuring that no two items shared the same first vowel (we avoided stimuli such as naːno, naːne). Third, we likewise ensured that no two items shared the same second vowel (we avoided stimuli such as naːno, neːno). Fourth, we avoided vowel metathesis patterns across items (we avoided stimuli such as naːno, noːna). In the few remaining cases in which we had to pick a form that violated a constraint, we picked the one that did not share a vowel in a given position with the test item. If we still had no choice, we chose the one whose second vowel was identical to that of the test item. For the remaining items we computed their syllable-bigram6 probability and picked four CVCV tokens spread evenly across the spectrum of syllable-bigram probability. Mandarin is a tonal language with four lexical tones: the high tone (tone 1), the rising tone (tone 2), the low tone (tone 3), and the falling tone (tone 4). Mandarin items were realised with the falling tone (tone 4) on both syllables. The tone sequence 4–4 is the most common disyllabic word form in Mandarin (Lin, 2016). German items were trochaic. A stressed penultimate syllable followed by an unstressed final syllable corresponds to the most common word form in German (Wiese, 2000). An example item set for one German participant7 is illustrated in Table 2; a full list of artificial items for both German and Mandarin speakers in all groups can be found in Tables 10, 11, 12, and 13 of the appendix.
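The candidate generation and the three vowel-based similarity constraints can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, items are represented as (C1, V1, C2, V2) tuples for convenience, and the exclusion of existing words and the selection by syllable-bigram probability are not implemented because they depend on the reference lexicon.

```python
from itertools import product

GERMAN_VOWELS = ["aː", "eː", "iː", "oː", "uː"]  # German vowel set quoted in the text

def generate_cvcv(frame, vowels):
    """Return all (C1, V1, C2, V2) combinations for a consonant frame such as ("n", "n")."""
    c1, c2 = frame
    return [(c1, v1, c2, v2) for v1, v2 in product(vowels, repeat=2)]

def too_similar(a, b):
    """True if two items share a first vowel, share a second vowel,
    or are vowel metatheses of each other (constraints two to four)."""
    same_first_vowel = a[1] == b[1]
    same_second_vowel = a[3] == b[3]
    metathesis = a[1] == b[3] and a[3] == b[1]
    return same_first_vowel or same_second_vowel or metathesis

candidates = generate_cvcv(("n", "n"), GERMAN_VOWELS)
print(len(candidates))  # 5 vowels x 5 vowels = 25 candidates per frame
```

A candidate set for one frame would then be filtered so that no pair of selected items returns True from too_similar, falling back on the tie-breaking rules described above when that is impossible.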

Illustration of one item set presented to a participant in the frequent German group.

| | Training | Training | Training | Test: Attested | Test: Unattested |
|---|---|---|---|---|---|
| Identical C_C | beːbo | baːbu | boːbi | buːba | khoːkhi |
| | thiːtha | theːthu | thoːthi | thaːtho | haːhu |
| | naːni | noːnu | nuːne | neːno | pheːpha |
| | ʁeːʁa | ʁiːʁo | ʁuːʁi | ʁaːʁu | miːmu |
| Non-identical C_C | khoːha | kheːhi | khiːhu | khuːho | boːʁu |
| | haːphi | hiːphu | huːpho | hoːphe | ʁiːthu |
| | phaːmi | phuːme | phoːma | pheːmu | theːni |
| | moːkha | muːkhe | maːkhi | meːkho | noːba |

The two miniature item sets were recorded separately: one by a native speaker of Mandarin (Henan dialect) and one by a native speaker of Standard German. Items were recorded in the Mandarin carrier sentence “请你把 ___ 再说一遍” (Pinyin: qing3 ni3 ba3 ___ zai4 shuo1 yi1 bian4.) (en: Please say ___ once again.) or the German carrier sentence “Ich habe noch nie ___ gehört.” (en: I have never heard ___ before.). The stimuli were recorded in an anechoic booth in the phonetics laboratory at Heinrich-Heine University Düsseldorf. We extracted the stimuli and scaled their intensity to 70 dB using Praat (Boersma & Weenink, 2018).

2.1.2. Stimuli distribution

For each participant, there was an individual training and test set consisting of stimuli from the item pool of the respective experimental group (frequent German, infrequent German, frequent Mandarin, infrequent Mandarin). For each of the eight language-specific attested consonant frame types (four identical and four non-identical), each participant was given three randomly selected tokens per type (out of four tokens) as the training set, and the remaining fourth token was used as an attested test item. For each of the eight unattested consonant frame types (four identical and four non-identical), each participant was given one randomly selected token per type (out of four tokens) as an unattested test item. In total, each participant was given 24 tokens (three tokens × eight attested consonant frame types) in the training set, eight tokens (one token × eight attested consonant frame types) in the attested test set, and eight tokens (one token × eight unattested consonant frame types) in the unattested test set. The presentation order of exposure and test items was randomised within each experimental phase.
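The per-participant split described above can be sketched as follows. The function name, the dictionary layout of the item pools, and the placeholder token labels are assumptions made for illustration; the actual experiment was implemented in Experigen, not with this code.

```python
import random

def split_items(attested_pool, unattested_pool, rng):
    """Draw one participant's item sets.

    attested_pool / unattested_pool: dict mapping a frame label to its four tokens.
    Returns the shuffled training list, the two test subsets, and the shuffled test list.
    """
    training, attested_test, unattested_test = [], [], []
    for tokens in attested_pool.values():
        shuffled = rng.sample(tokens, k=len(tokens))  # random order without repeats
        training.extend(shuffled[:3])       # three tokens per attested frame for training
        attested_test.append(shuffled[3])   # the remaining token is an attested test item
    for tokens in unattested_pool.values():
        unattested_test.append(rng.choice(tokens))  # one token per unattested frame
    rng.shuffle(training)                   # randomise order within the training phase
    test = attested_test + unattested_test
    rng.shuffle(test)                       # randomise order within the test phase
    return training, attested_test, unattested_test, test

# Placeholder pools: eight frames per pool, four tokens per frame (labels are hypothetical)
attested_pool = {f"attested_{i}": [f"A{i}_token{j}" for j in range(4)] for i in range(8)}
unattested_pool = {f"unattested_{i}": [f"U{i}_token{j}" for j in range(4)] for i in range(8)}

training, attested_test, unattested_test, test = split_items(
    attested_pool, unattested_pool, random.Random(1)
)
print(len(training), len(attested_test), len(unattested_test))  # 24 8 8
```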

2.2. Methods

Experiments were run online using Experigen (Becker & Levine, 2013). Participants were asked to wear headphones. During training, every training item was played once. During the test, participants were asked whether each test item could be accepted as a word of the new language. The instructions used by Linzen and Gallagher (2017) were translated with minor variations into German and Mandarin Chinese and can be found in Appendix A. After the experiment, participants were asked to fill out a short demographic background sheet asking for age, gender, and language history.

Participants were recruited via social media platforms and mailing lists. They provided informed consent and were offered the chance to enter a raffle to win a gift voucher. Two hundred and thirty-two adult native speakers of German took part in the German experiment. Their mean age was 31.5 years, ranging from 18 to 70. One hundred and thirty-six were women, 50 were men, and two identified as other. Forty-four participants did not provide information about their gender. In the Mandarin version of the experiment, 219 native speakers of Mandarin took part, with a mean age of 21.5 years, ranging from 15 to 55. Ninety-seven were women, 95 were men, and 27 did not provide gender information.

2.3. Variables under investigation

The language-dependent variables were computed with respect to both the L1 and the AL. For the nonwords in each version of the experiment, the L1 variable was computed over the full L1 lexicon (German or Mandarin), and the AL variable was computed over the corresponding artificial language to which the participants were exposed. Thus, the AL variables were computed over the individual artificial exposure lexicon, which was much smaller than the L1 lexicon.

To better estimate the L1 lexical knowledge of our participants, we chose frequency lists compiled from subtitle texts, namely SUBTLEX-DE (Brysbaert et al., 2011) and SUBTLEX-CH (Cai & Brysbaert, 2010) for German and Mandarin respectively. This was motivated by the fact that lexical frequencies derived from subtitle texts have consistently been shown to outperform those from other genres in capturing behavioural responses in psycholinguistic tasks across languages (Brysbaert & New, 2009; de Chene, 2014; Keuleers, Brysbaert, & New, 2010; Tang, 2012; Tang & de Chene, 2014; Tang & Shaw, 2021). These reference lexicons were then enriched with IPA transcriptions. The German lexicon was created by combining the lexical entries in the German section of CELEX (Baayen, Piepenbrock, & Gulikers, 1995) with SUBTLEX-DE’s frequency estimates. The Mandarin lexicon was created by transcribing the lexical entries in SUBTLEX-CH with CEDICT (Denisowski, 1997) and the pinyin pronunciation guide outlined in Duanmu (2007).8 Suprasegmental information was removed for German but not for Mandarin because German stress has a low lexical functional load (Surendran & Niyogi, 2003; Tang, Chang, et al., 2022) and is predictable (Féry, 1998) compared to Mandarin tones. ALL studies have consistently found that learners are sensitive to phonological features in the training stimuli; therefore, we computed our wordlikeness variables on the featural level as opposed to the segmental level (Durvasula & Liter, 2020; Finley, 2022; Linzen & Gallagher, 2017). In addition, we investigated a number of language-independent factors such as trial number, response time, and the original finding of the acceptability rate of identical patterns (Linzen & Gallagher, 2017). We controlled for whether C_C_ frames were attested or unattested during training.

2.3.1. Language-dependent variables

Three language-dependent predictors were considered and are described in detail in the next paragraphs. As measures of wordlikeness, we used activation diversity and neighbourhood density, two variables that are relatively uncorrelated (Milin, Feldman, Ramscar, Hendrix, & Baayen, 2017). Third, we considered consonant frame probability.

Activation diversity Assessing the activation diversity of the nonword test stimuli is one way to account for the wordlikeness of our stimuli. In this way we are able to control for how similar the stimuli are to the words of the speaker's native lexicon and for how similar they are to the nonwords of the speaker's artificial training lexicon. The following paragraphs describe how the measure was computed. Activation diversity is a measure resulting from a Naive Discriminative Learning (NDL) model. NDL incorporates the Rescorla-Wagner learning rule (Rescorla & Wagner, 1972).

In this framework the learning process consists of cues (the set of input units) and outcomes (the set of output units). What counts as a cue and what counts as an outcome is specified by the particular learning scenario. Cues and outcomes can either be present or be absent. The learning rule captures the change in association strength between cues and outcomes. The presence and absence of cues and outcomes determine the strength of associations in the network and, as a consequence, the learnability of the outcomes. If a cue is not present in a learning event, then no change in association strength is made. If a cue and an outcome are both present, then the association strength increases. If a cue is present but an outcome is not present, then the association strength decreases. If a cue or an outcome has not been encountered before, then the association strength does not change. Put simply, the strength of association between a cue and an outcome depends on whether the cue is predictive of the outcome. Cues compete for predictive value based on whether a cue successfully predicts an outcome or not. Please see Appendix B for the mathematical details of the Rescorla-Wagner equations (Rescorla & Wagner, 1972).
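A single learning event under the Rescorla-Wagner rule can be sketched as below. This is a minimal illustration of the standard form of the rule, not the equations of Appendix B: the function name, the dictionary representation of the weights, the single learning-rate parameter (which collapses the cue- and outcome-specific rates of the original rule), and the default parameter values are all assumptions.

```python
def rw_update(weights, cues, outcomes, all_outcomes, rate=0.01, lam=1.0):
    """Apply one Rescorla-Wagner learning event in place.

    weights:      dict mapping (cue, outcome) -> association strength
    cues:         set of cues present in this event
    outcomes:     set of outcomes present in this event
    all_outcomes: every outcome known to the model
    """
    for outcome in all_outcomes:
        # Summed prediction of the outcome from all cues present in the event
        total = sum(weights.get((cue, outcome), 0.0) for cue in cues)
        # Target is lam if the outcome occurred, 0 otherwise
        target = lam if outcome in outcomes else 0.0
        delta = rate * (target - total)
        # Only cues that are present are updated; absent cues are left unchanged
        for cue in cues:
            weights[(cue, outcome)] = weights.get((cue, outcome), 0.0) + delta
    return weights

weights = {}
rw_update(weights, {"a", "b"}, {"X"}, ["X", "Y"], rate=0.1)  # cues a, b occur with X
rw_update(weights, {"a"}, {"Y"}, ["X", "Y"], rate=0.1)       # cue a occurs with Y
print(weights[("a", "X")], weights[("b", "X")], weights[("a", "Y")])
```

After the second event, the association from cue a to outcome X has weakened because a occurred without X, whereas the association from the absent cue b to X is unchanged.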

The Rescorla-Wagner learning rule has been shown to provide a psychologically plausible model of human learning in a number of lexical and phonological processing tasks. In lexical decision tasks, NDL-derived variables have been found to outperform classical lexical-distributional measures (Milin et al., 2017). NDL was able to model morphological variation in Russian and to provide information complementary to other modelling approaches (logistic regression, decision trees, and random forests) (Baayen, Endresen, Janda, Makarova, & Nesset, 2013). Crucially, it has also been applied in ALL studies to understand the mechanisms underlying language learning (Vujović, Ramscar, & Wonnacott, 2021). Recent studies have shown that latent variables derived from a cue-to-outcome matrix can capture behavioural responses in a number of psycholinguistic tasks, such as lexical decision and word naming, about as well as a number of classic psycholinguistic variables, such as token frequency, family size, and phonotactic probability.9

In lexical processing, an NDL model is typically trained with certain types of sublexical cues (e.g., bigrams of letters or acoustic characteristics) as input units and words as outcomes (output units) (see Milin et al., 2017; Nixon, 2020). It is designed to discriminate between words on the basis of sublexical cues. The model results in a cue-to-outcome matrix in which each cell contains the weight with which a specific cue activates a specific outcome. As the model is exposed to each word and its corresponding cues, the activation weights are updated accordingly. In this way, the NDL model represents the mental lexicon, which is dynamic and flexible. The matrix then offers the possibility of checking the wordlikeness of new bigram combinations. Thus, activation values can be derived, stored as L1 and AL activation diversity measures, and used as additional predictors in our final model.

Thus, the latent variable that is of our particular interest is the activation diversity given a set of cues. In the cue-to-outcome matrix, each cue is a vector of the same length as the total number of outcomes (nonwords for the AL exposure lexicon, and words for the L1 lexicon).

Unlike previous studies such as Milin et al. (2017), the cues of our NDL models were not computed over bigram letters but rather over bigram phonological features. The feature specification of each phone was taken from the PHOIBLE feature chart (Moran, McCloy, & Wright, 2014). Following the framework of autosegmental phonology (Goldsmith, 1976), the bigrams were computed separately over segment tiers and tonal tiers. While segments were broken down into features, tones were not, and the tone values were used to compute tonal bigrams directly. Concretely, this means that in our assessment, we used featural bigram representations such as a bigram of features [+syllabic] followed by [-syllabic] as cues to check against the outcomes.

For each test nonword we assessed the activation diversity for L1 and AL. The ndl2 library (version 0.1.0.9002) was used to implement our NDL models (Baayen, Milin, Đurđević, Hendrix, & Marelli, 2011; Shaoul et al., 2015). With respect to the L1, we first trained an NDL model using an L1 lexicon to obtain a cue-to-outcome matrix, where the cues were featural bigrams and the outcomes were real words of German or of Mandarin. To then obtain the L1 activation diversity value for each nonword stimulus in the testing phase, we converted each nonword into a featural bigram representation and looked up the activation values that correspond to each featural bigram cue using the cue-to-outcome matrix.10 The sum of the absolute values of the activation values for all the cues of a nonword is its activation diversity value. With respect to the AL activation diversity, the only difference is the lexicon on which the NDL model was first trained. Instead of a German/Mandarin lexicon, an NDL model was trained using the individual miniature AL lexicon, which contains only the exposure items of the respective participant. Thus, for each test nonword we computed an L1 activation diversity value and an AL activation diversity value. In this way, we interpret activation diversity as a measure of the wordlikeness of a nonword. A test nonword that is very wordlike with respect to the reference lexicon (L1 or AL) would have cues with high activation values in the cue-to-outcome matrix and thus would have a high (L1 or AL) activation diversity value.

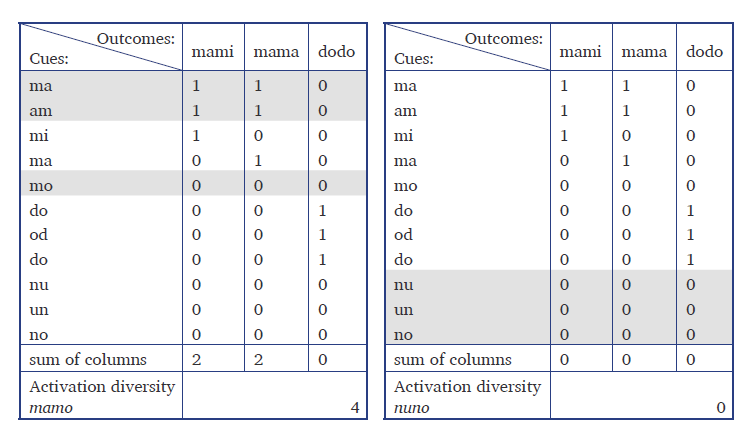

To illustrate how activation diversity values are obtained with NDL, a miniature example is provided in Table 3. Table 3 shows how the L1 activation diversity values for the two test nonwords mamo and nuno were computed for a miniature German lexicon consisting of the three words mami (engl. mummy), mama (engl. mum) and dodo (engl. dodo). The outcomes are the three words, and the cues in our example are segment bigrams.11 The hypothetical test nonwords mamo and nuno are broken down into cues. To evaluate the activation level of each test nonword, we computed the sum of the weights in the vectors for each outcome.12 Based on the present model, mamo receives a higher activation diversity than nuno.
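The miniature example can also be sketched in code. The sketch below is not the ndl2 implementation used in the study: it uses a plain Rescorla-Wagner update with segment bigrams as cues, and it assumes word-boundary markers, equal exposure to each word, and arbitrary values for the learning rate and the number of training passes. Activation diversity is computed here under one reading of the definition, namely as the sum of the absolute activations over all outcomes, so the resulting numbers will not match those of Table 3.

```python
def bigram_cues(word):
    """Segment bigrams with boundary markers, e.g. 'mami' -> {'#m', 'ma', 'am', 'mi', 'i#'}."""
    padded = "#" + word + "#"
    return {padded[i:i + 2] for i in range(len(padded) - 1)}

def train(lexicon, epochs=100, rate=0.01, lam=1.0):
    """Train cue-to-outcome weights with the Rescorla-Wagner rule (each word seen once per epoch)."""
    weights = {}
    for _ in range(epochs):
        for word in lexicon:
            cues = bigram_cues(word)
            for outcome in lexicon:
                total = sum(weights.get((c, outcome), 0.0) for c in cues)
                target = lam if outcome == word else 0.0
                delta = rate * (target - total)
                for c in cues:
                    weights[(c, outcome)] = weights.get((c, outcome), 0.0) + delta
    return weights

def activation_diversity(nonword, lexicon, weights):
    """Sum of absolute activations of all outcomes given the cues of the nonword."""
    cues = bigram_cues(nonword)
    activations = [sum(weights.get((c, o), 0.0) for c in cues) for o in lexicon]
    return sum(abs(a) for a in activations)

lexicon = ["mami", "mama", "dodo"]
weights = train(lexicon)
mamo = activation_diversity("mamo", lexicon, weights)
nuno = activation_diversity("nuno", lexicon, weights)
print(mamo > nuno)
```

Under these assumptions mamo shares several cues with mami and mama, whereas nuno shares only the final bigram with dodo, which is why mamo ends up with the higher value.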

Fixed effects summary for Study I (German, attested). β: Coefficient; SE: Standard error; z: z-value; CILower95% and CIUpper95%: 95% confidence intervals of the coefficient from bootstrapping; pBootstrapped: p-value from bootstrapping simulations.

| | Predictor | β | SE | z | CILower95% | CIUpper95% | pBootstrapped |
|---|---|---|---|---|---|---|---|
| | (Intercept) | 1.4258 | 0.1223 | 11.6624 | 1.2098 | 1.6729 | <.001*** |
| L1 | Activation diversity | −0.1969 | 0.0893 | −2.2044 | −0.3684 | −0.0288 | .016* |
| | Neighbourhood density | −0.2778 | 0.0935 | −2.9708 | −0.4641 | −0.0985 | .004** |
| | Consonant frame prob. | −0.1483 | 0.0812 | −1.8247 | −0.3099 | 0.0017 | .072∙ |
| AL | Activation diversity | – | – | – | – | – | n.s. |
| | Neighbourhood density | 0.4568 | 0.1258 | 3.6303 | 0.2162 | 0.7012 | <.001*** |
| Language independent | Identity (Identical vs. Non-identical) | 0.6749 | 0.1553 | 4.3445 | 0.3847 | 0.9761 | <.001*** |
| | Trial number | −0.5205 | 0.0717 | −7.2538 | −0.6644 | −0.3882 | <.001*** |
| | Response time | −0.6544 | 0.0940 | −6.9574 | −0.8385 | −0.4771 | <.001*** |

Number of observations: 1856; number of participants: 232; number of items: 128.

Level of significance: ∙ (p ≤ 0.1), * (p ≤ 0.05), ** (p ≤ 0.01), *** (p ≤ 0.001).

Neighbourhood density While activation diversity has been shown to capture the same amount of variance as a number of psycholinguistic variables in psycholinguistic tasks, neighbourhood density was found to be the variable least correlated with activation diversity (Milin et al., 2017, Table 5). Neighbourhood density is a classic lexical variable that reflects analogical learning (Nosofsky, 1986). It is known to affect the production, the perception, and the acceptability judgement of nonwords. Compared with nonwords with a low neighbourhood density, nonwords with a high neighbourhood density are repeated more accurately in nonword repetition (Gathercole, 1995; Munson, Kurtz, & Windsor, 2005), take longer to reject in auditory lexical decision (Chuang et al., 2019; Luce & Pisoni, 1998), and are more acceptable as real words in acceptability judgement (Bailey & Hahn, 2001; Harris, Neasom, & Tang, In prep). Similarly, in first and second language acquisition, words with a high neighbourhood density are acquired better than those with a low neighbourhood density (Coady & Aslin, 2003; Storkel, Armbrüster, & Hogan, 2006). Neighbourhood density is thus a measure of the wordlikeness of a nonword. We therefore hypothesise that the neighbourhood density of a nonword could influence whether it is accepted as a word of the artificial language.

Fixed effects summary for Study I (German, unattested). β: Coefficient; SE: Standard error; z: z-value; CILower95% and CIUpper95%: 95% confidence intervals of the coefficient from bootstrapping; pBootstrapped: p-value from bootstrapping simulations.

| | Predictor | β | SE | z | CILower95% | CIUpper95% | pBootstrapped |
|---|---|---|---|---|---|---|---|
| | (Intercept) | 1.3606 | 0.1156 | 11.7709 | 1.1423 | 1.6034 | <.001*** |
| L1 | Activation diversity | −0.3528 | 0.0971 | −3.6329 | −0.5431 | −0.1692 | <.001*** |
| | Neighbourhood density | −0.3232 | 0.0964 | −3.3517 | −0.5123 | −0.1362 | <.001*** |
| | Consonant frame prob. | −0.2944 | 0.0884 | −3.3306 | −0.4729 | −0.1246 | <.001*** |
| AL | Activation diversity | – | – | – | – | – | n.s. |
| | Neighbourhood density | 0.2246 | 0.0938 | 2.3939 | 0.0363 | 0.4128 | .012* |
| Language independent | Identity (Identical vs. Non-identical) | 0.5464 | 0.1635 | 3.3416 | 0.2338 | 0.8734 | <.001*** |
| | Trial number | −0.4082 | 0.0660 | −6.1819 | −0.5425 | −0.2804 | <.001*** |
| | Response time | −0.4951 | 0.0808 | −6.1263 | −0.6581 | −0.3474 | <.001*** |

Number of observations: 1856; number of participants: 232; number of items: 128.

Level of significance: ∙ (p ≤ 0.1), * (p ≤ 0.05), ** (p ≤ 0.01), *** (p ≤ 0.001).

The Generalized Neighborhood Model (GNM; Bailey & Hahn, 2001) was used to estimate the neighbourhood density of nonwords. GNM compares each nonword with all the words in the lexicon, weighting each word by its phonological distance from the nonword and by its token frequency.13 The advantage of GNM over a strict one-segment-difference neighbourhood density metric is that it takes into account the whole lexicon (not just words that are immediate neighbours) while adjusting a neighbour’s importance by how distant it is from the nonword (the more distant it is, the less important it is) and by how frequent it is (the lower the frequency, the lower the importance). The gradient nature of GNM is particularly advantageous for computing over small lexicons (e.g., the AL lexicons), as otherwise many nonwords would have no immediate neighbours at all. To determine the phonological distance between the nonwords and the real words, we first computed the phonetic similarities between all phones by taking the proportion of matched features over all features, using the phonological distinctive feature system of PHOIBLE (Moran et al., 2014).14 We then used the corresponding phonetic distances as weights to compute the weighted Levenshtein distance (Levenshtein, 1966) between two words.

For instance, when computing the neighbourhood density of the nonword mamo over a German lexicon, mami (engl.: mummy), which is a frequent word of German, will contribute more than dodo (engl.: dodo), which is an infrequent word of German. The nonword mamo is rewarded with a relatively high neighbourhood density value because it is phonologically closer to the frequent real word mami than to the infrequent real word dodo. In other words, the nonword mamo receives strong neighbourhood support by being phonologically close to the highly frequent real word mami.
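The logic of this example can be sketched as follows. The sketch is a simplified stand-in and not the published GNM formula: each word's contribution is taken to be its log frequency multiplied by an exponentially decaying function of its weighted Levenshtein distance from the nonword. The feature values are toy values rather than the PHOIBLE specifications, the frequencies are invented, and the insertion/deletion cost of 1 and the sensitivity parameter are assumptions.

```python
import math

# Toy binary features (syllabic, nasal, voiced, high, round); NOT the PHOIBLE values
FEATURES = {
    "m": (0, 1, 1, 0, 0),
    "d": (0, 0, 1, 0, 0),
    "a": (1, 0, 1, 0, 0),
    "i": (1, 0, 1, 1, 0),
    "o": (1, 0, 1, 0, 1),
}

def phone_distance(p, q):
    """1 minus the proportion of matched features between two phones."""
    fp, fq = FEATURES[p], FEATURES[q]
    matched = sum(1 for a, b in zip(fp, fq) if a == b)
    return 1.0 - matched / len(fp)

def weighted_levenshtein(s, t, indel_cost=1.0):
    """Levenshtein distance with feature-based substitution costs."""
    rows, cols = len(s) + 1, len(t) + 1
    dist = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows):
        dist[i][0] = i * indel_cost
    for j in range(1, cols):
        dist[0][j] = j * indel_cost
    for i in range(1, rows):
        for j in range(1, cols):
            dist[i][j] = min(
                dist[i - 1][j] + indel_cost,                               # deletion
                dist[i][j - 1] + indel_cost,                               # insertion
                dist[i - 1][j - 1] + phone_distance(s[i - 1], t[j - 1]),   # substitution
            )
    return dist[-1][-1]

def neighbour_support(nonword, word, frequency, sensitivity=1.0):
    """Simplified contribution of one lexicon word: frequency weight x distance decay."""
    distance = weighted_levenshtein(nonword, word)
    return math.log10(frequency + 1) * math.exp(-sensitivity * distance)

LEXICON = {"mami": 500, "mama": 300, "dodo": 5}  # hypothetical token frequencies
density = sum(neighbour_support("mamo", w, f) for w, f in LEXICON.items())
print(neighbour_support("mamo", "mami", 500) > neighbour_support("mamo", "dodo", 5))
```

Because every word in the lexicon contributes a graded amount, the measure remains informative even for very small lexicons in which few nonwords have a one-segment neighbour.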

Consonant frame probability The experimental paradigm set out to test whether a consonant identity pattern is preferred over a non-identity pattern, using lexical items of either the CaVCaV or the CaVCbV pattern. This could direct the participants’ attention to the consonant frame C_C_ and therefore enhance the potential effect of lexical statistics over the consonant frames. For this reason, we included the probability of C_C_ as an additional lexical variable for examination. The probability of C_C_ is the number of word types with a given frame C_C_ divided by the number of all CVCV word types.15 Type frequency was chosen over token frequency to minimise the potential overlap with the activation diversity variable, which was trained on token-based phonotactic information. Unlike activation diversity and neighbourhood density, this variable was computed only with respect to the L1 lexicons (Brysbaert et al., 2011; Cai & Brysbaert, 2010) because the number of items with the same consonant frame was matched across frame types in the AL lexicons. Thus, consonant frame probability does not vary within the AL lexicons.
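The type-based frame probability defined above amounts to a simple ratio, sketched below. The function name, the tuple representation of CVCV word types, and the toy lexicon are hypothetical and serve only to illustrate the computation.

```python
def consonant_frame_probability(frame, cvcv_types):
    """Number of CVCV word types with the given (C1, C2) frame
    divided by the number of all CVCV word types."""
    types = set(cvcv_types)  # count each word type once
    if not types:
        raise ValueError("the lexicon contains no CVCV word types")
    matches = sum(1 for c1, _, c2, _ in types if (c1, c2) == frame)
    return matches / len(types)

# Toy lexicon of CVCV word types as (C1, V1, C2, V2) tuples
toy_lexicon = [
    ("m", "a", "m", "i"),
    ("m", "a", "m", "a"),
    ("d", "o", "d", "o"),
    ("n", "a", "m", "e"),
]
print(consonant_frame_probability(("m", "m"), toy_lexicon))  # 0.5
```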

2.3.2. Language-independent variables

Three language-independent predictors were considered: Trial number, response time, and identity.

Trial Number Trial number is the position of the trial within a testing session. It was included to evaluate the effect of the recency of exposure to the artificial language, as recently encountered grammatical structures are known to be preferred (Luka & Barsalou, 2005).

We hypothesised that the participants would be more likely to accept a nonword as a word of the artificial language at the beginning of the testing session (a low trial number) than at the end of the session (a high trial number). In other words, as the experiment progresses (from a low trial number to a high trial number), the probability of a Yes response, which denotes accepting a nonword as a word of the artificial language, should decrease.

Response Time Response time in this study is the time it takes to respond to each nonword. In many behavioural tasks response time is included as a control for the well-known trade-off between speed and accuracy (e.g., Davidson & Martin, 2013; Heitz, 2014). This is not how response time should be interpreted in the present study, because there is no correct or incorrect answer to whether a given nonword belongs to the artificial language to which the participants were exposed. We hypothesise that the longer the response time, the lower the probability of a Yes response, which denotes accepting a nonword as a word of the artificial language.

Identity In the original study by Linzen and Gallagher (2017) on English, learners were shown to accept more nonwords with an identical consonant pattern Ca_Ca_ than nonwords with a non-identical consonant pattern Ca_Cb_ as words of the artificial language. Their learners were exposed to an artificial language with an overrepresentation of identical consonant frames relative to chance, and as a consequence learners exhibited a preference for identical consonant frames over non-identical consonant frames, which demonstrated learning of the phonotactic pattern. We expect learning of this identity pattern as well. Furthermore, a successful replication of this effect in our experiments would serve as indirect evidence that our experiments are successful replications of the original study in two new languages, thus lending validity to the other findings from our study.

As in many ALL studies, the learning effect reported by Linzen and Gallagher (2017), the identity effect, was tested for its generalisability. This was done by evaluating whether the identity effect holds in both familiar items (nonwords with consonant frames attested in the exposure phase) and unfamiliar items (nonwords with consonant frames not attested in the exposure phase). The evaluation of the identity effect in the unattested items is crucial since it enabled the researchers to investigate not only memory but also the generalisability of the observed patterns. Linzen and Gallagher (2017) found that the identity effect was robust across both attested and unattested items and that there was no significant difference between the two sets of items. The generalisability of the observed identity effect relies on the assumption that the unattested items do not resemble the exposure items in the consonant frame type; therefore, the observed identity effect is not due to the participant’s experience of the exposure items. However, our AL variables and the time course variables in fact challenge this rigid assumption in two ways. First, while the unattested items did not overlap with the exposure items in their consonant frames at the segmental level, the same cannot be said at the level of individual features, both for the consonant frames and indeed for the vowels. Second, the time course variables evaluate the potential recency effect that the participants experience with respect to the exposure items. Therefore, we aim to evaluate the identity effect jointly with the AL and the time course variables. This allows us to evaluate the role of the exposure items in the processing of the unattested nonwords and to see whether the effect of identity remains significant.

2.4. Model procedure

Mixed-effects logistic regression models were fitted to the responses using the lme4 package in R (Bates, Mächler, Bolker, & Walker, 2015; R Core Team, 2013). For each of the experiments, two models were fitted, one over the nonwords with attested C_C_ frames and one over the nonwords with unattested C_C_ frames. The primary focus of our study is to evaluate whether or not lexical statistics such as the ones we outlined in Section 2.3 have an effect on the behavioural responses in an ALL experiment. To evaluate the effect of lexical statistics, four models were fitted: over the German group’s attested nonwords (Model 1) and unattested nonwords (Model 2), and over the Mandarin group’s attested nonwords (Model 3) and unattested nonwords (Model 4), each predicting either a Yes or a No (base-level) response. All four models were fitted with the predictor variables outlined in Section 2.3 as fixed effects and with per-speaker and per-item random intercepts to allow for idiosyncrasies of individual speakers and items, as is typical of psycholinguistic research.

The regression structure of the initial models is shown below. Note that the language-dependent predictors have either ‘L1’ or ‘AL’ in parentheses, referring to their respective languages: L1 refers to either German or Mandarin, and AL refers to the artificial language.

Response (Yes/No) ∼ Identity + Activation diversity (L1) + Neighbourhood density (L1) + Activation diversity (AL) + Neighbourhood density (AL) + Consonant frame probability (L1) + Trial number + Response time + (1 | Participant) + (1 | Item)

Following standard practice in regression modelling, the continuous variables were z-score normalised (e.g., Baayen, 2008, Sec. 2.2). Z-score normalisation allows us to compare the relative strength of our continuous predictors directly. The distributions of activation diversity and response time were skewed; therefore, they were log-transformed (base 10) before z-score normalisation. Neighbourhood density was already on a log scale. Our categorical predictor Identity was sum-coded (Wissmann, Toutenburg, & Shalabh, 2007) with non-identical as the base level. The dependent variable, which is the participants’ response (Yes/No), was coded with ‘No’ as the base level.
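The transformation applied to the skewed predictors can be sketched as below. The function name and the example response times are hypothetical, and the use of the sample standard deviation (as in R's scale function) is an assumption about the original computation.

```python
import math
import statistics

def log_zscore(values):
    """Log-transform (base 10) and then z-score normalise a list of positive values."""
    logged = [math.log10(v) for v in values]
    mean = statistics.mean(logged)
    sd = statistics.stdev(logged)  # sample standard deviation (n - 1 denominator)
    return [(x - mean) / sd for x in logged]

# Hypothetical, strongly skewed response times in milliseconds
response_times = [100, 1000, 10000]
print(log_zscore(response_times))
```

After this transformation each continuous predictor has a mean of zero and a standard deviation of one, so that coefficients can be compared across predictors.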

To evaluate potential collinearity issues, we computed the condition number (Belsley, Kuh, & Welsch, 1980), using the function collin.fnc in the library languageR (Baayen, 2013). Our variables have a condition number of 2.09 and 3.12 in Study I (German) and Study II (Mandarin) respectively. According to Baayen (2008), these values indicate no issues of collinearity.

These initial models were then simplified following a step-down, data-driven model selection procedure which compared nested models using the backward best-path algorithm of Gorman and Johnson (2013), making use of the anova() function and the likelihood ratio test provided by R. After the step-down procedure, by-participant random slopes for the fixed effects retained in the optimal model were fitted to ensure that our estimates of the effects of these factors would be relatively conservative. The random slopes were kept only if they were justified by the data and if there were no singularity or non-convergence issues. It has been suggested that the likelihood ratio test can be anti-conservative (Luke, 2017) when used as a measure of statistical significance. We therefore used the likelihood ratio test only for model selection, with a relatively liberal threshold of α = 0.1, so that potentially relevant predictors were retained in the final model; their statistical significance was then evaluated using bootstrapping. The final models are presented in the next section.
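A single comparison of two nested models by likelihood ratio test can be sketched as follows. The sketch covers only the case in which the models differ by one parameter (one degree of freedom), for which the chi-square tail probability has a closed form; it does not implement the backward best-path algorithm itself, and the function names and log-likelihood values are hypothetical.

```python
import math

def lrt_p_value_df1(loglik_full, loglik_reduced):
    """p-value of a likelihood ratio test for nested models differing by one parameter.

    For one degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    """
    statistic = max(2.0 * (loglik_full - loglik_reduced), 0.0)
    return math.erfc(math.sqrt(statistic / 2.0))

def keep_predictor(loglik_full, loglik_reduced, alpha=0.1):
    """Retain the predictor if dropping it significantly worsens the fit at the given alpha."""
    return lrt_p_value_df1(loglik_full, loglik_reduced) < alpha

# Hypothetical log-likelihoods: the statistic is 2 * (-100 - (-101.5)) = 3
print(keep_predictor(-100.0, -101.5, alpha=0.1))   # retained under the liberal threshold
print(keep_predictor(-100.0, -101.5, alpha=0.05))  # would be dropped at the conventional threshold
```

The example shows the practical consequence of the liberal threshold: a predictor with a likelihood ratio p-value between 0.05 and 0.1 stays in the model and is evaluated later by bootstrapping.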

The statistical significance of the individual predictors in all the models was evaluated by bootstrapping. Bootstrapping was carried out using the bootMer function in the lme4 library. One thousand bootstrap simulations were performed for each model. Bootstrapped p-values and 95% confidence intervals were computed for each predictor in each model. We follow the conventional alpha level of 0.05 for significance. Therefore, we will refer to any p-value below 0.05 as “significant” and any p-value greater than 0.05 but smaller than 0.1 as “near-significant”.
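How a set of bootstrap estimates of one coefficient might be summarised is sketched below. The text does not specify how the bootstrapped confidence intervals and p-values were derived from the simulations, so the percentile interval and the two-sided tail proportion used here are assumptions, as are the function names and the artificial estimates.

```python
import math

def percentile_ci(estimates, level=0.95):
    """Percentile confidence interval from a list of bootstrap estimates."""
    ordered = sorted(estimates)
    n = len(ordered)
    tail = (1.0 - level) / 2.0
    lower = ordered[int(math.floor(tail * (n - 1)))]
    upper = ordered[int(math.ceil((1.0 - tail) * (n - 1)))]
    return lower, upper

def two_sided_p(estimates):
    """Twice the smaller proportion of estimates falling on either side of zero."""
    n = len(estimates)
    at_or_below = sum(1 for e in estimates if e <= 0) / n
    at_or_above = sum(1 for e in estimates if e >= 0) / n
    return min(1.0, 2.0 * min(at_or_below, at_or_above))

# Artificial example: 1,000 evenly spaced "bootstrap estimates" of one coefficient
estimates = [i / 100 for i in range(-10, 990)]
print(percentile_ci(estimates))
print(two_sided_p(estimates))
```

Under this scheme a predictor counts as significant when few of the bootstrap estimates have the opposite sign from the rest, which corresponds to a confidence interval that excludes zero.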

In the following sections, we will first present the results of the planned analyses of Study I (German) and of Study II (Mandarin). In addition to these planned analyses, we will present the results of two exploratory analyses on the effect of attestedness and its interactions, motivated by the results from Study I and Study II. Readers should note that these models are exploratory and their results should be interpreted with caution. This is because a) the effect of attestedness is not the focus of our study, b) we have no a priori reasons to examine the interactions of our many fixed effects and their random slopes, and c) we wanted to avoid making our models too complex to interpret and to avoid overfitting the small data set that we have (approx. 1,000 data points per model).

3. Results

3.1. Study I: German

3.1.1. Study I: German: Attested

Table 4 summarises the fixed effects in Model 1, which is fitted over the German attested nonwords. The model structure of the best model is shown below.

Response (Yes/No) ∼ Identity + Activation diversity (L1) + Neighbourhood density (L1) + Neighbourhood density (AL) + Consonant frame probability (L1) + Trial number + Response time + (1 + Response time | Participant) + (1 | Item)

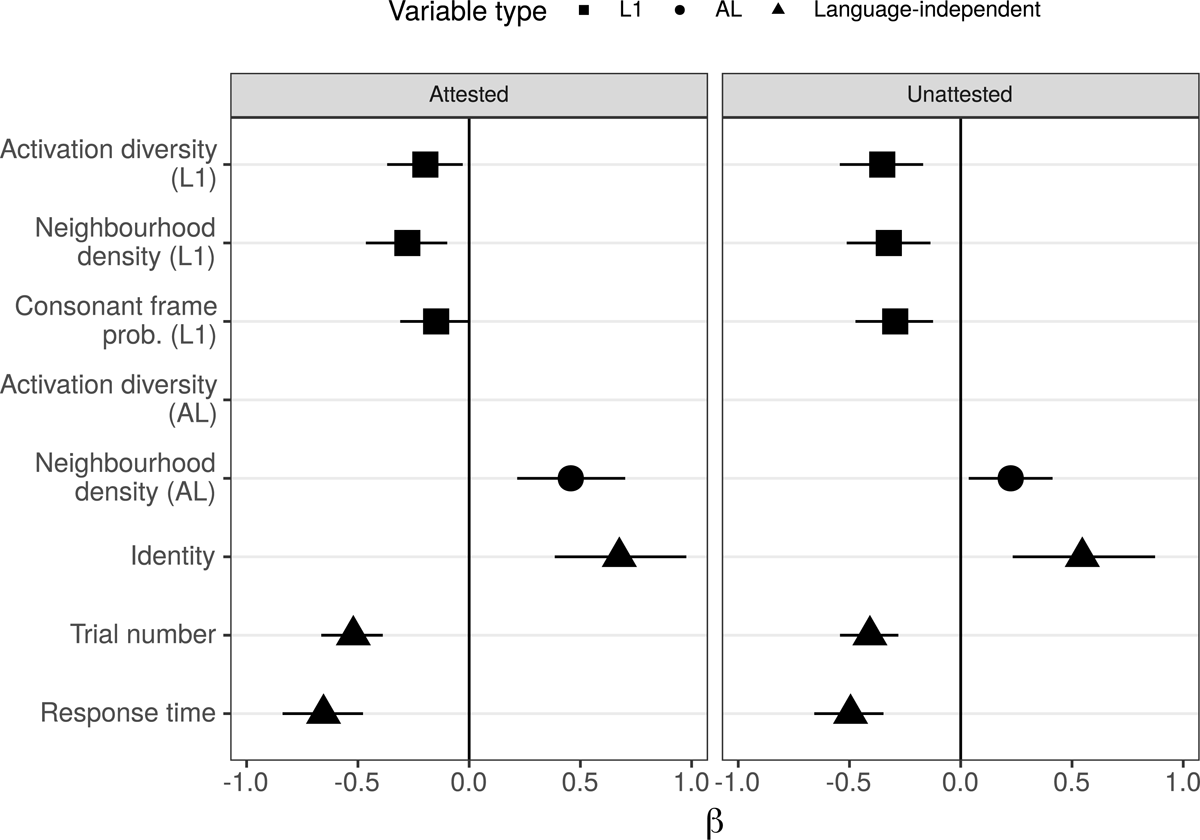

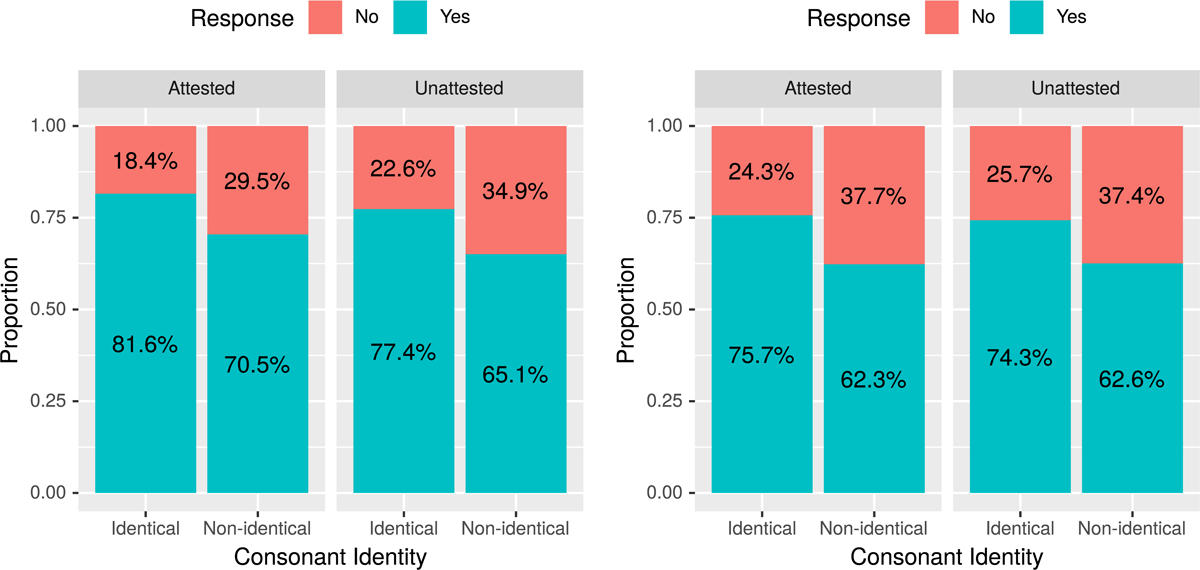

We first examine the language-independent variables. All three of them, identity, trial number, and response time, were highly significant in the expected directions. The effect of identity was in the same direction as in the original study for English, such that nonwords with identical consonants were more likely to be accepted. The effects of trial number and response time suggest a recency effect: the higher the trial number and the longer the response time, the lower the likelihood of acceptance. In other words, the more recently a nonword was processed, both across and within trials, the more likely it was to be accepted.

Having examined the language-independent variables, we move on to the three L1 variables and two AL variables. Two of the L1 variables were significant: activation diversity and neighbourhood density. The third L1 variable, consonant frame probability, was near significant. All three L1 variables have a negative coefficient, suggesting that the more L1-like the nonword (i.e., the higher the activation diversity, the higher the neighbourhood density, and the higher the consonant frame probability), the lower the likelihood of acceptance. Only one of the two AL variables was significant: neighbourhood density. Activation diversity (AL) was dropped during model selection and is therefore reported as not significant. Neighbourhood density (AL) has a positive coefficient, suggesting that the more AL-like the nonword (i.e., the higher the neighbourhood density), the higher the likelihood of acceptance.

Finally, we compare the effect sizes of the L1 and AL variables. Neighbourhood density (AL) has a stronger effect than its L1 counterpart as well as the other two L1 variables, activation diversity and consonant frame probability. In sum, the influence of the AL lexicon is stronger than that of the L1 lexicon with the attested items. Random effects in Model 1 are summarised in Table 14 of the appendix.

3.1.2. Study I: German: Unattested

Table 5 summarises the fixed effects in Model 2, which is fitted over the German unattested nonwords. The model structure of the best model turned out to be the same as in Model 1 (see Section 3.1.1).

We first examine the language-independent variables. As in Model 1, identity, trial number, and response time were highly significant in the expected directions. Identical consonant frames are accepted more than non-identical consonant frames; the higher the trial number, the lower the likelihood of acceptance; and the longer the response time, the lower the likelihood of acceptance. The effects of identity and recency were therefore found in both attested and unattested nonwords.

We now examine the three L1 variables and two AL variables. All three of the L1 variables were highly significant in the same direction as in Model 1: activation diversity, neighbourhood density, and consonant frame probability. As in Model 1, the more L1-like the nonword (i.e., the higher the activation diversity, the higher the neighbourhood density, and the higher the consonant frame probability), the lower the likelihood of acceptance. Also as in Model 1, only neighbourhood density (AL) was significant, with a positive coefficient, while activation diversity (AL) was dropped during model selection.

Again we compare the effect sizes of the L1 and AL variables. Contrary to Model 1, neighbourhood density (AL) has a weaker effect than its L1 counterpart as well as the other two L1 variables, activation diversity and consonant frame probability. In sum, the influence of the AL lexicon is weaker than that of the L1 lexicon with the unattested items. Random effects in Model 2 are summarised in Table 15 of the appendix.

Figure 2 summarises the effect sizes of the significant variables in Model 1 and Model 2. It illustrates that the direction of the significant effects is consistent across the two attestedness conditions. L1 variables have a negative effect, while the AL variable has a positive effect. Identical consonant frames are more likely to be accepted. Nonwords that were responded to earlier, both across trials and within a trial, are more likely to be accepted.

3.2. Study II: Mandarin

3.2.1. Study II: Mandarin: Attested

Table 6 summarises the fixed effects in Model 3, which is fitted over the Mandarin attested nonwords. The model structure of the best model is shown below.

Fixed effects summary for Study II (Mandarin, attested). β: Coefficient; SE: Standard error; z: z-value; CILower95% and CIUpper95%: 95% confidence intervals of the coefficient from bootstrapping; pBootstrapped: p-value from bootstrapping simulations.

| | | β | SE | z | CILower95% | CIUpper95% | pBootstrapped |
|---|---|---|---|---|---|---|---|
| | (Intercept) | 0.9209 | 0.0948 | 9.7063 | 0.7462 | 1.1172 | <.001*** |
| L1 | Activation diversity | – | – | – | – | – | n.s. |
| | Neighbourhood density | –0.2405 | 0.0793 | –3.0309 | –0.4025 | –0.0867 | .002** |
| | Consonant frame prob. | – | – | – | – | – | n.s. |
| AL | Activation diversity | – | – | – | – | – | n.s. |
| | Neighbourhood density | 0.3226 | 0.1006 | 3.2079 | 0.1118 | 0.5267 | .002** |
| Language independent | Identity (Identical vs. Non-identical) | 0.7173 | 0.1349 | 5.3176 | 0.4647 | 0.9859 | <.001*** |
| | Trial number | –0.2774 | 0.0609 | –4.5531 | –0.3980 | –0.1581 | <.001*** |
| | Response time | –0.3651 | 0.0759 | –4.8140 | –0.5146 | –0.2266 | <.001*** |

Number of observations: 1752; number of participants: 219; number of items: 128.

Level of significance: ∙ (p ≤ 0.1), * (p ≤ 0.05), ** (p ≤ 0.01), *** (p ≤ 0.001).

Response (Yes/No) ∼ Identity + Neighbourhood density (L1) + Neighbourhood density (AL) + Trial number + Response time + (1 + Response time | Participant) + (1 | Item)

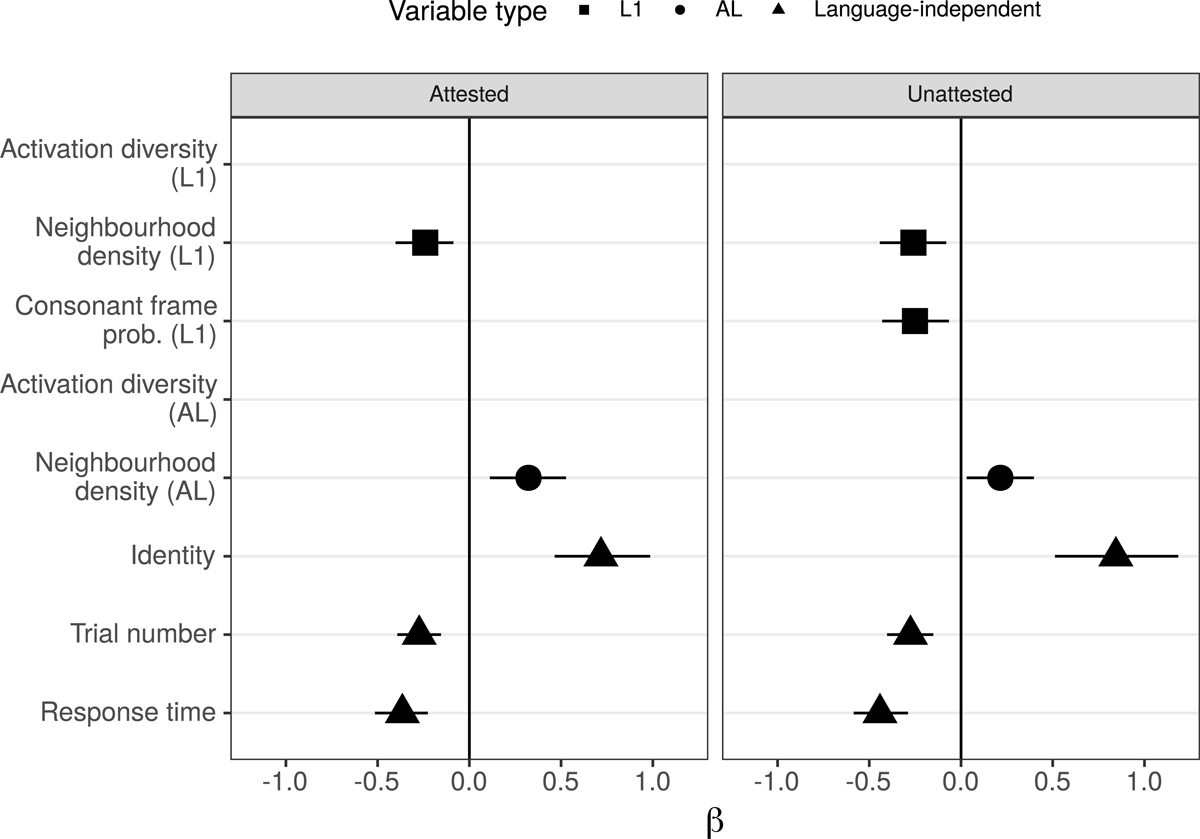

Again we first examine the language-independent variables. Like the German group (Model 1 and Model 2), Identity, trial number, and response time were highly significant in the expected directions. Identical consonant frames are accepted more than non-identical consonant frames; the higher the trial number, the lower the likelihood of acceptance; and the longer the response time, the lower the likelihood of acceptance.

Unlike in the German group (Model 1), of the three L1 variables only neighbourhood density was significant, while both activation diversity and consonant frame probability had no significant effect on nonword acceptance. The effect of neighbourhood density (L1) suggests that the more L1-like the nonword, the lower the likelihood of acceptance. Of the AL variables, only neighbourhood density (AL) was significant, in the same direction as in the German group: the more AL-like the nonword, the higher the likelihood of acceptance. Activation diversity (AL) was not significant. Finally, the effect size of neighbourhood density (AL) is larger than that of its L1 counterpart. Apart from the non-significance of the two L1 variables, activation diversity and consonant frame probability, the direction of the L1 and AL effects and the relative difference of their effect sizes are consistent with those of the German group (Model 1). In sum, the influence of the AL lexicon is stronger than that of the L1 lexicon with the attested items. Random effects in Model 3 are summarised in Table 16 of the appendix.

3.2.2. Study II: Mandarin: Unattested

Table 7 summarises the fixed effects in Model 4, which is fitted over the Mandarin unattested nonwords. The model structure of the best model is shown below.

Fixed effects summary for Study II (Mandarin, unattested). β: Coefficient; SE: Standard error; z: z-value; CILower95% and CIUpper95%: 95% confidence intervals of the coefficient from bootstrapping; pBootstrapped: p-value from bootstrapping simulations.

| | | β | SE | z | CILower95% | CIUpper95% | pBootstrapped |
|---|---|---|---|---|---|---|---|
| | (Intercept) | 1.1052 | 0.1118 | 9.8829 | 0.8917 | 1.3261 | <.001*** |
| L1 | Activation diversity | – | – | – | – | – | n.s. |
| | Neighbourhood density | –0.2596 | 0.0964 | –2.6928 | –0.4424 | –0.0806 | .008** |
| | Consonant frame prob. | –0.2507 | 0.0952 | –2.6330 | –0.4298 | –0.0662 | .004** |
| AL | Activation diversity | – | – | – | – | – | n.s. |
| | Neighbourhood density | 0.2147 | 0.0938 | 2.2861 | 0.0307 | 0.3975 | .016* |
| Language independent | Identity (Identical vs. Non-identical) | 0.8441 | 0.1701 | 4.9618 | 0.5122 | 1.1841 | <.001*** |
| | Trial number | –0.2754 | 0.0639 | –4.3086 | –0.4028 | –0.1508 | <.001*** |
| | Response time | –0.4418 | 0.0743 | –5.9449 | –0.5851 | –0.2889 | <.001*** |

Number of observations: 1752; number of participants: 219; number of items: 128.

Level of significance: ∙ (p ≤ 0.1), * (p ≤ 0.05), ** (p ≤ 0.01), *** (p ≤ 0.001).

Response (Yes/No) ∼ Identity + Neighbourhood density (L1) + Consonant frame probability (L1) + Neighbourhood density (AL) + Trial number + Response time + (1 | Participant) + (1 | Item)

The language-independent variables, identity, trial number, and response time, were all highly significant, and their effects are in the same direction as in all the other models.

Of the three L1 variables, activation diversity was not significant and was dropped during model selection, while neighbourhood density and consonant frame probability were both significant with a negative effect. The more L1-wordlike the nonword, the lower its likelihood of acceptance. Of the two AL variables, only neighbourhood density was significant, with a positive effect. A comparison of the effect sizes across the L1 and AL variables suggests that neighbourhood density (AL) has a weaker effect than its L1 counterpart as well as consonant frame probability (L1).

Apart from the non-significance of the L1 variable activation diversity, the direction of the L1 and AL effects and the relative difference of their effect sizes are consistent with those of the German group (Model 2). Again, the influence of the AL lexicon is weaker than that of the L1 lexicon with the unattested items. Random effects in Model 4 are summarised in Table 17 of the appendix.

Figure 3 summarises the effect sizes of the significant variables in Model 3 and Model 4. It illustrates that the direction of the significant effects is consistent across the two attestedness conditions, with the minor difference that consonant frame probability (L1) is significant only in the unattested condition.
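
The effect-size comparison for the Mandarin models can be retraced with a few lines of Python. The sketch below copies the significant L1 and AL coefficients from Table 6 and Table 7, ranks them by absolute magnitude, and converts each log-odds coefficient into an odds ratio. Comparing magnitudes in this way assumes that the predictors were entered on comparable scales, which is an assumption of this sketch; the dictionary layout and the function names are likewise introduced here for illustration.

```python
import math

# Log-odds coefficients copied from Table 6 (attested) and Table 7 (unattested).
MANDARIN = {
    "attested": {
        "Neighbourhood density (L1)": -0.2405,
        "Neighbourhood density (AL)": 0.3226,
    },
    "unattested": {
        "Neighbourhood density (L1)": -0.2596,
        "Consonant frame probability (L1)": -0.2507,
        "Neighbourhood density (AL)": 0.2147,
    },
}


def odds_ratio(beta):
    """Multiplicative change in the odds of acceptance per one-unit increase."""
    return math.exp(beta)


def strongest(coefficients):
    """Name of the predictor with the largest absolute coefficient."""
    return max(coefficients, key=lambda name: abs(coefficients[name]))


for condition, coefficients in MANDARIN.items():
    print(f"{condition}: largest |beta| is {strongest(coefficients)}")
    for name, beta in coefficients.items():
        print(f"  {name}: beta = {beta:+.4f}, odds ratio = {odds_ratio(beta):.3f}")
```

The ranking reproduces the pattern described above: the AL variable has the largest coefficient among the attested items, and an L1 variable has the largest coefficient among the unattested items.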

3.3. Attestedness and its interactions

In the models for attested items (Model 1 and Model 3), we observed that the AL variables have a stronger effect than the L1 variables. In contrast, in the models for the unattested items (Model 2 and Model 4), the L1 variables have a stronger effect than the AL variables. To better evaluate this pattern, we conducted two additional analyses, testing for each language group separately whether attestedness interacts with the L1 and AL variables. Attestedness was sum-coded with unattested as the base level. Below, we present the best model for German; next we present the best model for Mandarin.

Response (Yes/No) ∼ Identity + Activation diversity (L1) + Neighbourhood density (L1) + Neighbourhood density (AL) + Consonant frame probability (L1) + Trial number + Response time + Activation diversity (L1):Attestedness + Neighbourhood density (AL):Attestedness + Consonant frame probability (L1):Attestedness + (1 + Response time | Participant) + (1 | Item)

Response (Yes/No) ∼ Identity + Neighbourhood density (L1) + Consonant frame probability (L1) + Neighbourhood density (AL) + Trial number + Response time + Consonant frame probability (L1):Attestedness + (1 + Response time | Participant) + (1 | Item)

Table 8 and Table 9 summarise the fixed effects of the two regression models, which are fitted over the German nonwords and the Mandarin nonwords respectively. No main effect of attestedness was found in either the German group or the Mandarin group. For German, attestedness interacted significantly with neighbourhood density (AL), and two further interaction terms were near significant: those with activation diversity (L1) and consonant frame probability (L1). Attestedness did not interact significantly with neighbourhood density (L1), and this term was dropped during model selection. For Mandarin, only one of the four interaction terms was significant: the interaction of attestedness with consonant frame probability (L1). Attestedness did not interact significantly with neighbourhood density (L1) or neighbourhood density (AL), and these terms were dropped during model selection. Random effects of the two models are summarised in Table 18 and Table 19 of the appendix.

Fixed effects summary for Study I with attestedness and its interactions with L1 and AL variables (German, attested, and unattested). β: Coefficient; SE: Standard error; z: z-value; CILower95% and CIUpper95%: 95% confidence intervals of the coefficient from bootstrapping; pBootstrapped: p-value from bootstrapping simulations.

| β | SE | z | CILower95% | CIUpper95% | pBootstrapped | ||

| (Intercept) | 1.4229 | 0.1033 | 13.7782 | 1.2352 | 1.6254 | <.001*** | |

| L1 | Activation diversity | –0.2822 | 0.0730 | –3.8654 | –0.4301 | –0.1388 | .002** |

| Neighbourhood density | –0.2911 | 0.0738 | –3.9476 | –0.4328 | –0.1456 | <.001*** | |

| Consonant frame prob. | –0.2418 | 0.0676 | –3.5751 | –0.3776 | –0.1095 | <.001*** | |

| AL | Activation diversity | – | – | – | – | – | n.s. |