1. Background

One important question in prosody research is the following: How do speakers make syllables and words prominent in speech, and how do listeners make use of this information? The answer to this question is complex, entailing a consideration of a language’s various cues to prominence, and the listener’s incorporation of prominence information in different domains of perception and processing.

In speech production, the literature has documented various ways in which speech articulations and acoustics are modulated by prosodic prominence, referred to here under the umbrella term of “prominence strengthening.” These effects generally help enhance a given segment’s perceptual salience, and/or enhance acoustic (or featural) properties relevant for the contrast system of a given language (e.g., Beckman, Edwards, & Fletcher, 1992; Cho, 2005; Cole, Kim, Choi, & Hasegawa-Johnson, 2007; de Jong, 1995; Garellek, 2014; Kim, Kim, & Cho, 2018).

In comparison, relatively little work has been carried out examining the perceptual component of the above question. The present study thus addresses one part of this line of inquiry from the perspective of the listener. In three experiments, this study tests how glottalized voice quality and production of a glottal stop impact the perception of vowels in American English, in line with the hypothesized function of glottalization as prominence marking. The perception of /ɛ/ versus /æ/ is adopted as a test case. A visual-world eyetracking experiment further tests how the influence of glottalization plays out in online speech processing, and compares this data to that of a previous study (Steffman, 2021a), informing our understanding of how prominence cues are integrated as speech unfolds.

The introduction proceeds with a working definition of prosodic prominence (1.1), the role that vowel-initial glottalization has been shown to play in speech production (1.2), and finally the role of prosodic information in perception (1.3), motivating the test of vowel-initial glottalization as a prominence cue.

1.1. Defining prominence

As suggested by continuing and recent reviews (Baumann & Cangemi, 2020; Ladd & Arvaniti, 2023), defining prominence is not an entirely straightforward enterprise. For the purpose of the present study, prominence is considered in two regards.

Firstly, following commonly used terminology from Jun (2005, 2014), a language’s prosodic system can be described as having “head prominence” and/or “edge prominence.” In the former, the expression of prominence is linked to a prosodic head. Relevant to the present study, in American English this head is a metrically prominent syllable in a phrase. Metrically prominent syllables may be marked as phrasally prominent, and produced with a prominence-lending pitch movement (a pitch accent). This sort of prominence will henceforth be described as “phrasal prominence.” Of note, in languages which are described as lacking head prominence, the notion of a prosodic head is not relevant and intonational F0 events demarcate domain (phrase) edges; Ladd and Arvaniti (2023) raise the question of whether, in languages of this sort, the concept of prosodic prominence is a useful one at all.

Another definition of prominence can be made without reference to metrical structure, or the prosodic features of a particular language. This is a language-general notion of “standing out.” Two definitions are as follows:

- (1) “Prosodic prominence [is] the strength of a spoken word relative to the words surrounding it in the utterance” (Cole, Mo, & Hasegawa-Johnson, 2010, p. 425).

- (2) “Loosely defined, ‘perceptual prominence’ refers to any aspect of speech that somehow ‘stands out’ to the listener” (Baumann & Cangemi, 2020, p. 20).

The definition in (1) uses the concept of a word, though the same definition could also apply to sub-word units. Both this definition and the perceptual definition in (2) are evidently related to phrasal prominence: a phrasally prominent, pitch-accented syllable/word will be prominent in the sense of both (1) and (2).1 However, the definitions are broader in that other properties besides phrasal prominence may also impact the (perceived) “strength” of a word in relation to surrounding material (including, e.g., word frequency as in Baumann & Winter, 2018). One relevant example of this is domain-initial strengthening (e.g., Cho & McQueen, 2005; Keating, 2006; Keating, Cho, Fougeron, & Hsu, 2004). Here the phonetic properties of segments are strengthened in phrase-initial positions, though not necessarily in analogous fashion to strengthening under phrasal prominence (Kim, Kim, & Cho, 2018). These strengthening effects can be seen as enhancing the acoustic/phonetic prominence of a given segment, if prominence is defined as in (1) and (2) above.

As will be described in Section 1.2, vowel-initial glottalization in American English can be related both to phrasal prominence, and to the more general definitions given in (1) and (2). On the one hand, it is probabilistically predicted by phrasal prominence: Phrasally prominent vowel-initial words are more likely to be preceded by glottalization, discussed below. On the other hand, vowel-initial glottalization is also predicted by phrasing, and can be seen as an instance of general acoustic/phonetic prominence strengthening for the following vowel. Both of these views of prominence are thus relevant when considering vowel-initial glottalization effects.

1.2. Vowel-initial glottalization in speech production

“Glottalization” is used here as a cover term to refer to the production of a sustained closure of the vocal folds, i.e., a glottal stop [ʔ], and localized voice quality changes that are associated with constriction of the vocal folds during voicing (Garellek, 2013; Huffman, 2005). The cover term is useful if we consider the latter of these to be an “incomplete” or lenited glottal stop realization, as is common in the literature (Dilley, Shattuck-Hufnagel, & Ostendorf, 1996; Pierrehumbert & Talkin, 1992).

Of the languages described in the UPSID database (Maddieson & Precoda, 1989), about half use glottalization contrastively (often represented as /ʔ/). However, in many languages that do not use glottalization contrastively, it is well documented that glottalization is nevertheless pervasive in speech, for example in English, Dutch, and Spanish (Dilley et al., 1996; Garellek, 2014; Jongenburger & van Heuven, 1991). An important task for speech research is thus accounting for the prevalence and distribution of glottalization in spoken language.

One clear predictor of glottalization in American English (among other languages) is prosodic organization, both related to prosodic boundaries and prosodic prominence, as noted above. Glottal stops are described as being “inserted” at the beginning of vowel-initial words in prosodically strong positions, where prosodically strong positions include the beginning of a prosodic phrase (Dilley et al., 1996; Pierrehumbert & Talkin, 1992), and in words which bear phrasal prominence (Dilley et al., 1996; Garellek, 2013). Dilley et al. (1996) in particular show that phrase-medial, word-initial vowels in pitch-accented (phrasally-prominent) syllables are glottalized at higher rates as compared to non-prominent equivalents. Notably, however, not all pitch-accented word-initial vowels are glottalized, and vowels in words which lack a pitch accent but do not have a reduced vowel are more likely to be glottalized than reduced vowels. Speakers also vary widely in their overall rate of glottalization and the extent to which prominence impacts their rate of glottalization. In this sense, glottalization in word-initial vowels is only probabilistically related to phrasal prominence marking, though with a clear tendency to co-occur with phrasal prominence. Redi and Shattuck-Hufnagel (2001) document similar patterns, and consistent inter-speaker variation, and state: “It is clear from these results and from earlier studies that phrase-level glottalization is not obligatory […] glottalization may serve as a marker of ‘degree of finality’ (when it occurs at phrase boundaries) or ‘degree of prominence’ (when it occurs at pitch-accented syllables). Perceptual experiments will be necessary to evaluate the hypothesis that glottalization unrelated to segmental allophony is interpreted by listeners as evidence for a boundary or a prominence, and to determine whether it is interpreted along a continuum or as a contrastive binary feature” (p. 427). 
The present study addresses both of these perceptual questions.

Garellek (2013, 2014) further suggests a functional motivation for vowel-initial glottalization in American English, using electroglottography (EGG) to examine voicing in vowel-initial words. Garellek (2014) found that phrase-initial vowels, particularly non-prominent vowels, were generally produced with less vocal fold contact during voicing, corresponding to breathy voicing (not glottalization). This suggests that, for Garellek’s data at least, glottalization does not have a systematic effect on non-prominent vowels phrase-initially and is more closely related to prominence marking (cf. Dilley et al., 1996). This effect also became larger at higher-level phrasal domains. Breathier phrase-initial voicing can be attributed to phrase-initial pitch reset, where falling pitch (immediately after reset) results in relaxation of the cricothyroid and thyroarytenoid muscles, and vocal fold abduction (Mendelsohn & Zhang, 2011; Zhang, 2011). Breathier voicing generally leads to decreased intensity and weaker formant energy (Garellek & Keating, 2011; Gordon & Ladefoged, 2001), and Garellek (2014) accordingly proposes that phrase-initial glottalization, most evident in his data in prominent phrase-initial vowels, occurs as a countervailing influence which mitigates the effects of pitch-reset-induced breathiness on voice quality. Glottalization in prominent phrase-initial vowels “strengthens” these vowels, as described by Garellek, in the sense that it engenders more high frequency energy and overall intensity, and boosts frequency information that will be useful in vowel perception (Kreiman & Sidtis, 2011; cf. Garellek, 2013 who found a boost of harmonic energy between 1500–2500 Hz). Glottalization may also be functionally useful in prominence-marking in separating prominent vowel-initial words from surrounding material, and modulating the amplitude envelope in the vicinity of prominent vowels to make them stand out. 
Preceding silence from a glottal stop will likewise give a boost to listeners’ auditory system at the onset of the vowel (Delgutte, 1980; Delgutte & Kiang, 1984). This view of phrase-initial (and phrase-medial) glottalization implicates (acoustic/phonetic) prominence as a driving force behind it, in that vowels which are preceded by glottalization are enhanced (though this may be either at prosodic domain edges to mitigate phrase-initial breathiness, or at phrasally prominent prosodic heads). In this sense, glottalization in word-initial vowels in American English can be seen as an example of phonetic prominence strengthening, which is related probabilistically to phrasal prominence.

In addition to prosodic prominence, other factors have been shown to influence the rate and distribution of glottalization preceding a vowel in various languages. These include speech rate (Pompino-Marschall & Żygis, 2010; Umeda, 1978) and vowel height (Groves, Groves, Jacobs, et al., 1985; Michnowicz & Kagan, 2016; Pompino-Marschall & Żygis, 2010; Thompson, Thompson, & Efrat, 1974). As documented in German and Spanish, the relative openness of vowels in a vowel hiatus environment predicts the production of glottalization between them: Lower (more open) vowels are more likely to be preceded by a glottal stop (Mckinnon, 2018; Pompino-Marschall & Żygis, 2010). However, relevant to the present study, in American English this is not systematic. Umeda (1978) found no relationship between relative differences in vowel height and production of a glottal stop in a hiatus environment, and Garellek (2013) found that the rate of production of glottal stop in a vowel-initial word was not related to vowel height. Given this, it appears that vowel-initial glottalization is not well predicted by vowel height in American English as it is in e.g., German. This point will be returned to in Section 3.3 in light of the results.

1.3. Prosody and prominence in perception

Given the aforementioned patterns attested in the speech production literature, we can now consider some ways in which prosodic information impacts speech perception, and how these prior findings relate to the objectives of the current study.

In some studies, prosodic information (e.g., an intonational tune) has been shown to exert a predictive, or anticipatory, influence on speech processing. For example, Weber, Grice, and Crocker (2006) found that German intonational tunes are used by listeners to disambiguate temporarily ambiguous sentences as S(ubject) V(erb) O(bject) or OVS, prior to critical case information which disambiguated the constituent order. Similar anticipatory effects of pitch accent type were shown by Ito and Speer (2008), whereby a prominent (L+H*) pitch accent was interpreted as conveying contrastive focus on one element in adjective-noun pairs, generating anticipatory looks to a referent (e.g., as participants decorated a Christmas tree: “Hang the blue ball, now hang the GREEN ….” generates anticipatory looks to a green ball). Results such as these in Weber et al. (2006) and Ito and Speer (2008) (among others, e.g., Dahan, Tanenhaus, & Chambers, 2002; Nakamura, Harris, & Jun, 2022; Snedeker & Trueswell, 2003) indicate that prosodic cues, especially intonational tunes, can be used to anticipate upcoming speech in terms of syntactic, discourse, and information structure.

Complementing this research, the role of prosodic features such as prominence in the perception of speech segments (and relatedly in pre-lexical and lexical processing) has been a recent topic of interest in the literature (Kim, Mitterer, & Cho, 2018; McQueen & Dilley, 2020; Mitterer, Cho, & Kim, 2016; Mitterer, Kim, & Cho, 2019). In comparison to the results described in the preceding paragraph, data in this line of research offers a different view of the way in which listeners use prosodic information in their perception of fine-grained phonetic detail, and their integration of prosody in perception of cues to segmental contrasts. As alluded to above, it is well documented in the speech production literature that prosodic organization modulates cues that are relevant in the perception of segmental contrasts (see e.g., Keating, 2006 for an overview). For example, voice onset time (VOT) in aspirated stops, an important cue for voicing contrasts, varies systematically as a function of prosodic factors. VOT is longer at the beginning of prosodic domains and in phrasally prominent positions (Cole et al., 2007; Keating et al., 2004; Kim, Mitterer, & Cho, 2018). Another example of prosodically modulated cues to segmental contrasts, described in more detail in Section 2, is that of vowel formants. To the extent that phrasal prosody impacts segmental realization along these lines, the listener is hypothesized to benefit from integrating prosodic information in their perception of segmental cues (Kim & Cho, 2013; Mitterer et al., 2016).

A model which has framed this line of inquiry and received empirical support is that of Prosodic Analysis (Cho, McQueen, & Cox, 2007; McQueen & Dilley, 2020). The model’s architecture stipulates simultaneous parses of segmental information and prosodic information from the speech signal, though the role of each of these in processing is different. Adopting an activation-competition view of word recognition, the model postulates that segmental information activates entries in the lexicon, while phrasal prosodic information is used to select among possible lexical candidates. In the original formulation of the model this entails the reconciliation of prosodic boundaries and word boundaries to determine lexical selection (cf. Christophe, Peperkamp, Pallier, Block, & Mehler, 2004). Empirical support for the model comes from studies showing a delayed influence of prosodic boundary information in processing (Kim, Mitterer, & Cho, 2018; Mitterer et al., 2019), consistent with a post-lexical influence in word recognition. This framing of the role of prosody in processing departs somewhat from the anticipatory effects described above, and this follows from the fact that prosodic characteristics are good predictors of sentence and discourse structure as in Weber et al. (2006) and Ito and Speer (2008); however, they are not good predictors of particular lexical items themselves (i.e., generally speaking, a given word can be produced with a range of prosodic expressions, phrase-medially, phrase-initially, and so on). In this sense, the Prosodic Analysis model (and existing data) suggests that prosodic information is not used to anticipate a given word, but is instead integrated with bottom-up cues in lexical processing with a relative delay, consistent with modulation of activated lexical hypotheses. 
In other words, if the listener’s task is to identify a lexical item (in the absence of other good predictive information), prosodic cues may be integrated in this process but not used to anticipate what word will be said before acoustic information about that word is perceived. What the Prosodic Analysis model and available data show more generally is the importance of considering both prosodic and segmental factors as being processed in parallel in speech recognition, with many outstanding questions (see McQueen & Dilley, 2020 for a recent overview).

With respect to glottalization specifically, recent perception and processing studies in Maltese, a language in which /ʔ/ is contrastive, suggest that listeners are sensitive to its prosodic patterning in the language (Mitterer et al., 2019; Mitterer, Kim, & Cho, 2021a, 2021b). In addition to marking a phonemic contrast in Maltese, vowel-initial words can be glottalized when they are at the beginning of a prosodic phrase as a form of phrase-initial strengthening. Glottalization thus serves a sort of dual function: It is phonemic and conveys contrast, and also patterns based on prosodic organization. Mitterer et al. (2019) show that listeners are aware of this dual patterning. When a word is phrase-initial, the listener is more likely to attribute the presence of glottalization as being driven by prosody, thus inferring a phonemically vowel-initial word. In contrast, when glottalization precedes a vowel phrase-medially, the listener is more likely to infer that the word is phonemically/contrastively glottalized. Consistent with the prediction of the Prosodic Analysis model, these effects were delayed in time, as assessed in a visual world eyetracking study. Mitterer et al. (2021a) show that glottal stops differ from other stops (e.g., /t/) in that they do not strongly constrain lexical access, suggesting that listeners’ interpretation of glottalization is intimately linked to prosodic features in a way that differs from other stops. Mitterer et al. (2021b) further show that glottalization is clearly interpreted as a prosodic feature in that it impacts syntactic parsing decisions in the resolution of attachment ambiguity: The presence of word-initial glottalization leads listeners to posit a preceding prosodic boundary, and thus the presence of a syntactic boundary. 
These results together thus suggest that vowel-initial glottalization can be treated as a prosodic cue in perception by listeners, even when glottalization is contrastive.

Steffman (2021a) offers another relevant comparison for the present study. Steffman examined the influence of prosodic prominence on listeners’ perception of vowel contrasts, as cued by the intonational tune and durational patterns of a phrase. Vowels are strengthened phonetically by formant modulations described in Section 2. Steffman thus tested how phrase-level prominence impacted the perception of vowel formants, and further examined the timecourse of its influence. As noted above, in American English, the expression of prominence is related to the placement of pitch accents in a phrase, which are linked to metrically prominent syllables and (in the autosegmental-metrical model of American English intonation, e.g., Pierrehumbert, 1980) are implemented as F0 targets in an intonation contour. Steffman manipulated F0, duration and intensity in a phrase to shift perceived pitch accentuation, and the perceived prominence of a target word, in the stimuli. In one condition, the target word (which was categorized by listeners) was relatively prominent, interpretable as having an (H*) pitch accent in the phrase “I’ll say [TARGET] now” (where [TARGET] indicates the target word; this could be uttered in a broad focus context). In the other condition, the target word was preceded by focus on the verb “say”: “I’ll SAY [target] now,” where “say” bore a prominent L+H* pitch accent (this could be uttered in a contrastive focus context, e.g., A: “Will you write [target] now?”, B: “I’ll SAY [target] now”). In this condition the target is post-focus and non-prominent (more details on the stimuli in Steffman, 2021a are given in Section 4.5.2, which compares that data to the results of this study). This prominence manipulation is one of phrasal/global prominence cues, and was found to impact listeners’ perception of the target vowel in line with the patterns which will be described in Section 2.

Using eyetracking data, Steffman additionally found that, in contrast to the strictly delayed influence of prosodic boundaries documented in previous studies (Kim, Mitterer, & Cho, 2018; Mitterer et al., 2019), phrasal prominence showed subtle earlier influences in vowel perception, though these effects were quite small, and strengthened over time to be more robust later in processing. The presence of the earlier effect was discussed in Steffman (2020, 2021a) as reflecting prominence processing at multiple stages, described in terms of the Multistage Assessment of Prominence in Processing (MAPP) model. This model proposes that prosodic information needn’t show a strictly delayed (post-lexical) influence in processing as in the Prosodic Analysis model. Instead, early effects reflect “phonetic prominence”: The relative acoustic/phonetic salience of a word (signaled by whatever cues lend prominence in this sense). The fact that the effect was strongest later in time was interpreted as the result of a more abstract/phonological prominence percept (e.g., the presence or absence of pitch-accentuation), which is reconciled with lexical candidates, under the hypothesis that the lexicon contains information about prosodically conditioned pronunciation variants along the lines of Brand and Ernestus (2018); Mitterer et al. (2021a); Pitt (2009). Notably, this multi-stage effect was generated from stimuli that varied both in terms of phonological prominence (pitch accent structure within the phrase), and necessarily, the relative phonetic prominence of the target word. One prediction from the MAPP model is thus that cues which convey only “phonetic prominence,” i.e., vowel-initial glottalization, without varying a more global prominence in terms of pitch accent structure, etc., should show a clear early effect, and a different online processing pattern than the effect in Steffman (2021a). 
The present data thus address this prediction from the model directly as a test of how different cues to prominence may be processed differently.

2. The present study

Given these recent studies on the role of prominence in vowel perception and the processing of vowel-initial glottalization, the present experiments will inform whether prominence cued by glottalization should be considered as a mediating factor in vowel perception in American English, a language where glottalization is not contrastive. To the extent that vowel-initial glottalization is a relevant prominence cue, we can examine the timecourse of its influence in relation to the general prediction from the Prosodic Analysis model that prosody shows a delayed influence in processing, and compare this data to that in Steffman (2021a).

Relevant to the present study, the literature documents a variety of ways in which vowel articulations may be modulated under prominence. Typically, prosodic prominence is here considered in terms of phrase-level prominence marking: The presence or absence of a pitch accent. A well-documented pattern of prominence strengthening in vowels has been termed sonority expansion, where sonority is defined as “the overall openness of the vocal tract or the impedance looking forward from the glottis” (Silverman & Pierrehumbert, 1990, p. 75). In this sense, a more sonorous vowel articulation is one which is produced with increased amplitude of jaw movement and other articulatory adjustments that allow more energy to radiate from the mouth. Sonority-expanding gestures make a vowel articulation more acoustically prominent (louder, longer etc.), and have been described as enhancing its “sonority features” (de Jong, 1995). Other effects, not consistent with sonority expansion, have also been documented in the literature, for example, the production of more extreme high vowel articulations (as with /i/), which are not more open but instead reflect hyperarticulation of the vowel target under prominence (Cho, 2005; de Jong, 1995; Erickson, 2002). In this sense, patterns of prominence strengthening are dependent on the vowels under consideration, and the system of contrasts in the language (e.g., Cho, 2005; Garellek & White, 2015), and the same holds for the listener’s perception of vowels as a function of prominence (Steffman, 2020).

Vowels which do undergo sonority expansion are realized as acoustically lower and backer in the vowel space, with higher F1 and lower F2 (Cho, 2005), and listeners’ perception of prominence in a prominence transcription task reflects this formant variation as well (Mo, Cole, & Hasegawa-Johnson, 2009). This pattern will form the basis of the test case adopted in the present study as we ask if listeners expect a more prominent variant of a vowel (specifically with higher F1 and lower F2) to be realized in a prominent context.

The questions raised in Section 1 are addressed by testing whether a glottal stop modulates vowel perception in line with sonority expansion effects on vowel formants (Experiment 1), using the contrast between /ɛ/ and /æ/ as a test case (vowels which undergo sonority expansion). This study further tests whether fine-grained glottalization cues that do not entail a sustained stop generate the same effect (Experiment 2), and whether glottalization mediates online processing of vowel information in the ways predicted by the current model of Prosodic Analysis (Experiment 3). For this purpose two methods are used: A two-alternative forced choice task, and a visual world eyetracking task. In both, listeners categorized stimuli on an /ɛ/-/æ/ continuum with various contextual manipulations of glottalization. The stimuli used in the present experiments, the data for each experiment, and the scripts used to analyze the data are included in full in the open-access repository for the paper hosted via the Open Science Framework at https://osf.io/v4cdz/.

2.1. Predictions

In order to help explain the creation of the stimuli, let us first consider several empirical predictions. If a vowel preceded by glottalization is perceived as prominent, a more prominent acoustic realization of that vowel may be expected by listeners. In this case, it would mean a lower and backer realization of the vowel (with higher F1 and lower F2), with a prominent /ɛ/ essentially becoming acoustically more like /æ/. The corresponding perceptual response would thus be a shift in categorization of the F1/F2 continuum, with more sonorous (lower, backer) F1/F2 values categorized as /ɛ/ in a prominent context (when preceded by glottalization), as compared to a non-prominent one. Empirically, this predicts increased /ɛ/ responses under prominence. Such an effect would constitute perceptual re-calibration for a prominent vowel realization. It is worth noting here that Steffman (2021a) found this effect with the same contrast, when prominence was cued by global/phrasal context as described above.
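The predicted shift can be illustrated with a toy categorization function. The sketch below is only an illustration of the logic of the prediction, not the analysis used in this study: it assumes a logistic response curve over the 10 continuum steps, and the boundary and slope values are invented placeholders rather than results.

```python
import math

def prop_ae(step, boundary, slope=1.0):
    """Hypothetical logistic probability of an /ae/ response at a continuum step."""
    return 1.0 / (1.0 + math.exp(-slope * (step - boundary)))

STEPS = range(1, 11)        # step 1 = most "ebb"-like, step 10 = most "ab"-like

# Placeholder category boundaries (assumed values, not measured results).
BOUNDARY_NO_GLOTTAL = 5.5   # non-prominent context
BOUNDARY_GLOTTAL = 6.0      # prominent context: boundary shifted toward /ae/

# Probability of an /eh/ ("ebb") response in each context.
eh_no_glottal = [1.0 - prop_ae(s, BOUNDARY_NO_GLOTTAL) for s in STEPS]
eh_glottal = [1.0 - prop_ae(s, BOUNDARY_GLOTTAL) for s in STEPS]

for s, n, g in zip(STEPS, eh_no_glottal, eh_glottal):
    print(f"step {s:2d}: P(eh | no glottal) = {n:.2f}, P(eh | glottal) = {g:.2f}")
```

Under these assumptions, a boundary shifted toward the /æ/ end of the continuum yields a higher proportion of /ɛ/ responses at every step, which is the direction of the effect predicted under prominence.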

2.2. Materials

The materials used in all experiments reported here were created by re-synthesizing the speech of a male American English speaker. The speech used in making the stimuli was recorded in a sound-attenuated booth in the UCLA Phonetics Lab, using an SM10A Shure™ microphone and headset. Recordings were digitized at 32 bit with a 44.1 kHz sampling rate.

2.2.1. A full glottal stop: Experiments 1 and 3

The method for creating the stimuli was to design a continuum that varied in F1 and F2, ranging between two vowels, and manipulate the presence or absence of preceding glottalization. The two words used as endpoints of the continuum were “ebb” /ɛ/, and “ab” /æ/. F1 and F2 were manipulated by LPC decomposition and resynthesis using the Burg method (Winn, 2016) in Praat (Boersma & Weenink, 2020). The formant values for each endpoint were based on model sound productions of “ebb” and “ab,” with measures across the entire vowel (i.e., time-series measurements that included the dynamics of F1 and F2). The resynthesis process estimated the source and filter for the starting model sound from the “ebb” model. The filter model’s F1 and F2 were then adjusted to match those of a model “ab” production. From these two filter models, eight intermediate filter steps were created by interpolating between these model endpoint values in Bark space (Traunmüller, 1990). Phase-locked higher frequencies from the starting base /ɛ/ model were restored to all continuum steps, improving the naturalness of the continuum. The result was a 10-step continuum ranging from /ɛ/ to /æ/ values in F1 and F2. Intensity and pitch were invariant across the continuum.
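The interpolation step can be sketched as follows. This is a minimal illustration and not the resynthesis script itself (which was run in Praat): it applies the Hz-to-Bark conversion of Traunmüller (1990) to single static formant values, whereas the stimuli were built from time-series formant tracks. The endpoint frequencies are hypothetical placeholders, and the function names are introduced here only for illustration.

```python
import numpy as np

def hz_to_bark(f_hz):
    """Traunmueller (1990) conversion, without the low/high-frequency corrections."""
    f_hz = np.asarray(f_hz, dtype=float)
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53

def bark_to_hz(z):
    """Algebraic inverse of hz_to_bark."""
    z = np.asarray(z, dtype=float)
    return 1960.0 * (z + 0.53) / (26.28 - z)

def bark_continuum(start_hz, end_hz, n_steps=10):
    """Return n_steps values equally spaced in Bark, endpoints included."""
    z = np.linspace(hz_to_bark(start_hz), hz_to_bark(end_hz), n_steps)
    return bark_to_hz(z)

# Hypothetical static endpoint values in Hz (assumed, not taken from the stimuli).
F1_EBB, F1_AB = 580.0, 750.0
F2_EBB, F2_AB = 1800.0, 1650.0

f1_steps = bark_continuum(F1_EBB, F1_AB)   # rises from the "ebb" to the "ab" endpoint
f2_steps = bark_continuum(F2_EBB, F2_AB)   # falls from the "ebb" to the "ab" endpoint

for i, (f1, f2) in enumerate(zip(f1_steps, f2_steps), start=1):
    print(f"step {i:2d}: F1 = {f1:6.1f} Hz, F2 = {f2:6.1f} Hz")
```

Spacing the steps in Bark rather than Hz keeps adjacent steps approximately equidistant on an auditory scale; two endpoints plus eight interpolated values give the 10 steps.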

The starting point for stimulus creation was a production of the sentence “say the ebb now,” with “the” produced as [ðǝ], which was how the model speaker produced it without explicit instruction (as compared to the alternative pronunciation [ði]).2 The sentence was produced with an H* pitch accent on the word “ebb,” such that the word with the target vowel bore the final (nuclear) pitch accent in the phrase (this was systematic in the model speaker’s other productions of the sentence, including those which were not used in stimulus creation, and was a natural way for them to produce the sentence).

The file from which the continuum was created was one produced without a glottal stop preceding the target word. The model speaker (a trained phonetician) reported that it was most natural for them to produce a glottal stop between the two vowels, though renditions with and without a glottal stop were both easy to produce. The speaker was prompted to record multiple productions of both target words with and without a preceding glottal stop. The base files for stimulus creation were selected as those which had the clearest production of the target vowels, sounded natural in terms of tempo etc., and which were either very clearly produced with, or without, a glottal stop. The creation of the continuum only altered F1 and F2 in the target word as described above, creating a [ðǝɛb] to [ðǝæb] continuum, with continuous formant transitions from the precursor vowel to the target (as there was no intervening glottal stop). Formant tracks for the 10-step continuum and the preceding vowel are shown in Figure 1 panel B. This constitutes what will be referred to as the “no glottal stop condition,” where no glottal stop preceded the target sound in the vowel hiatus environment. The formants in the precursor vowel [ǝ] were also slightly lowered and backed in the vowel space (F1 raised, F2 lowered) so that these manipulations did not introduce a confound related to spectral contrast effects.3 This manipulation made the precursor vowel sound slightly lower than a canonical [ǝ], though it was clearly intelligible and judged to sound natural.

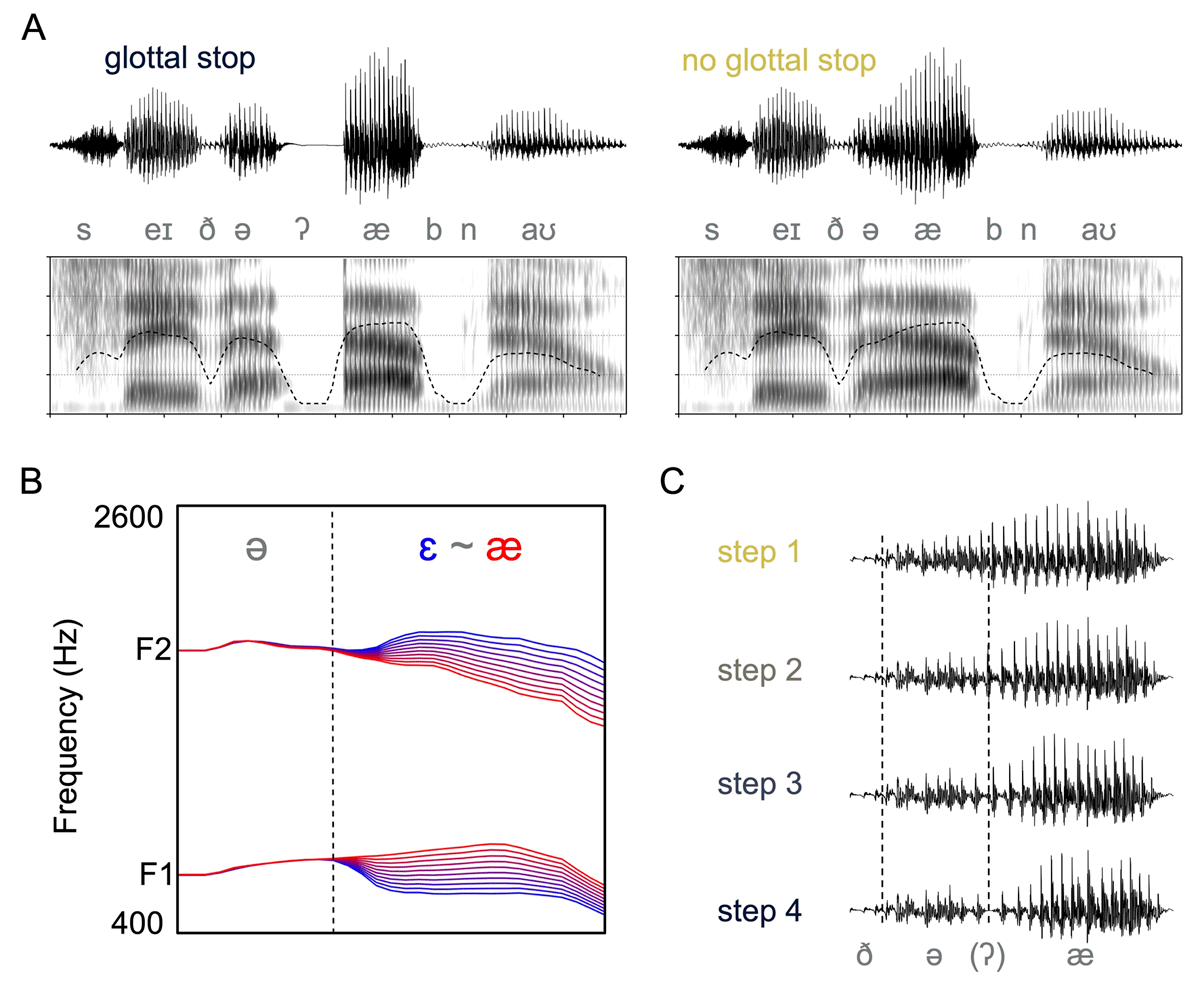

Visualizations of the stimuli used in all Experiments. Panel A: Waveforms and spectrograms showing the glottal stop manipulation (y axis 0–4000 Hz and ticks on the x axis are placed at 100 ms intervals, in this example the target vowel is at step 10, the most /æ/-like). The intensity profile is additionally overlaid on the spectrograms as a dashed line. Panel B: Formant tracks showing the 10-step continuum created from the VV sequence (the target and the preceding vowel). Panel C: Waveforms showing the four steps of the glottalization continuum from Experiment 2, with just the target vowel and preceding vowel shown. The two vertical lines show the beginning and end of [ǝ] respectively (the rightmost line being the same as the vertical line in Panel B).

The method for creating the “glottal stop condition” was to cross-splice [ʔ] from a different production of the carrier phrase in which it preceded the target. The portion of the glottal stop that was inserted was the silent closure (approximately 100 ms in duration), and the short aperiodic burst that accompanied the release of the stop (approximately 15 ms). The stop duration was based on several repetitions from the model speaker (in a careful speech style), and was judged to sound appropriate for the speech rate of the stimuli. This duration is fairly long, though not outside of the norm: Byrd (1993) describes the durational characteristics of glottal stops in the TIMIT database of American English and finds a mean duration of 76 ms for glottal stop closures between two vowels, with 100 ms falling within one standard deviation of that mean (cf. Henton, Ladefoged, & Maddieson, 1992).4

The production from which [ʔ] was cross-spliced was [ðǝʔæb]. In the case that any information about the following vowel is contained in the release of the stop (though none was perceived), it would bias listeners towards /æ/ when a glottal stop precedes the target, which is the opposite of the predicted prominence effect, described in Section 2.1. The point at which the glottal stop was inserted was where formant trajectories began to shift to the target vowel, indicated by the dashed vertical line in Figure 1 panel B. The insertion of [ʔ] resulted in a sudden end to the vowel in the precursor. To render the precursor more natural, several periods from [ǝ] in the production of [ðǝʔæb] were cross-spliced and appended to the precursor vowel at a zero crossing in the waveform. This cross-spliced material replaced the six pitch periods that immediately preceded formant variation along the continuum in the no glottal stop condition (with approximately 60 ms of voicing replaced). The cross-spliced material introduced a dip in amplitude and irregular voicing going into the glottal stop, which was judged to improve the naturalness of the stimuli substantially. This modified precursor vowel and following [ʔ] were cross-spliced to precede all steps on the continuum, resulting in a [ðǝʔɛb] to [ðǝʔæb] continuum, one endpoint of which is shown in Figure 1 panel A. Note that the appended periods were identical for all stimuli, as the precursor did not vary across the formant continuum. All stimuli underwent formant resynthesis, however the glottal stop condition was created by cross-splicing, while there was no cross-splicing manipulation in the no glottal stop condition. This was done in order to keep the continuum acoustically identical across conditions. 
As a consequence the glottal stop condition is, in a sense, less natural than the no glottal stop condition, though the manipulation was found to sound very similar to naturally produced glottal stops (produced by the speaker in recording for the stimuli). The sudden onset of the target vowel in the glottal stop condition was additionally found to match the acoustic profile of these naturally produced stops and thus deemed to be an adequate manipulation of glottalization cues.

2.2.2. A glottalization continuum: Experiment 2

As is well documented in the speech production literature, and noted above, the way in which glottalization is realized phonetically is notoriously variable, and needn’t entail the production of a sustained stop at the glottis (Dilley et al., 1996; Garellek, 2013; Redi & Shattuck-Hufnagel, 2001). As such, an important question is whether different realizations of a glottal stop produce similar perceptual effects. Various studies have shown that glottalization may be cued perceptually by a decrease in pitch and intensity (Gerfen & Baker, 2005; Pierrehumbert & Frisch, 1997). Accordingly, Experiment 2 was designed to create a continuum that varied in glottalization strength. Step 1 in the glottalization continuum in Experiment 2 was the same as the “no glottal stop condition” in Experiment 1. Three additional glottalization conditions were created (labeled steps 2–4 in Figure 1 panel C). In each, pitch and intensity cues were varied to signal an increase in the strength of glottalization between the pre-target and target vowels. The endpoint of the continuum is not a complete stop (unlike the glottal stop condition in Experiment 1).

This manipulation was implemented by decreasing the f0 and intensity at the juncture of the two vowels, indicated by the dashed vertical line in Figure 1 panel B. The seven f0 periods at and surrounding this point were manipulated. Intensity was manipulated as a 2 dB decrease in intensity per glottalization continuum step for these seven periods, which were then cross-spliced into the original unmodified production at zero crossings in the waveform. The pitch manipulation, which was implemented with the PSOLA method in Praat (Moulines & Charpentier, 1990), took the f0 period at the juncture and decreased it linearly by 25 Hz at each step. An original f0 of approximately 115 Hz at Step 1 thus became 90, 65, and 40 Hz at Steps 2, 3, and 4 respectively. f0 was interpolated linearly from this low point across the surrounding three periods on either side to the f0 values surrounding them. The result was a four-step continuum in strength of glottalization, shown in Figure 1 panel C.
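The arithmetic of this manipulation can be sketched in a few lines of Python. This is an illustration only, not the Praat procedure used to build the stimuli: the function names are hypothetical, the intensity decrease is assumed to accumulate relative to the unmodified Step 1 (0, 2, 4, and 6 dB), and the f0 on either side of the manipulated region is assumed to be the approximate 115 Hz baseline.

```python
def glottalization_targets(base_f0_hz=115.0, f0_drop_hz=25.0, db_drop=2.0, n_steps=4):
    """Return the f0 minimum and intensity change for each glottalization step."""
    targets = []
    for step in range(1, n_steps + 1):
        k = step - 1  # number of decrements relative to the unmodified Step 1
        db_change = 0.0 - db_drop * k  # assumption: cumulative decrease per step
        targets.append({
            "step": step,
            "f0_min_hz": base_f0_hz - f0_drop_hz * k,
            "db_change": db_change,
            "amp_factor": 10 ** (db_change / 20),  # dB to linear amplitude ratio
        })
    return targets


def interpolate_f0(f0_before, f0_min, f0_after, n_side=3):
    """Linear f0 contour over 2 * n_side + 1 periods, with the minimum in the centre.

    f0_before and f0_after are the (assumed) f0 values just outside the
    manipulated region, which the contour is interpolated toward.
    """
    contour = []
    for i in range(-n_side, n_side + 1):
        edge = f0_before if i < 0 else f0_after
        weight = abs(i) / (n_side + 1)  # 0 at the juncture, approaching 1 at the edges
        contour.append(f0_min + (edge - f0_min) * weight)
    return contour


targets = glottalization_targets()
step4_contour = interpolate_f0(115.0, targets[-1]["f0_min_hz"], 115.0)
```

Under these assumptions, `targets` reproduces the 115, 90, 65, and 40 Hz minima given above, and `step4_contour` holds the seven period-wise f0 values for the most glottalized step.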

Experiment 2 used a subset of the formant continuum steps from Experiment 1, as it was observed that listeners in Experiment 1 were essentially at ceiling in their categorization responses for steps 1–3. For this reason only steps 3–10 from Experiment 1 were used.

3. Experiments 1 and 2

Experiments 1 and 2 are described and presented together here, given their similarity. In addition to the general prediction of increased /ɛ/ responses under prominence, in Experiment 2 we can further predict that increasing strength of glottalization should entail increasing strength of this effect, where we see additive shifts in categorization from Steps 1–4 along the glottalization continuum.

3.1. Participants and procedure

3.1.1. Experiment 1

Thirty participants were recruited for Experiment 1. All participants were self-reported native American English speakers with normal hearing, and were recruited from the student population at the University of California, Los Angeles. Each participant completed a language background questionnaire and provided informed consent to participate. Participants received course credit for their participation. The online platform that was used to control stimulus presentation was Appsobabble (Tehrani, 2020).

The procedure was a simple two-alternative forced choice (2AFC) task in which participants heard a stimulus and categorized it as one of two words, “ebb” or “ab.” Participants completed testing seated in front of a desktop computer monitor, in a sound-attenuated room in the UCLA Phonetics Lab. Stimuli were presented binaurally via a PELTOR™ 3M™ listen-only headset. The target words were represented orthographically, each target word centered in each half of the monitor. The side of the screen on which the target words appeared was counterbalanced across participants, such that for half of the participants “ebb” was on the left, and for the other half “ebb” was on the right.

Participants were instructed that their task was to identify which word they heard by key press, where a “j” key press indicated the word on the right side of the screen, and an “f” key press indicated the word on the left. Prior to the test trials, participants completed four training trials. In these trials, the continuum endpoints were presented once in each glottalization condition. In the subsequent test trials, each unique stimulus was presented 10 times, in random order, for a total of 200 test trials during the experiment (20 unique stimuli × 10 repetitions). Halfway through the test trials, participants were prompted to take a short self-paced break. The experiment took approximately 15–20 minutes to complete in total.

3.1.2. Experiment 2

Thirty-four participants, none of whom had taken part in Experiment 1, were recruited from the same population for Experiment 2. Data collection and recruitment took place remotely due to COVID-19. Participants were asked to complete the experiment in a quiet location while using headphones. There were a total of 32 unique stimuli used in the experiment (8 formant continuum steps × 4 glottalization continuum steps), each of which was repeated a total of 7 times, for a total of 224 trials in the experiment. The four training trials in Experiment 2 presented the endpoints of the glottalization continuum (step 1 and step 4), with the endpoints of the formant continuum, such that listeners heard the endpoints of both continua. The experimental procedure was otherwise the same as in Experiment 1.
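The two designs can be laid out as fully crossed, randomized trial lists. The following Python sketch is only a check on the trial counts; the function name, condition labels, and random seed are hypothetical, and the actual presentation was controlled by the experiment software.

```python
from itertools import product
import random


def build_trials(formant_steps, glottalization_levels, n_reps, seed=0):
    """Cross the two stimulus dimensions, repeat each stimulus, and shuffle."""
    stimuli = list(product(formant_steps, glottalization_levels))
    trials = stimuli * n_reps
    random.Random(seed).shuffle(trials)  # fixed seed only for reproducibility here
    return stimuli, trials


# Experiment 1: formant steps 1-10 crossed with two glottal stop conditions.
exp1_stimuli, exp1_trials = build_trials(
    range(1, 11), ["no_glottal_stop", "glottal_stop"], n_reps=10
)

# Experiment 2: formant steps 3-10 crossed with glottalization steps 1-4.
exp2_stimuli, exp2_trials = build_trials(range(3, 11), range(1, 5), n_reps=7)

print(len(exp1_stimuli), len(exp1_trials))  # 20 200
print(len(exp2_stimuli), len(exp2_trials))  # 32 224
```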

3.2. Analysis

The analysis of categorization data in all experiments reported here was carried out using a Bayesian logistic mixed-effects regression model, implemented with the R package brms (Bürkner, 2017). The models were run using R version 4.1.2 (R Core Team, 2021) in the RStudio environment (RStudio Team, 2021). Weakly informative normally distributed priors were employed for both the intercept and fixed effects (mean = 0, standard deviation = 1.5 in log-odds space).5,6
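To give a sense of what a normal prior with a standard deviation of 1.5 implies on the response scale, the sketch below converts the central 95% of such a prior on the intercept from log-odds to probabilities. It is an illustration in Python rather than part of the brms analysis, and the variable names are hypothetical.

```python
import math


def inv_logit(x):
    """Convert log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


PRIOR_MEAN = 0.0
PRIOR_SD = 1.5  # in log-odds space, as stated above

# Central 95% of a normal distribution lies within about 1.96 SDs of the mean.
lower_logodds = PRIOR_MEAN - 1.96 * PRIOR_SD
upper_logodds = PRIOR_MEAN + 1.96 * PRIOR_SD

p_lower = inv_logit(lower_logodds)
p_upper = inv_logit(upper_logodds)
print(round(p_lower, 2), round(p_upper, 2))  # 0.05 0.95
```

For the intercept, the prior thus places most of its mass on response probabilities between roughly 0.05 and 0.95, which rules out very little a priori.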

In reporting effects, two measures are given, both characterizing the estimated posterior distribution for a given fixed effect. First, we report the estimate and 95% credible intervals (CrI) for an estimate. This gives the effect size (in log-odds), and characterizes the distribution/certainty around the estimate. When 95% credible intervals exclude 0, this suggests a consistently estimated directionality, and accordingly a robust influence. In comparison, 95% credible intervals which include 0 would indicate substantial variability in the estimated direction of an effect, and therefore a non-reliable impact on categorization. An additional metric is reported: the “probability of direction,” henceforth pd, computed with the bayestestR package (Makowski, Ben-Shachar, & Lüdecke, 2019). This metric is conceptually similar to reporting CrI, but is useful in that it corresponds more intuitively to a frequentist model’s p-value: the pd indexes the percentage of a posterior distribution which shows a given sign, with values ranging between 50 and 100 percent. A posterior centered precisely on zero (i.e., no evidence for an effect) will have a pd of 50, while a posterior with a skewed negative or positive distribution will have a pd that approaches 100. Convincing evidence for an effect would come from pd values that are greater than 97.5 (the pd value that corresponds to 95% CrI excluding zero); a pd value of 100 would indicate that all of the distribution for an estimate excludes the value of zero, which would be very strong evidence for an effect. Tables showing all fixed effects estimates for each model are included in the Appendix.
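The pd can be computed directly from posterior draws as the share of draws on the more common side of zero. The Python sketch below follows the definition given above; it is not the bayestestR implementation, and the function name and the toy draws are hypothetical.

```python
def probability_of_direction(draws):
    """Percentage of posterior draws that share the more frequent sign."""
    n_positive = sum(d > 0 for d in draws)
    n_negative = sum(d < 0 for d in draws)
    return 100.0 * max(n_positive, n_negative) / len(draws)


# Toy draws, invented for illustration (real posteriors have thousands of draws).
balanced_draws = [-2.0, -1.0, 1.0, 2.0]
mostly_positive_draws = [0.1, 0.2, 0.3, -0.1]

print(probability_of_direction(balanced_draws))         # 50.0
print(probability_of_direction(mostly_positive_draws))  # 75.0
```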

Models were coded to predict categorization responses, with an /ɛ/ response mapped to 1, and an /æ/ response mapped to 0. The formant continuum was coded as a continuous variable, and scaled and centered. In Experiment 1, glottalization was contrast coded with the presence of a glottal stop mapped to 0.5, and the absence of a glottal stop mapped to –0.5. Categorization responses were predicted as a function of continuum step, glottalization, and the interaction of these two fixed effects. In Experiment 2, the glottalization continuum was treated as a continuous variable, and was scaled and centered. Categorization responses were predicted as a function of glottalization continuum, formant continuum, and their interaction. As a control variable, stimulus repetition was also included as a fixed effect, referring to the repetition of a given unique stimulus over the course of the experiment, to account for the possibility of listener bias in categorizing repeated stimuli. This variable was centered and scaled, and ranged from 1–10 in Experiment 1, 1–7 in Experiment 2 and 1–8 in Experiment 3 (due to the different number of repetitions of each unique stimulus). Random effects in each model included random intercepts for participant and random slopes for all fixed effects and the interaction between glottalization and continuum step.
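The variable coding described here can be sketched as follows. The dictionary and function names are hypothetical, and the use of the sample standard deviation (the default of R's `scale()`) is an assumption about how the scaling was carried out.

```python
import statistics

# Response and contrast coding as described in the text.
RESPONSE_CODES = {"ɛ": 1, "æ": 0}
GLOTTAL_CODES = {"glottal_stop": 0.5, "no_glottal_stop": -0.5}


def scale_and_center(values):
    """Subtract the mean and divide by the sample SD (assumed, as in R's scale())."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


# Example: the 10-step formant continuum from Experiment 1.
formant_steps = list(range(1, 11))
scaled_steps = scale_and_center(formant_steps)
```

With contrast coding at ±0.5, the coefficient for glottalization estimates the difference between the two conditions, and the coefficient for the (scaled) continuum is evaluated midway between them.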

3.3. Results and discussion

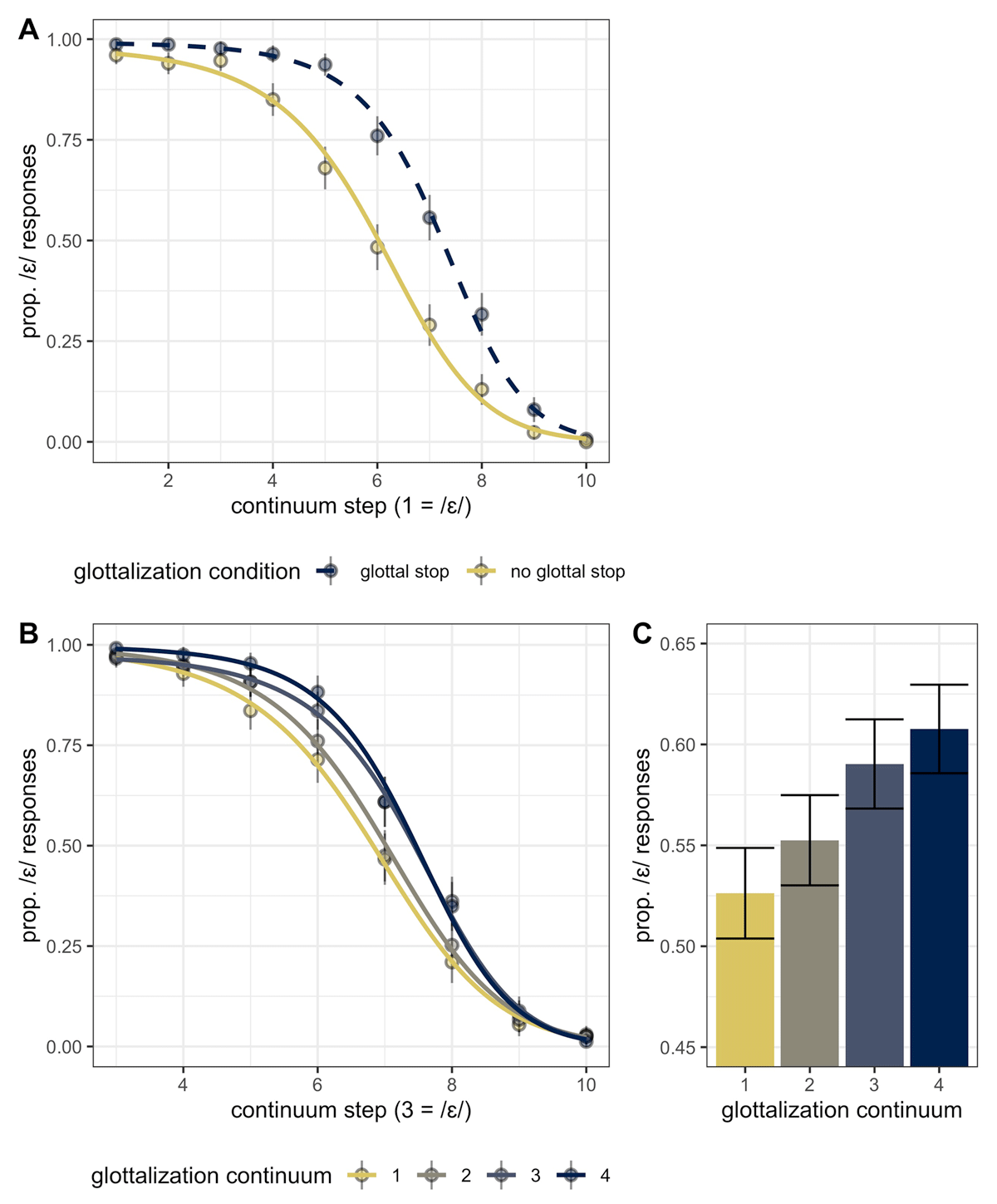

The results of Experiments 1 and 2 are shown together in Figure 2. In both Experiment 1 and Experiment 2 changing formant values along the continuum shifted categorization in the expected way; increasing (scaled) step values along the continuum decreased the log-odds of an /ɛ/ response (Experiment 1: β = –3.42, 95%CrI = [–3.76, –3.10]; pd = 100; Experiment 2: β = –3.06, 95%CrI = [–3.41, –2.73]; pd = 100). Neither experiment showed a credible effect of the stimulus repetition variable (pd = 72 in Experiment 1, pd = 83 in Experiment 2), indicating that there was not a categorization bias introduced by repetitions of unique stimuli.

Categorization results in Experiment 1 (panel A) and 2 (panel B and C). In panels A and B, the x axis shows the formant continuum and the y axis shows listeners’ proportion of /ɛ/ responses at each step, split by glottalization condition. Lines in panel A and B show a logistic fit to the data with points showing empirical means. Error bars show one SE from the data (not model estimates). Panel C shows the effect of the glottalization continuum on the x axis, pooled across formant continuum steps. Step numbering for the formant continuum refers to the values from the original 10 step continuum, with Experiment 2 ranging from step 3 to step 10.

In Experiment 1, the glottal stop condition showed a credible effect in shifting categorization (β = 1.74, 95%CrI = [1.30, 2.17]; pd = 100). As shown in Figure 2A, a preceding glottal stop increased /ɛ/ responses. This result lines up with the predictions outlined in Section 2.1, suggesting that listeners do indeed adjust their perception of the contrast in line with sonority expansion: a vowel preceded by a glottal stop is expected to be realized as a more prominent variant, i.e., lower and backer in the vowel space.

In Experiment 2, the glottalization continuum additionally showed a credible effect in shifting categorization responses (β = 0.40, 95%CrI = [0.30, 0.50]; pd = 100). This is evident in Figure 2B as increasing rightward shifts along the glottalization continuum, with the strongest glottalization cues (step 4) showing the largest difference from step 1 (no glottalization). The results are further shown in Figure 2C, which collapses across all steps of the formant continuum, showing a graded increase in /ɛ/ responses as glottalization cues increase in strength. The effect size (in log-odds) is smaller than in Experiment 1, though direct comparisons are not straightforward because of the way that the variables were coded. In Experiment 2, the estimate is for a one-unit change in the scaled value of glottalization continuum step. Relating the scaled and centered values to actual continuum values and comparing the difference between step 1 and step 4 (weakest to strongest glottalization cues) yields an estimated log-odds change of approximately 1.05, suggesting a slightly smaller effect than the full stop in Experiment 1. This may be expected because glottalization cues, even at their strongest in Experiment 2, are in a sense “weaker” than the full stop in Experiment 1. This effect size estimate is in agreement with an alternative parameterization of the model in which glottalization continuum was treated as a four-level categorical variable, included in the open access repository.7
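The conversion from the scaled coefficient to a step 1 versus step 4 difference can be approximated from the reported values. The sketch below assumes that the four glottalization steps were equally frequent and that scaling used the standard deviation of the trial-level column, which for a large balanced design is close to the population SD of (1, 2, 3, 4). It uses the rounded coefficient, so it only approximates the value given in the text.

```python
import statistics

glottalization_steps = [1, 2, 3, 4]
beta_scaled = 0.40  # rounded estimate reported for Experiment 2

# Assumption: balanced design, so the SD used for scaling is about 1.118.
step_sd = statistics.pstdev(glottalization_steps)

# Distance between step 1 and step 4 in scaled units.
scaled_distance = (4 - 1) / step_sd

# Estimated change in log-odds of an /ɛ/ response from step 1 to step 4.
logodds_change = beta_scaled * scaled_distance
print(round(scaled_distance, 2), round(logodds_change, 2))  # 2.68 1.07
```

The result, about 1.07 with the rounded coefficient, is in the neighborhood of the reported value of approximately 1.05; the exact figure depends on the unrounded estimate and on the details of the scaling.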

There was additionally a credible interaction between continuum and glottal stop condition in both Experiments (Experiment 1: β = –0.77, 95%CrI = [–1.15, –0.41]; pd = 100; Experiment 2: β = –0.26, 95%CrI = [–0.42, –0.12]; pd = 100). The interaction was inspected using the estimate_slopes function from the modelbased package (Makowski, Ben-Shachar, Patil, & Lüdecke, 2020), which estimated the marginal effect of the formant continuum across glottalization conditions; see the online repository for code implementing this assessment of the interactions. In Experiment 1, this assessment showed a larger effect of formant continuum step for the glottal stop condition (β = –3.80, 95%CrI = [–4.23, –3.41]) as compared to the no glottal stop condition (β = –3.03, 95%CrI = [–3.39, –2.70]). The same trend was observed for an increase along the glottalization continuum in Experiment 2, where the most glottalized endpoint showed the largest effect of formant continuum step (β = –3.40, 95%CrI = [–3.86, –3.00]) as compared to the not glottalized endpoint (β = –2.71, 95%CrI = [–3.07, –2.38]), with an increase in the effect along the glottalization continuum. A larger effect of formant continuum step is analogous to a steeper categorization slope, and in this sense the presence of the interaction can be taken to suggest that glottalization leads to sharper categorization of differences in vowel formants.8 This makes sense if we consider glottalization as rendering the target vowel more perceptually prominent, though glottalization also simply acoustically sets the target apart from preceding context, which enhances auditory processing as noted above (Delgutte, 1980; Delgutte & Kiang, 1984).
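The conditional slopes reported here can be approximately recovered from the point estimates alone, since in a model with an interaction the slope of the formant continuum at a given value of glottalization is the continuum coefficient plus the interaction coefficient times that value. The Python sketch below is a back-of-the-envelope check rather than the modelbased computation, which works over the full posterior; the function name is hypothetical, and the scaled endpoints for Experiment 2 assume a balanced design.

```python
import statistics


def conditional_slope(beta_continuum, beta_interaction, glottalization_value):
    """Slope of the formant continuum at a given coded value of glottalization."""
    return beta_continuum + beta_interaction * glottalization_value


# Experiment 1: glottalization contrast coded as +0.5 / -0.5.
exp1_glottal = conditional_slope(-3.42, -0.77, 0.5)
exp1_no_glottal = conditional_slope(-3.42, -0.77, -0.5)

# Experiment 2: endpoints of the scaled glottalization continuum
# (assumption: balanced design, SD of about 1.118).
step_sd = statistics.pstdev([1, 2, 3, 4])
step4_scaled = (4 - 2.5) / step_sd
step1_scaled = (1 - 2.5) / step_sd
exp2_step4 = conditional_slope(-3.06, -0.26, step4_scaled)
exp2_step1 = conditional_slope(-3.06, -0.26, step1_scaled)

print(round(exp1_glottal, 2), round(exp1_no_glottal, 2))
print(round(exp2_step4, 2), round(exp2_step1, 2))
```

These values fall within rounding distance of the marginal estimates reported above (–3.80 and –3.03 in Experiment 1; –3.40 and –2.71 in Experiment 2).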

We can now consider the results of Experiment 1 and 2 in relation to the aforementioned relation between vowel height and vowel-initial glottalization, whereby a general cross-linguistic pattern is that lower vowels favor glottalization (e.g., Brunner & Zygis, 2011). On the one hand, this relationship could be treated as a statistical pattern by listeners: Glottalization could lead to the expectation of a lower vowel phoneme (in the present study, /æ/). The results indicate that this is clearly not the case, as glottalization favors perception of /ɛ/. The fact that a lower vowel percept is not favored by preceding glottalization comports with the findings that there is not a predictive relationship between phonological/categorical vowel height and the production of vowel initial glottalization (Garellek, 2013; Umeda, 1978), such that listeners do not use preceding glottalization to identify the vowel as being the lower vowel category /æ/. What the results indicate instead is that vowel-initial glottalization leads listeners to re-calibrate such that the acoustic space which is mapped to a given vowel category is lower and backer (in F1/F2), in line with sonority expansion. This relation to (acoustic) vowel height is a restatement of the predicted prominence effect, though future work will benefit from looking at other vowels, including those which are not realized as acoustically lower/backer under prominence (e.g., American English /i/, Cho, 2005).

The data from Experiments 1 and 2 thus supports the prediction that vowel-initial glottalization serves a prominence-marking function for listeners. Notably, we can see that different realizations of glottalization engender similar perceptual effects, with a clear relationship between strength of glottalization and the magnitude of the perceptual shifts evidenced by listeners. The effect seems to change fairly continuously as a function of the glottalization continuum, addressing “whether [glottalization] is interpreted along a continuum or as a contrastive binary feature” (Redi & Shattuck-Hufnagel, 2001, p. 427).

4. Experiment 3

Given the effect of glottalization on categorization in both Experiments 1 and 2, Experiment 3 examined the timecourse of its influence in online processing in a visual world eyetracking task.

4.1. Materials

Experiment 3 made use of the same materials as Experiment 1, though it used a subset of the 10 step continuum. The method by which the Experiment 3 stimuli were selected was the same as that used in Mitterer and Reinisch (2013). The overall interpolated categorization function for Experiment 1 was inspected. The point at which the interpolated function crossed 50% (i.e., the most ambiguous region in the continuum) was identified. The three steps on each side of this crossover point were used in Experiment 3. This led to the selection of steps 4–9 from Experiment 1. There were accordingly 12 unique stimuli used (6 continuum steps × 2 prominence conditions).
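The selection procedure can be sketched as follows. The response proportions in the example are invented for illustration (they are not the Experiment 1 data), the function names are hypothetical, and simple linear interpolation between adjacent steps stands in for whatever interpolation was used.

```python
def crossover_point(steps, prop_e):
    """Step value at which the proportion of /ɛ/ responses crosses 0.5,
    using linear interpolation between adjacent continuum steps."""
    pairs = list(zip(steps, prop_e))
    for (s0, p0), (s1, p1) in zip(pairs, pairs[1:]):
        if p0 >= 0.5 > p1:
            return s0 + (p0 - 0.5) * (s1 - s0) / (p0 - p1)
    raise ValueError("categorization function never crosses 0.5")


def select_steps(steps, crossover, n_each_side=3):
    """Pick the n steps immediately below and above the crossover point."""
    below = [s for s in steps if s < crossover][-n_each_side:]
    above = [s for s in steps if s > crossover][:n_each_side]
    return below + above


steps = list(range(1, 11))
# Hypothetical proportions of /ɛ/ responses, invented for this example.
prop_e = [0.99, 0.98, 0.97, 0.93, 0.82, 0.62, 0.38, 0.18, 0.07, 0.03]

crossover = crossover_point(steps, prop_e)
selected = select_steps(steps, crossover)
print(crossover, selected)  # 6.5 [4, 5, 6, 7, 8, 9]
```

With a crossover between steps 6 and 7, as in this toy example, the procedure yields steps 4–9, the same range used in Experiment 3.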

4.2. Participants and procedure

Forty participants, none of whom had taken part in Experiment 1 or 2, were recruited from the same population as previous experiments to participate in Experiment 3. Testing was carried out in a sound-attenuated room in the UCLA Phonetics Lab.

Participants were seated in front of an arm-mounted SR Eyelink 1000 (SR Research, Mississauga, Canada) set to track the left eye9 using pupil tracking and corneal reflection at a sampling rate of 500 Hz, and set to record remotely (i.e., without a head mount) at a distance of approximately 550 mm. At the start of the experiment, participants’ gaze was calibrated with a 5-point calibration procedure.

Stimuli were presented binaurally via a PELTOR™ 3M™ listen-only headset. The visual display was presented on a 1920 × 1080 ASUS HDMI monitor. In each trial, participants were presented with a black fixation cross (60px by 60px) in the center of the monitor. The target words themselves were displayed in 60pt black Arial font, with one word centered in the left half of the monitor, and the other in the right half of the monitor. The side of the screen on which the words appeared was counterbalanced across participants, though for a given participant the same word always appeared on the same side of the screen, as in Kingston, Levy, Rysling, and Staub (2016) and Reinisch and Sjerps (2013). Two interest areas (300px by 150px) were defined around the target words. These were slightly larger than the printed words, to ensure that looks in the vicinity of the target words were also recorded, following Chong and Garellek (2018) and Kingston et al. (2016).

The onset of the audio stimulus was look-contingent, such that stimuli did not begin to play until a look to the fixation cross had been registered. This was done to ensure that participants were not already looking at a target word at the onset of the stimulus. As soon as a look to the fixation cross was registered, the audio stimulus began, and the target words appeared simultaneously with the onset of the audio. The trial ended after the participant provided a click response. The next trial began automatically after a click response was registered. At the start of each new trial, the cursor position was re-centered on the computer screen, following Kingston et al. (2016). Trials were separated by an interval of one second. Eye movements were recorded from the first appearance of the fixation cross until the participant provided a click response and the next trial began.

There were four practice trials, with each continuum endpoint being presented in each prominence condition once. Following this, there were a total of 96 test trials; each of 12 unique stimuli was presented a total of eight times, with stimulus presentation completely randomized. The experiment, including calibration, took approximately 20 minutes to complete.

4.3. Timecourse predictions

Given the variables under consideration and the previous accounts of prosody and prominence in processing described in Section 1.3, we can operationalize some predictions for Experiment 3, which will motivate the analyses described below. First, a general expectation is that vowel-internal formant cues should exhibit a rapid influence in online processing as shown, for example, by Reinisch and Sjerps (2013). It takes approximately 200 milliseconds to program a saccadic eye movement (e.g., Matin, Shao, & Boff, 1993), meaning that we expect (at least) a 200 ms lag between the time that a given stimulus dimension is presented to listeners and the time it influences their looking behavior. Given this, we can expect to see an influence of vowel acoustics (modeled with the continuum variable) in online processing as early as 200 ms from the onset of the target vowel, a rapid effect.

Taking this timing as a baseline for what constitutes a rapid effect, consider the timecourse predictions for vowel-initial glottalization from both the Prosodic Analysis model and MAPP model.

- Prosodic Analysis model:

  1. Prediction 1: Timing of effects. If a glottal stop is processed as contributing only to a prosodic parse of the signal which is integrated later in word recognition following Cho et al. (2007), it should show a later-stage effect in line with Kim, Mitterer, and Cho (2018) and Mitterer et al. (2019). Given the expectation that formant information is processed rapidly, this predicts an asynchrony between the influence of these two effects, with formant cues showing an earlier influence than glottalization.

  2. Prediction 2: Interaction between effects. Relatedly, if formant cues are used only to activate lexical hypotheses (independent of prosodic information), there should be no interaction between formant cues and glottalization, most crucially early in processing. This predicts that early processing of formant information will not vary across glottalization conditions.

- MAPP model:

  1. Prediction 1: Timing of effects. Following the MAPP model, if glottalization is a prominence effect that modulates (early) sublexical processing, we can predict that its influence will be simultaneous with the influence of vowel formants.

  2. Prediction 2: Interaction between effects. Another prediction from the MAPP model is that processing of formant information will interact with glottalization such that formant cues will be processed differently depending on glottalization. This predicts that (early) processing of formant information will vary across glottalization conditions.

Importantly, as described in Section 2.2 the glottalization manipulation only preceded the target vowel in time in the stimuli, and the target itself is acoustically identical across glottalization conditions.

We now turn to the results, first examining the categorization responses and a preliminary look at the eye movement data. Following this, more details on the eyetracking analysis and the eyetracking results are presented in Section 4.5.

4.4. Results

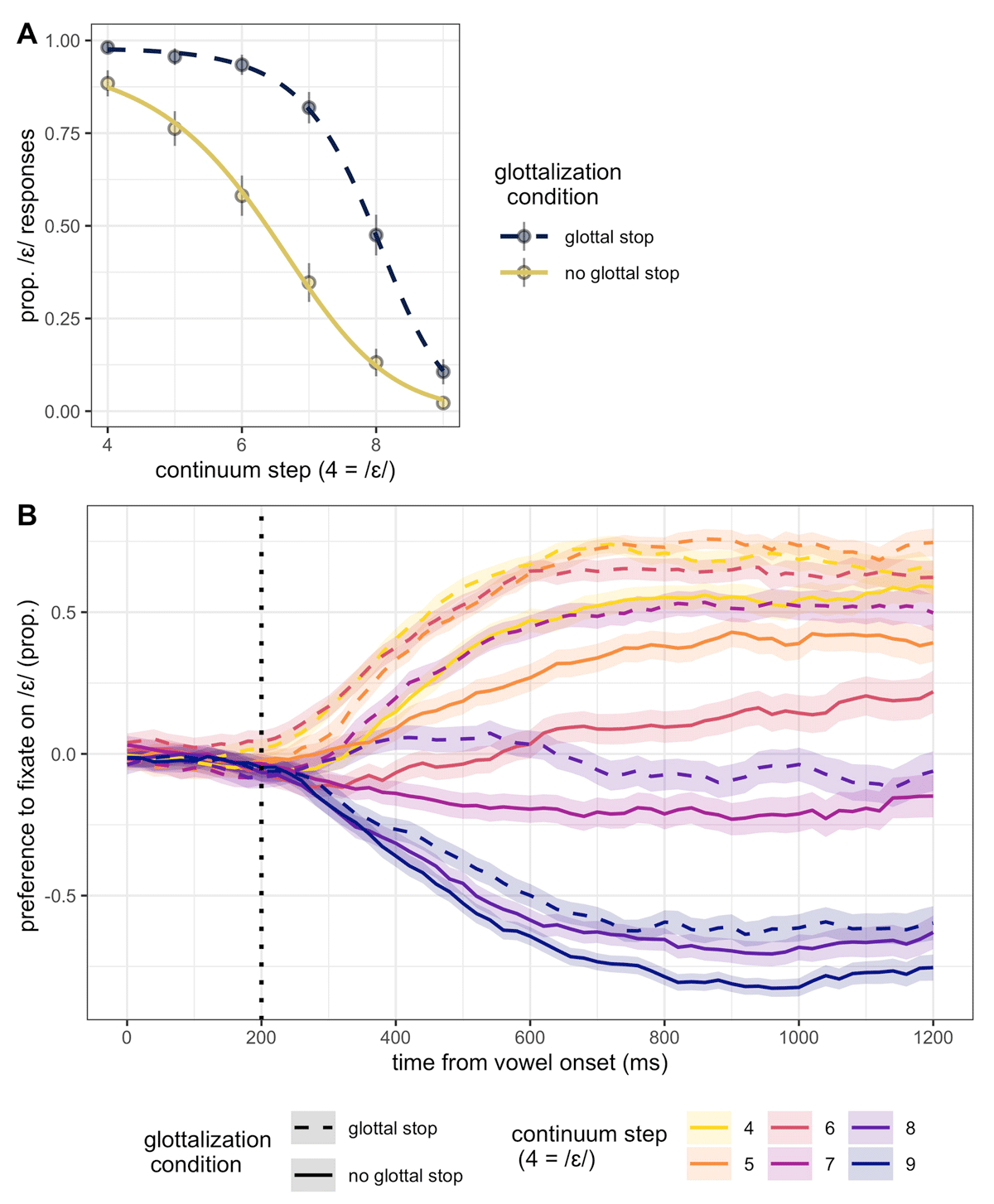

As shown in Figure 3 panel A, categorization results from Experiment 3 essentially replicated Experiment 1. Formant cues from the continuum exerted a reliable influence in categorization (β = –2.64, 95%CrI = [–3.01, –2.28]; pd = 100), and we can see the categorization function is overall fairly well-anchored. The glottal stop effect from Experiment 1 was also replicated, with the presence of a preceding glottal stop increasing listeners’ /ɛ/ responses (β = 2.51, 95%CrI = [2.00, 3.04]; pd = 100). An overall bias towards /ɛ/ is also evident in the tendency of listeners to categorize the target as /ɛ/, especially when it is preceded by a glottal stop. As with the previous Experiments, the control variable for stimulus repetition did not show a credible effect (pd = 88). There was additionally a credible interaction between continuum and glottal stop condition, mirroring what was seen in Experiment 1 (β = –0.43, 95%CrI = [–0.78, –0.11]; pd = 99). Comparison of the marginal effect of formant continuum across glottalization conditions also aligns with what was seen in Experiment 1 in showing a larger effect in the glottal stop condition (β = –2.85, 95%CrI = [–3.29, –2.46]) as compared to the no glottal stop condition (β = –2.42, 95%CrI = [–2.81, –2.05]).

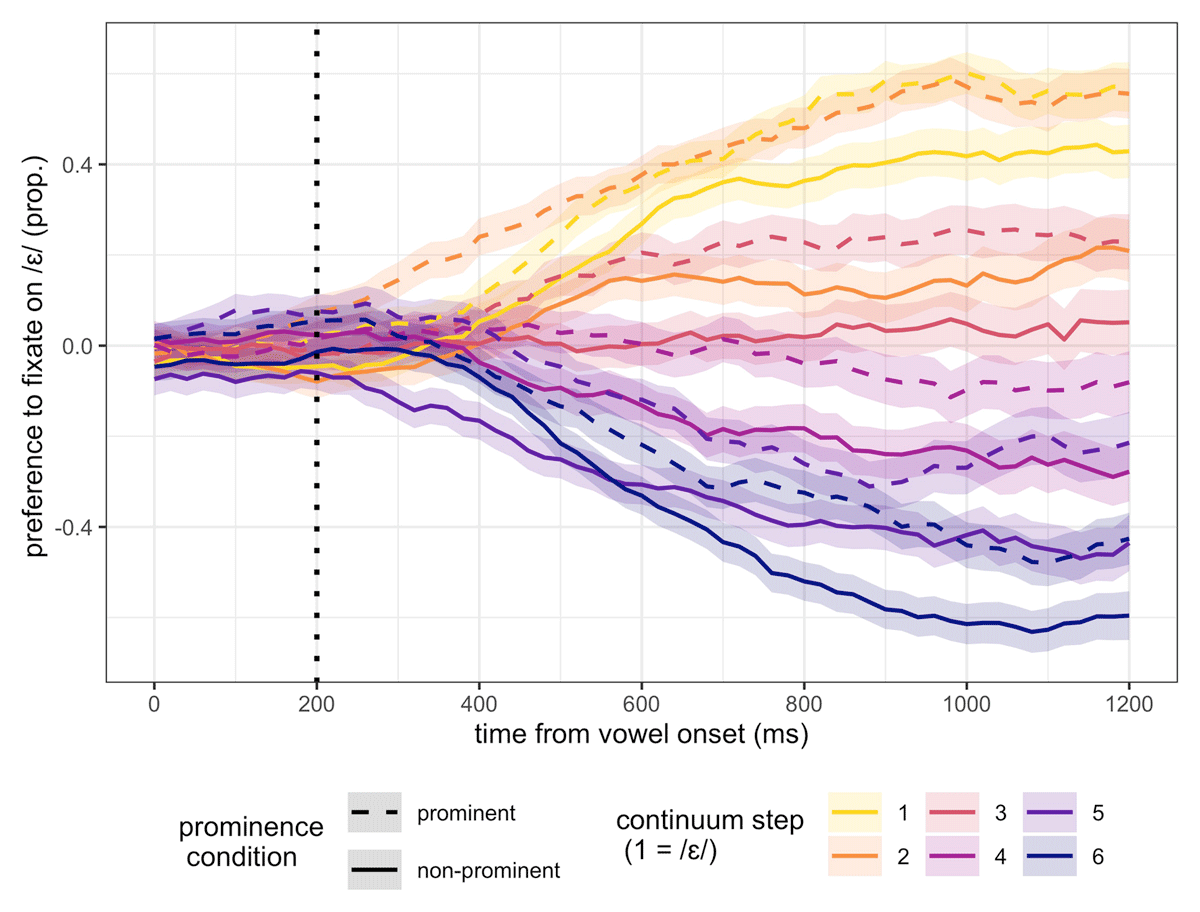

Categorization results in Experiment 3 (panel A), and eye movement data in Experiment 3 (panel B; see text). Error bars and ribbons show one SE, computed from the data. The vertical dotted line at 200 ms indicates the earliest time at which information in the target vowel is expected to impact fixations. Step numbering refers to the values from the original 10 step continuum used in Experiment 1.

Figure 3 panel B shows the eye movement data from the experiment, plotting eye movement trajectories as a function of continuum step and glottalization condition. The measure plotted on the y axis is listeners’ preference to fixate on /ɛ/, computed as the proportion of looks to /ɛ/ minus looks to /æ/ in each 20 ms time bin. Here a value of zero indicates no preference, a positive value indicates a preference to fixate on /ɛ/, and a negative value indicates a preference to fixate on /æ/. Note that the time which is marked as zero on the x axis is the precise point in the stimulus (in either glottalization condition) where there begins to be any difference based on vowel continuum acoustics, corresponding to the positioning of the dashed line in Figure 1. In other words, the stimuli up until this time will be different based on the glottalization manipulation preceding the target vowel, but there are not yet any formant cues to vowel identity at this point. We can see the effect of continuum step in the separation of lines based on coloration, with more /ɛ/-like continuum acoustics leading to a preference to fixate on /ɛ/. This separation, or fanning out, of trajectories appears to occur at roughly 200 ms from the onset of the vowel. The effect of vowel-initial glottalization is also evident in the separation we see based on line type: in line with the categorization data, a preceding glottal stop (dashed lines) facilitates looks to /ɛ/, an online effect corresponding to the categorization results we have seen thus far. We can also note that there is an /ɛ/-bias in eye movements, as also suggested by the categorization data, with steps 1–4 showing a strong /ɛ/ preference. Qualitatively, it thus appears that vowel-internal acoustic cues and preceding glottalization are both shaping listeners’ perception of the target word.

4.5. Eyetracking analyses and results

Two complementary analyses of the eyetracking data are presented here. The dependent measure in each analysis was a “preference measure,” which offers a normalized measure of listeners’ propensity to fixate on a target (cf. Reinisch & Sjerps, 2013). This measure is computed as log-transformed looks to “ebb” minus log-transformed looks to “ab,” using the empirical logit (Elog) transformation given in Barr (2008).10 This measure was computed within a given time bin in a trial, the size of which was different in the two different analyses, described below. The analysis window of 0–1200 ms from the onset of the target vowel in the stimulus is used.
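A minimal sketch of such a preference measure is given below. It assumes the common form of the empirical logit, ln((y + 0.5) / (N − y + 0.5)), where y is the number of eyetracker samples on a target within a bin and N is the total number of samples in that bin; the exact computation used in the analysis is specified in the accompanying note and repository and may differ in detail. The function names and sample counts are hypothetical.

```python
import math


def empirical_logit(n_looks, n_samples):
    """Empirical logit of looks to one target within a time bin (assumed form)."""
    return math.log((n_looks + 0.5) / (n_samples - n_looks + 0.5))


def preference(n_looks_ebb, n_looks_ab, n_samples):
    """Elog looks to "ebb" minus Elog looks to "ab"; positive means an "ebb" preference."""
    return empirical_logit(n_looks_ebb, n_samples) - empirical_logit(n_looks_ab, n_samples)


# At a 500 Hz sampling rate, a 20 ms bin contains 10 samples.
SAMPLES_PER_BIN = 10

pref_ebb_bias = preference(7, 2, SAMPLES_PER_BIN)  # more looks to "ebb"
pref_neutral = preference(5, 5, SAMPLES_PER_BIN)   # equal looks to both words
```

The 0.5 adjustment keeps the measure finite when a bin contains no looks, or only looks, to a given target.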

In the first eyetracking analysis, eye movement data from Experiment 3 was analyzed by a Generalized Additive Mixed Model (GAMM) using the R packages mgcv (Wood, 2006) and itsadug (van Rij, Wieling, Baayen, & van Rijn, 2016). GAMMs have recently been suggested to offer an appealing alternative to moving window analyses in that they allow for an encoding of the temporal contingency across time bins, and further allow for modeling non-linearity in the data (see Zahner, Kutscheid, & Braun, 2019 for a discussion of the advantages of GAMMs for eyetracking data). The data was sampled at 20 ms time bins for the GAMM analysis (as in Steffman, 2021a; Zahner et al., 2019). The GAMM was an AR1 error model, fit using the technique described in, e.g., Sóskuthy (2017), to reduce residual autocorrelation. The rho parameter was specified in the model based on a previous run of the same model without the AR1 component (see the open access repository for code implementing this). The number of knots in some terms was increased (to k = 20) following inspection with the gam.check function, after which the number of knots was adequate as determined by that function.
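One common way of choosing rho for an AR1 error model is to take the lag-1 autocorrelation of the residuals from the model fit without the AR1 component. The Python sketch below illustrates that estimate on a simulated series; it is an assumption that this is how rho was derived here (the repository code is the authority), and the function names, simulated data, and seed are hypothetical.

```python
import random


def lag1_autocorrelation(residuals):
    """Lag-1 autocorrelation of a residual series."""
    n = len(residuals)
    mean = sum(residuals) / n
    dev = [r - mean for r in residuals]
    numerator = sum(a * b for a, b in zip(dev, dev[1:]))
    denominator = sum(d * d for d in dev)
    return numerator / denominator


def simulate_ar1(phi, n, seed=1):
    """Simulate an AR1 series, standing in for autocorrelated model residuals."""
    rng = random.Random(seed)
    series, previous = [], 0.0
    for _ in range(n):
        previous = phi * previous + rng.gauss(0.0, 1.0)
        series.append(previous)
    return series


residuals = simulate_ar1(phi=0.8, n=5000)
rho_hat = lag1_autocorrelation(residuals)
```

In an eyetracking data set the residuals form many separate time series (one per trial), so in practice the start of each series must be marked for the model so that autocorrelation is not carried across trial boundaries.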

The model was fit with parametric terms for continuum step (scaled and centered), glottalization condition, and the interaction between these fixed effects. The control variable of stimulus repetition was additionally included. Parametric terms in the GAMM are analogous to fixed effects in mixed effects models and capture whether listeners’ fixation preference in the analysis window as a whole varies as a function of the predictors. Smooth terms in GAMMs are additionally fit to model changes over time, and (potentially) non-linear patterns in the data. The model was fit to capture the interaction between continuum acoustics and time using a non-linear tensor-product interaction term, which allows us to examine how, over time, vowel acoustics mediate listeners’ preference to fixate on a given target. Crucially, this term was interacted with glottalization condition as a “by” term in the tensor-product term, modeling the potential interaction between glottalization and the influence of continuum acoustics over time. As a control variable, an additional tensor-product term was fit for (scaled) stimulus repetition over time, modeling how the dependent variable changed over time as a function of repetition. This term showed no systematic effect of repetition on looking behavior (in line with previous categorization analyses), so it will not be discussed further, though a plot of the predictions from the model for the influence of stimulus repetition is included on the open access repository. Random effects in the model were specified using the reference-difference smooth method described in Sóskuthy (2021), with factor smooths for participant, and for participant by glottal stop condition (coded as an ordered factor). In both factor smooth terms, the m parameter was set to 1, following Baayen, van Rij, de Cat, and Wood (2018) and Sóskuthy (2021).
The numerical GAMM output is included in the appendix, though the terms in the model as it was coded are generally not useful for interpreting the timecourse questions of interest here (Nixon, van Rij, Mok, Baayen, & Chen, 2016; Zahner et al., 2019).

The model described above will be compared to one which did not include a non-linear interaction term for glottalization condition with continuum step and time. In this model, glottalization condition was not included as a “by” term in the tensor-product interaction, but instead in a separate smooth modeling the effects of glottalization over time. This latter model thus captures an independent effect of glottalization, but crucially, not an interaction with continuum step. The fit of the two models will be compared in light of the predictions described in Section 4.3, testing prediction 2 from each model. The code for both models and model comparison is contained in full on the open-access repository.

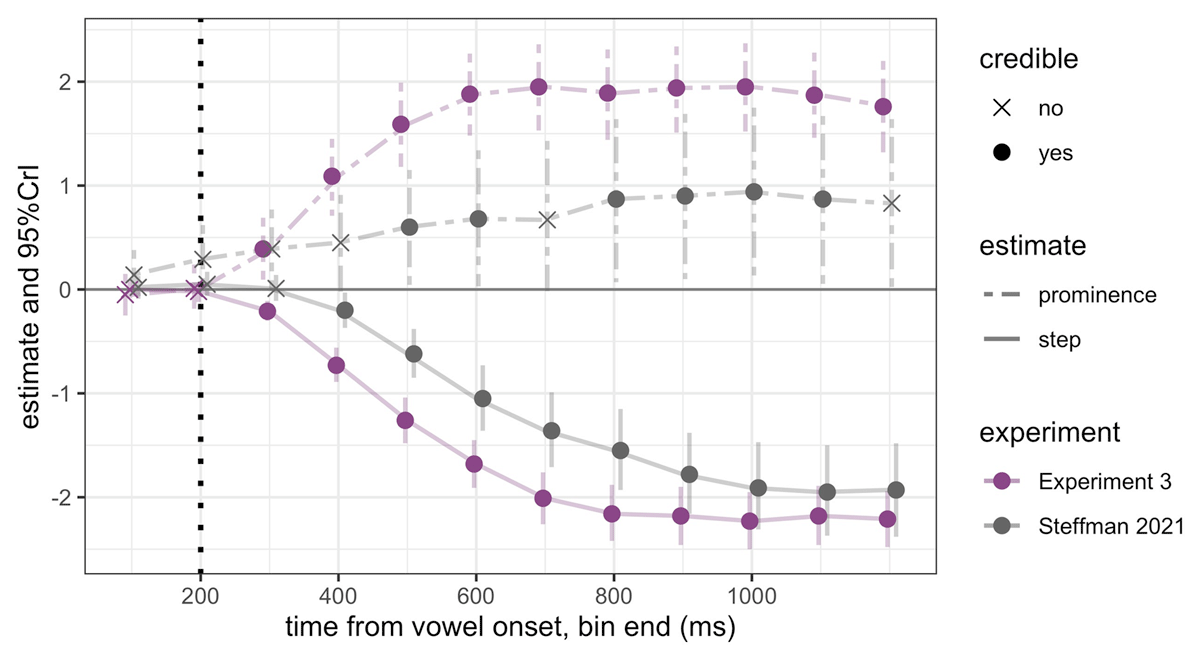

The second analysis presented here is a traditional moving window analysis, which assesses how vowel-internal formant cues influence eye movements in relation to the glottal stop manipulation. The moving window analysis serves the purpose of comparing across Experiment 3 and Steffman (2021a) with a focus on the relative timing of the influence of continuum step (formants) and the prominence manipulation. Notably, a GAMM can be used for this purpose too; however, in the GAMM, numerical time estimates for the influence of continuum step need to be computed with difference smooths on a pairwise basis, i.e., the timing of an effect between step 1 and step 2, step 1 and step 3, and so on. The moving window analysis thus offers a more global picture of the timing of continuum step. This additional analysis is accordingly carried out to provide converging evidence for the effects in Experiment 3, and to further offer a compact comparison with data from Steffman (2021a). Models were fit for each experiment separately, because the continuum acoustics and the nature of the prominence effects were different across them. Despite these substantial differences, the relative timing of the continuum effect and prominence effect can be considered comparable, given the predictions in Section 4.3. In other words, because the prosodic analysis model predicts a two-stage influence of formants and then prominence, we can test this prediction in both Experiment 3 and the data from Steffman (2021a), and evaluate the relative timing of the effects across experiments (prediction 1 from each model in Section 4.3), even though the formant acoustics and prominence cues are not directly comparable to one another. More details from Steffman (2021a) are given in Section 4.5.2, following the presentation of the GAMM results of Experiment 3.

Time bins of 100 ms were used in the moving window analysis, with the preference measure computed at 100 ms intervals across a trial. A 100 ms window was selected because it provides a fairly fine-grained temporal assessment while also granting a reasonable amount of independence from bin to bin (Barr, 2008; Mitterer & Reinisch, 2013); non-independence across bins is a known issue in moving window analyses. The dependent measure was predicted as a function of (scaled) formant continuum step, glottalization context (coded as in the categorization models), and the interaction of these two fixed effects in each time bin. Stimulus repetition was again included as a fixed effect. Random effects were random intercepts for participant and by-participant random slopes for the fixed effects and interaction term. These models were run in brms, as were the models of the categorization data. The models will be assessed in terms of when, over binned time, each predictor has a robust effect on listeners’ fixations, with a focus on the relative timing of continuum step and prominence.
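The binning step that precedes the moving window models can be sketched as follows. The sample format (a time stamp in ms paired with the fixated target), the half-open treatment of the window end, and all names are assumptions made for illustration rather than details of the study's pipeline.

```python
from collections import defaultdict

BIN_MS = 100  # bin width used in the moving window analysis


def bin_looks(samples, bin_ms=BIN_MS, window=(0, 1200)):
    """Count looks to each target within consecutive time bins.

    samples: iterable of (time_ms, target), where target is "ebb", "ab",
             or None (a look elsewhere); this format is hypothetical.
    Returns {bin_start_ms: {"ebb": n, "ab": n, "total": n}}.
    """
    start, end = window
    counts = defaultdict(lambda: {"ebb": 0, "ab": 0, "total": 0})
    for time_ms, target in samples:
        if not start <= time_ms < end:
            continue  # drop samples outside the analysis window
        bin_start = start + ((time_ms - start) // bin_ms) * bin_ms
        counts[bin_start]["total"] += 1
        if target in ("ebb", "ab"):
            counts[bin_start][target] += 1
    return dict(counts)


# Hypothetical samples from one trial, time-locked to target vowel onset.
trial = [(0, "ebb"), (20, "ebb"), (40, None), (110, "ab"), (1250, "ab")]
print(bin_looks(trial))
```

The per-bin counts returned here are the quantities from which a per-bin preference measure would then be computed and entered into one model per time bin.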

4.5.1. GAMM results

The GAMM modeling analysis focused on the relationship between glottalization and formants in jointly shaping listeners’ processing of the target word, testing the predictions in Section 4.3. To test if including an interaction between continuum step and glottalization (in the tensor product term of the model) improved model fit, the GAMM with this interaction was compared to one in which glottalization condition was in a separate smooth term over time (described above), using the compareML function in itsadug (van Rij et al., 2016). A chi-square test on the ML scores indicated that the model containing the interaction between glottalization and continuum step is a significantly better model than the one lacking the interaction (χ²(4) = 57.14, p < 0.001). This suggests that the way formant cues are processed interacts with glottalization condition. The nature of this interaction is explored below.
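As a quick check on the size of the reported p value, the sketch below evaluates the upper tail of a chi-square distribution using the closed form available for even degrees of freedom. It simply treats the reported value as a chi-square statistic on 4 degrees of freedom; how the model comparison function derives that statistic from the ML scores is not reproduced here, and the function name is hypothetical.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable for even df.

    Uses the closed form exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("df must be a positive even integer")
    if x < 0:
        raise ValueError("x must be non-negative")
    half = x / 2.0
    term = 1.0   # the k = 0 term of the sum
    total = 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total


p_value = chi2_sf_even_df(57.14, 4)
print(p_value < 0.001)  # True: consistent with the reported p < 0.001
```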

First, we can note that the parametric terms in the best fitting GAMM model confirm an influence of vowel formants and glottalization in the analysis window as a whole (p < 0.001 for both), as would be expected given the observations made of Figure 3. Further, aligning with all categorization analyses, the repetition control variable did not have a significant effect on eye movements (p = 0.72).

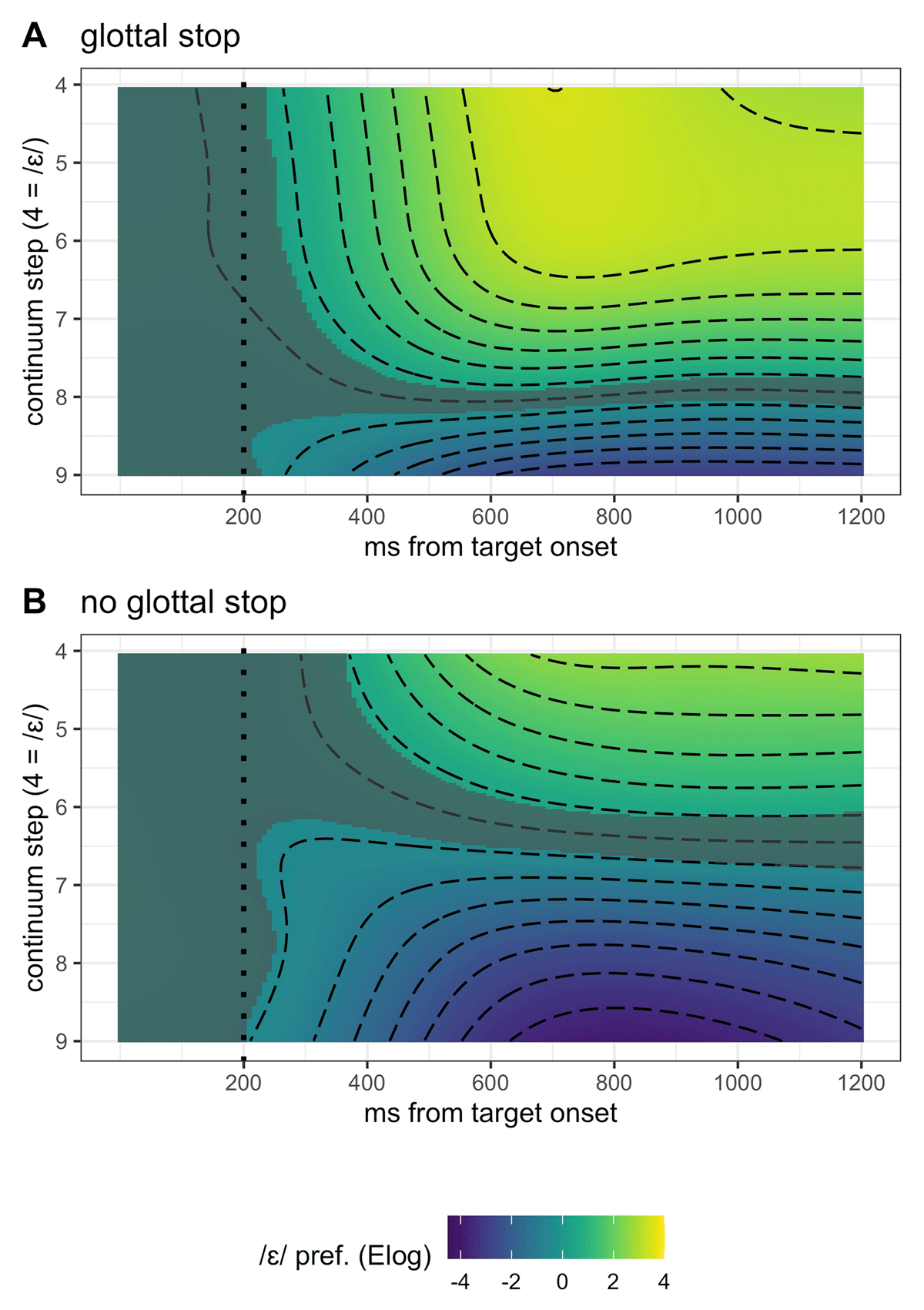

To assess the relationship between continuum step, glottal stop condition, and time, three-dimensional topographic surface plots are presented in Figure 4. These plots show the model fit, representing the effect of continuum step (as a continuous variable on the y axis) over time (on the x axis). The dependent variable (listeners’ Elog-transformed preference to fixate on the /ɛ/ target) is represented on a gradient color scale. The two panels represent model fits for each glottalization condition, panel A being when the target is preceded by a glottal stop. A value of zero (in the middle of the color scale) indicates no preference, while a positive value (closer to yellow on the color scale) indicates a preference for the /ɛ/ target. A negative value (closer to purple on the color scale) indicates a preference for /æ/. Shading on the surface shows locations where listeners’ preference is not significantly different from zero, i.e., where the 95% CI for the model estimate includes zero. Note that listeners do not show a preference early in the analysis window, with shading on all of the surface prior to approximately 200 ms. The fact that shading occupies the first 200 ms of the analysis window indicates that listeners are not using information that precedes the target vowel to predict target vowel identity independently. If preceding information (i.e., the presence of a glottal stop) were systematically used to predict vowel identity directly, shading on the surface would disappear prior to 200 ms from the vowel onset (if observed, this sort of predictive effect would suggest an issue with the experimental design, in the sense that the task is too predictable and unlike more naturalistic speech perception).

Figure 4: Surface plots showing the GAMM model fit in Experiment 3, with continuum step on the y axis, time on the x axis, and listeners’ log-transformed fixation preference indexed by coloration. Gray shading indicates places on the surface where listeners have no preference for either target. The vertical dotted line at 200 ms indicates the earliest time at which information in the target vowel is expected to impact fixations. Step numbering refers to the values from the original 10 step continuum used in Experiment 1.

As time progresses, listeners develop graded preferences based on continuum step. At the end of the analysis window, there is a range of preferences: A stronger /ɛ/ preference at step 4 on the continuum, and a stronger /æ/ preference at step 9. Note too that a portion in the middle region of the continuum never attains a significant preference in either panel. That is, the model finds that the ambiguous region of the continuum remains ambiguous even at the end of the analysis window. This is shown by a narrow band of the shaded area persisting until the end of the analysis window. With this in mind, we now can assess the impact of a glottal stop on listeners’ processing of the continuum over time. The effect of the glottal stop is evident in observing (1) the coloration of panels A and B, and (2) the shape and position of the shaded area showing areas on the surface for which listeners did not have a preference for either target. In terms of coloration, note that the two panels share a color scale: The same color on each panel reflects the same degree of /ɛ/ preference. We can see that the panels occupy different regions of the color scale overall, with the glottal stop condition showing a stronger /ɛ/ preference (more yellow on the plot), and the no glottal stop condition showing a stronger /æ/ preference (more purple on the plot). In other words, acoustically identical continuum steps are perceived as more like one target or the other, as a function of glottalization. Importantly, these differences are evident as early as listeners show any preference, that is, as soon as shading on the surfaces disappears.11

Additionally, the surface plots show that glottal stop condition also influences which stimuli are perceived as ambiguous by listeners. This is apparent in the vertical positioning of the shaded region, particularly the narrow band of that region that persists throughout the analysis window. The regions along the continuum which show no preference in looks vary based on glottal stop condition, starting early (roughly 200 ms from target onset) and persisting throughout the analysis window. This pattern is not only reflected in the narrow band of the shaded region, but also in the surrounding shading which extends around that region. This shading shows a relative delay in processing formant cues in the region of steps 7–9 in the glottal stop condition, and steps 4–6 in the no glottal stop condition, whereby regions closer to the ambiguous steps show slower recognition of a vowel (i.e., a later significant fixation preference). Critically, the location of these regions is affected by the glottalization manipulation. This pattern can also be framed in terms of expectations: Pre-target glottalization cues favor the recognition of a particular vowel, slowing down recognition of the alternative (though notably, this pattern does not constitute a predictive effect, in the sense that only at 200 ms from target onset do listeners begin to show a preference). Inspection of the surface plots therefore supports a difference in early formant processing across conditions: Differences across conditions are evident at the earliest moments, and glottalization modulates, early in time, both which vowel acoustics are ambiguous to listeners and the speed at which a particular vowel is recognized.

To complement the visualization of the surface plots with another assessment of the glottalization effect, the difference smooth between glottalization conditions was computed, which offers a time estimate for the overall effect of glottalization (with scaled continuum step and repetition variables set to their median by default). A difference smooth models the difference between two conditions over time. When the difference becomes reliably different from zero (with the 95% CI for the smooth excluding zero), we can take this to indicate when (in time) an effect is reliable (see the open access repository for the difference smooth code and visualization). The difference smooth shows that the effect of glottalization condition becomes significant at 242 ms from the onset of the target vowel and remains so until the end of the analysis window, a further indication that its influence is early in time.
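The logic of reading an onset time off a difference smooth can be sketched as follows: find the earliest time point from which the 95% CI excludes zero continuously through the end of the window. The input format and all values below are hypothetical; the actual difference smooth and its visualization are on the repository.

```python
def significance_onset(times, estimates, ci_halfwidths):
    """Earliest time from which the CI excludes zero through the final point.

    times, estimates, ci_halfwidths: equal-length, time-ordered sequences
    giving the estimated difference and the half-width of its 95% CI.
    Returns None if the CI includes zero at the final time point.
    """
    onset = None
    for t, est, half in zip(times, estimates, ci_halfwidths):
        excludes_zero = (est - half > 0) or (est + half < 0)
        if excludes_zero:
            if onset is None:
                onset = t  # start of a candidate significant stretch
        else:
            onset = None   # stretch interrupted: discard the candidate
    return onset


# Hypothetical difference smooth sampled every 100 ms.
times = [0, 100, 200, 300, 400]
estimates = [0.0, 0.1, 0.5, 0.8, 0.9]
halfwidths = [0.3, 0.3, 0.3, 0.3, 0.3]
print(significance_onset(times, estimates, halfwidths))  # 200
```

Resetting the candidate onset whenever the CI includes zero ensures that a brief early excursion is not reported as the start of a sustained effect.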

In summary, the GAMM analysis supports predictions 1 and 2 of the MAPP model: Glottalization interacts with the processing of formant information early in time as shown by the surface plots, and shows an early overall influence as indicated by the difference smooth (242 ms from vowel onset).

4.5.2. Comparison to Steffman 2021