1. Introduction

With increased numbers of online speech perception studies, the fields of phonetics and phonology have converged on some aspects of methodology. One of the design components that many online perception studies include is a check for whether participants are wearing headphones (e.g., Bieber & Gordon-Salant, 2022; Geller et al., 2021; Giovannone & Theodore, 2021; McPherson, Grace, & McDermott, 2022; Merritt & Bent, 2022; Saltzman & Myers, 2021; A. C. L. Yu, 2022). However, it has not yet been established how these headphone checks affect different types of experiments or what the implications are for interpreting results. Understanding headphone checks is important for interpreting and comparing data collected in different ways, as well as for deciding whether they should be used as criteria in evaluating research methods.

Online studies are valuable in part because of convenience; they are useful for researchers working with restrictions on in-person work, allow rapid collection of data, and make large sample sizes more feasible than they are for in-person studies. They also provide access to a diverse population of participants (Berinsky, Huber, & Lenz, 2012; Casler, Bickel, & Hackett, 2013; Shapiro, Chandler, & Mueller, 2013). Within linguistics, accessing a more diverse population is particularly relevant in facilitating research that depends on demographic groups that cannot easily come to campus, or languages and language varieties which are not spoken where the researcher is located.

Given the importance of online research, it is important to establish well-motivated best practices and to understand the effects of methodological choices. This paper focuses on headphone checks, but also touches on additional factors that can play a role in data quality, including filters for participant characteristics, instructions on required devices, and post-task questions. First, I examine whether headphone checks (or other participant filtering methods) change whether significant effects are found and what the effect sizes are. Then I discuss potential causes and implications. If different methods produce different results, then we need to consider whether the difference is a benefit or a drawback, based on who is being excluded and why. Many methodological choices for online studies are aimed at removing noisy data from participants who could not hear the stimuli or were not paying attention. However, exclusions might have other unintended effects, such as disproportionately excluding participants from certain age groups or geographic regions.

1.1. Online versus in-person data

Online studies often have slightly lower accuracy or smaller effects of experimental conditions than in-person studies. Nevertheless, there is a strong correlation between online results and in-person results, as has been demonstrated in studies that compare the same task in each modality (e.g., Cooke & García Lecumberri, 2021; Elliott, Bell, Gorin, Robinson, & Marsh, 2022; Slote & Strand, 2016; Wolters, Isaac, & Renals, 2010; A. C. L. Yu & Lee, 2014). Some studies find no significant difference between online and in-person results (e.g., Denby, Schecter, Arn, Dimov, & Goldrick, 2018).

Many of the differences between in-person and online studies seem to be due to greater variation among online participants. On the one hand, this might be due to diversity of the sample. In-person experiments often use convenience samples that are primarily university students, and the results among university students and other population groups sometimes differ (Peterson, 2001). Online studies usually recruit from a participant population that is more diverse in age and education, as well as many other characteristics (Berinsky et al., 2012; Casler et al., 2013; Shapiro et al., 2013). While these factors will not necessarily impact behavior in every linguistic study, some of them do have the potential to influence results; for example, a range of studies are likely to be impacted by hearing loss among older participants or dialect differences associated with socio-economic status. Because of these differences in the population being sampled, effects of variables like age (e.g., Shen & Wu, 2022) might appear to be effects of the online medium if a study does not control for them.

On the other hand, some of the variation in results for online participants might be due to variability in aspects of the experimental context, such as participants’ listening equipment. Online studies have much less control over the experimental setup than in-person studies do. While in-person studies are often conducted in the same room with the same equipment for all participants, online participants may vary substantially in the device they use to play the stimuli and enter responses, as well as in their location (both across large-scale geographic areas and in small-scale differences in the type of room), background noise, surrounding activity and other distractions, and the stability of their internet connection. Not all of these potential sources of variation have been investigated, so it remains unclear what the relative contribution of each factor might be.

Consistent with an effect of experimental setup rather than the participants themselves, Cooke and García Lecumberri (2021) find that online participants transcribe sentences less accurately than in-person participants even when both conditions sample from the same population. They also find that self-rated headphone quality is a predictor of accuracy; online participants who reported using more expensive headphones had higher accuracy than those who reported using cheaper headphones, and those with expensive headphones performed similarly to in-person participants. There are differences in the audio produced by different broad categories like in-the-ear versus over-the-ear headphones, as well as differences within each category (Breebaart, 2017). However, variation across listening devices is not the only reason for differences between in-person and online studies. In one particularly striking example, McAllister, Preston, Ochs, and Hitchcock (2022) mailed the same model of headphones to all online participants, but still found more variability among online participants than in-person participants. Additionally, studies that do not involve audio input also often find lower accuracy or smaller effects among online participants than among in-person participants (Dandurand, Shultz, & Onishi, 2008; Schnoebelen & Kuperman, 2010).

Participants in online studies sometimes produce poor data because of low effort or limited attention. For speech perception studies, it is particularly relevant that online participants sometimes report distractions that involve speech, such as listening to music, watching television, or talking to another person (Chandler, Mueller, & Paolacci, 2014; Clifford & Jerit, 2014; Hauser, Paolacci, & Chandler, 2019). If the poor listening environment is caused by additional audio presented through the same device, even high-quality headphones will not help. A headphone check will usually filter out participants with low attention, low effort, or poor listening environments, but other types of checks for accuracy or attention will also filter out such participants. Some studies which use headphone checks also use additional checks for attention or audio quality, such as excluding participants with low accuracy on clear items (Brown et al., 2018; Mills, Shorey, Theodore, & Stilp, 2022; Saltzman & Myers, 2021) or inaccurate responses for catch trials that instruct them to provide a specific response (Brekelmans, Lavan, Saito, Clayards, & Wonnacott, 2022; Nayak et al., 2022; Seow & Hauser, 2022). Including multiple types of checks makes it difficult to evaluate which one(s) were most useful in filtering out participants with low attention or poor listening environments without also filtering out participants whose data were usable. Some studies include checks to ensure attention and ability to hear the audio stimuli without including a headphone check (Denby & Goldrick, 2021; D’Onofrio, 2018; Getz & Toscano, 2021). Another approach is to include post-task questions for participants to self-report whether they were paying attention to the task, encountered technical difficulties, and/or felt their data was usable (Beier & Ferreira, 2022; Nayak et al., 2022). All of these methods are likely to filter out unusable data.

It is possible that participants in online studies are more likely to misunderstand the task than participants in in-person studies, because the experimenter is not present to verify participants’ understanding. Some authors speculate that misunderstanding of the task might contribute to low accuracy among online participants. For example, in their study testing the dichotic loudness headphone check, Woods et al. (2017) suggest that the relatively high fail rate for participants who reported wearing headphones might in part be due to some of them not understanding the task instructions. However, I am not aware of any studies directly testing whether differences in understanding the task contribute to differences between in-person and online studies, or whether such an effect would differ from effects of attention.

Another potential source of variability is inaccurate information about the participants. Sometimes participants misrepresent themselves in order to access studies (Chandler & Paolacci, 2017; Peer, Rothschild, Gordon, Evernden, & Damer, 2021). This misrepresentation could influence results through inaccurate information about characteristics that are being examined as predictors, such as gender or age. It could also obscure results if some participants do not belong to the target population; sometimes participants will falsely claim to be native speakers of English or of other languages to which studies are restricted (Aguinis, Villamor, & Ramani, 2021), which would make their data unusable for most phonological experiments. Headphone checks will not help with this aspect of potential variation, because they are not language specific, aside from requiring participants to be able to understand the instructions.

There are many potential factors that might contribute to more variable or less accurate results in online studies, based on the experimental setup, characteristics of the participants, and how the experiments are designed. Focusing only on headphone usage might lead researchers to give less attention to other sources of variation, even though the relative contribution of different factors is often unclear. Some factors are almost never considered, despite having demonstrable effects on data collection. For example, time of day affects many demographic characteristics of the participants who do an online task (Casey, Chandler, Levine, Proctor, & Strolovitch, 2017), but this factor is rarely controlled or reported.

It is also important to remember that variation across listeners and their listening environments in online studies is not necessarily a bad thing. On the one hand, it might make small effects more difficult to find, if variation across participants is essentially adding noise to the data. On the other hand, variation across participants may capture more representative patterns of behavior than would be found in a more uniform population, and variation in the experimental environment may be useful in reducing potential effects of factors like the experimenter’s dialect (cf. e.g., Hay, Drager, & Warren, 2009). Online listeners may have listening environments that are more typical of natural language use, which could result in different behavior than perception under laboratory conditions; both conditions are likely to be informative (cf. lab speech versus natural speech, e.g., Wagner, Trouvain, & Zimmerer, 2015).

1.2. Checking for headphones

Many researchers want to ensure that participants use headphones to complete perception experiments. At least half of online perception studies include a headphone check to ensure that participants are wearing headphones, either the dichotic loudness test (Woods et al., 2017) or the Huggins pitch perception test (Milne et al., 2021). Following are some examples (not an exhaustive list) of online phonetics and phonology experiments divided based on whether they used headphone checks and whether they instructed listeners to use headphones. See the appendix for a tabular survey of this information.

The dichotic loudness test presented by Woods et al. (2017) is used in many experiments (e.g., Krumbiegel, Ufer, & Blank, 2022; Lavan, Knight, Hazan, & McGettigan, 2019; McPherson et al., 2022; Mepham, Bi, & Mattys, 2022; Merritt & Bent, 2022; Mills et al., 2022; Nayak et al., 2022; Saltzman & Myers, 2021; A. C. L. Yu, 2022).

The Huggins check, only recently popularized by Milne et al. (2021), already appears in many experiments (e.g., Beier & Ferreira, 2022; Brekelmans et al., 2022; Ringer, Schröger, & Grimm, 2022; Tamati, Sevich, Clausing, & Moberly, 2022; Wu & Holt, 2022).

Studies that do not include a headphone check often instruct listeners that they should use headphones; some additionally include a question at the end of the experiment asking participants what device they used for listening to the stimuli (Davidson, 2020; Getz & Toscano, 2021; Reinisch & Bosker, 2022), though others do not (Manker, 2020; McHaney, Tessmer, Roark, & Chandrasekaran, 2021).

However, not all studies require participants to wear headphones; some do not include a headphone check or instructions to wear headphones (e.g., Denby & Goldrick, 2021; D’Onofrio, 2018; Kato & Baese-Berk, 2022; Vujović, Ramscar, & Wonnacott, 2021; Williams, Panayotov, & Kempe, 2021). It is important to note that the lack of consistent headphone use in these studies does not seem to be an issue in finding meaningful results.

The use of headphone checks is motivated by concerns about controlling the experimental setup (Milne et al., 2021; Woods et al., 2017). It is often assumed that headphones will produce a better listening setup, more comparable to an in-person study, and that this will result in stronger or more reliable results (e.g., Brown et al., 2018; Geller et al., 2021; Seow & Hauser, 2022). However, it is often not made explicit what benefit headphones are assumed to provide. Many studies just say that the check was done to ensure that participants were wearing headphones, without a specific description of why headphones were important for the task (e.g., Beier & Ferreira, 2022; Bieber & Gordon-Salant, 2022; Brekelmans et al., 2022; Giovannone & Theodore, 2021; Krumbiegel et al., 2022; Lavan et al., 2019; Luthra et al., 2021; Mepham et al., 2022; Merritt & Bent, 2022; Mills et al., 2022; Ringer et al., 2022; Saltzman & Myers, 2021; Wu & Holt, 2022; A. C. L. Yu, 2022; M. Yu, Schertz, & Johnson, 2022). Some studies describe the headphone check as ensuring better audio conditions by improving the sound quality and decreasing background noise (e.g., Brown et al., 2018; Geller et al., 2021; McPherson et al., 2022; Nayak et al., 2022; Seow & Hauser, 2022). Both of the studies which provide the commonly used headphone check methods primarily describe attenuation of environmental noise as the way that headphones are likely to improve audio conditions (Milne et al., 2021; Woods et al., 2017).

Previous work has indeed shown that headphones block out some ambient noise, though the extent of this noise attenuation varies by frequency and differs across headphones (Ang, Koh, & Lee, 2017; Liang, Zhao, French, & Zheng, 2012; Shalool, Zainal, Gan, Umat, & Mukari, 2017). It is not clear whether this is a major factor in perception studies. In a word identification task with background noise played over loudspeakers, Molesworth and Burgess (2013) found that listeners had only slightly higher accuracy when the stimuli were played over headphones with the noise-cancelling function turned off than when the stimuli were played over loudspeakers, though accuracy was substantially higher with noise cancellation turned on. The potential role of noise-cancelling headphones in online research is unclear, both because this factor has not been examined and because the degree of noise cancellation depends on the type of noise.

It is not clear that headphones produce higher fidelity audio than other devices. For example, low frequencies are not well represented by low quality or even average headphones, though high quality headphones perform well (Breebaart, 2017; Olive, Khonsaripour, & Welti, 2018; Wycisk et al., 2022); similar differences in the relative intensity of low frequencies are found for built-in laptop speakers as compared to loudspeakers (Wycisk et al., 2022). Whether due to differences in frequency response or other factors, the quality of headphones also impacts perceptual clarity (Cooke & García Lecumberri, 2021).

The headphone checks provided by Milne et al. (2021) and Woods et al. (2017) are both based on testing whether the audio is presented in stereo at a close enough distance that phase-shifting effects are not overly attenuated. These studies demonstrate that their methods are indeed predictive of headphone use; the relationship is not absolute, but the majority of participants who are wearing headphones pass these checks and the majority of participants who are not wearing headphones fail. It is important to note that the goal of these studies is only to demonstrate that these methods can be used to check for headphone use; they do not test whether headphone checks improve data quality. Milne et al. (2021) note that stereo audio presentation is necessary for tasks like dichotic listening and spatial manipulations; however, it will not necessarily be valuable in other tasks.

In direct comparisons, is there evidence for headphone checks affecting the results of perception experiments? Differences between online and in-person studies can be found even when the online study included a headphone check (e.g., Elliott et al., 2022), and some studies find no significant difference between online and in-person results despite not using a headphone check (e.g., Denby et al., 2018). A few studies include a headphone check and compare results based on whether it is used to exclude participants; they report that excluding participants based on the headphone check did not change the results (Ringer et al., 2022; Shen & Wu, 2022).

Headphone checks might alter the demographics of which participants are included in experiments. Even if the checks are functioning as intended and identifying participants who are using headphones, this may have unintentional side effects. Device choice is predicted by a range of demographic factors, including age, gender, educational background, and income (Haan, Lugtig, & Toepoel, 2019; Lambert & Miller, 2015; Passell et al., 2021), so checking for headphones could systematically exclude more participants from certain groups. Headphone checks might also impact participant demographics based on additional factors that influence perception; for example, hearing loss can decrease accuracy in Huggins pitch detection (Santurette & Dau, 2007). In some studies, excluding listeners with hearing loss may be desirable, whereas in others it may be undesirable (e.g., in studies looking at age-related effects).

In addition to the ways that headphone checks might impact the data, it is important to consider the ways that they are not impacting the data. As described above, there are many factors that might vary among online participants. Headphone checks do not control everything about the audio presentation or the environment; decreased variability in one aspect of audio presentation does not mean that the audio or the listening environment will be uniform in other respects. It is perhaps because of these other sources of variability that both headphone check methods are failed by a substantial number of participants who report using headphones (Milne et al., 2021; Woods et al., 2017).

1.3. This study

This article presents an online study testing whether excluding participants based on headphone checks improves the results in three perceptual tasks testing effects that depend on different aspects of the acoustic signal: vowel duration, F0, F1, and spectral tilt. All of the effects being tested have been observed previously in in-person studies, so there are strongly expected results that should be present if the data is reliable.

The impact of the headphone checks on several demographic variables is also examined, to evaluate whether headphone checks are substantially changing the set of participants who are included in experiments.
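A simple descriptive starting point for this kind of comparison is the proportion of participants in each demographic group who pass a given check. The sketch below is illustrative only: the function name, the group labels, and the example records are hypothetical, and a full analysis would also need a statistical test or model rather than raw proportions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def pass_rate_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Proportion of participants in each group who passed a given check.

    `records` holds (group_label, passed) pairs, e.g. an age band and
    whether that participant passed the Huggins check.
    """
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # group -> [n_passed, n_total]
    for group, passed in records:
        counts[group][1] += 1
        if passed:
            counts[group][0] += 1
    return {group: n_passed / n_total for group, (n_passed, n_total) in counts.items()}

# Hypothetical records for illustration only; these are not results from this study.
example = [("18-34", True), ("18-34", True), ("18-34", False),
           ("55+", True), ("55+", False), ("55+", False), ("55+", False)]
rates = pass_rate_by_group(example)
print(rates)
```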

2. Methods

The study consists of three phonological perception tasks replicating previous in-person work, two headphone checks, and a brief questionnaire.1

Stimuli for the three speech perception tasks were produced by two female American English speakers (one for Tasks 1 and 2, one for Task 3), elicited individually in randomized order with PsychoPy (Peirce, 2007), recorded in a quiet room with a stand-mounted Blue Yeti microphone in the Audacity software program, and digitized at a 44.1 kHz sampling rate with 16-bit quantization. The characteristics of the stimuli for each task are described in the following subsections.

Stimuli for the two headphone checks came from recent studies testing each method: Milne et al.’s (2021) stimuli for the Huggins pitch perception check, and Woods et al.’s (2017) stimuli for the dichotic loudness perception check.

The study was run online, with participants recruited and paid through Prolific and the experiment presented through Qualtrics. Participants were 120 monolingual native speakers of American English (40 male, 75 female, five nonbinary/no response; mean age 33.6).

Prolific allows filtering based on participants’ overall approval percentage in previous studies, as participants who have done well on prior tasks are likely to also do well on new tasks (Peer, Vosgerau, & Acquisti, 2014). In order to get a range of participants with different levels of attention and effort while mostly collecting higher-quality data, thirty participants were recruited with this filter set at 90%, and ninety participants were recruited with this filter set at 95%.

On Prolific, the description of a study can indicate that participants should use a particular device (though this does not do any testing to ensure that participants are following the recommendations). In order to check the effect of these recommendations, thirty of the participants recruited at the 95% approval level saw a restriction that they should be using a computer or tablet (not a phone), and sixty of them did not see any restriction about what device they should use.

Prolific also allows filtering based on nationality and location. Participants were restricted to being from the United States and currently residing in the United States.

Participants were instructed that they would hear words and sounds and make decisions about what they heard. There was a different task in each block. Within a block, the order of presentation of items was randomized.

After completing the listening tasks, participants completed a brief questionnaire, which included questions about age (free response), gender (free response), highest level of education (middle school or less, some high school, high school, some college [undergraduate], college [undergraduate], some graduate/professional school, graduate/professional school), state or region where they spent most of their childhood (free response), state or region where they currently live (free response), and what device they used to listen to the stimuli (earbuds/in-ear headphones, full-size/over-the-ear headphones, built-in phone speaker, built-in tablet speaker, built-in computer speaker, external speakers). They were permitted to skip any of these questions that they preferred not to answer.
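For analyses that compare headphone and non-headphone listeners, the six device options can be collapsed into two broader classes. This is a minimal sketch; the two-way grouping and the function name `device_class` are assumptions for illustration, not necessarily the grouping used in the analysis reported here.

```python
from typing import Optional

# Questionnaire response options (from the text), grouped into two broader classes.
HEADPHONE_OPTIONS = {
    "earbuds/in-ear headphones",
    "full-size/over-the-ear headphones",
}
SPEAKER_OPTIONS = {
    "built-in phone speaker",
    "built-in tablet speaker",
    "built-in computer speaker",
    "external speakers",
}

def device_class(response: Optional[str]) -> str:
    """Map a self-reported device to 'headphones', 'speakers', or 'no response'."""
    if not response:
        return "no response"  # participants could skip the question
    if response in HEADPHONE_OPTIONS:
        return "headphones"
    if response in SPEAKER_OPTIONS:
        return "speakers"
    raise ValueError(f"unexpected device response: {response!r}")

print(device_class("built-in computer speaker"))
```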

Each task contained a small number of trials, in order to ensure that the study was brief; the median completion time for the full study was slightly over 10 minutes. The duration of online tasks is important for two main reasons. First, long tasks can have high dropout rates; almost half of respondents report that they would quit an experiment if it took over 15 minutes (Sauter, Draschkow, & Mack, 2020). Second, attention to the task decreases with longer online studies, producing a corresponding drop in data quality (Yentes, 2015). Longer studies result in larger differences between the attention of in-person and online participants, with online participants paying less attention (Goodman, Cryder, & Cheema, 2013), even when performance may be similar for short tasks (Paolacci, Chandler, & Ipeirotis, 2010).

2.1. Task 1: F0 and onset stop laryngeal contrasts

Previous work has found that a higher F0 following an onset stop increases the perception that it is voiceless (and aspirated) rather than voiced (Haggard, Ambler, & Callow, 1970). This task aims at replicating that result, providing a test of pitch perception.

Stimuli were produced by a trained phonetician targeting a stop ambiguous between the voiced and voiceless categories; the VOT of each item was then manipulated to be as close as possible to the category boundary between the voiced and voiceless stop for each item (mean 27 ms for bilabials, 31 ms for alveolars). There were two F0 manipulations for each item. F0 was manipulated using the Change pitch median function in the Vocal Toolkit (Corretge, 2020) in Praat. In the low F0 condition, the mean was 216 Hz, and in the high F0 condition, it was 264 Hz; these values were chosen to be equally distant from the speaker’s natural mean F0 while still being within her natural range. There were six of these ambiguous items (e.g., b/pest). This produced a total of 12 stimuli.
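The scale on which the two F0 values are equally distant is not stated. Assuming a linear Hz scale, the speaker's natural mean is implied to be their midpoint; the sketch below computes it and, for comparison, the same distances in semitones. The semitone comparison is added here only to show that "equally distant" depends on the scale chosen.

```python
import math

LOW_F0_HZ = 216.0   # low-F0 condition mean (from the text)
HIGH_F0_HZ = 264.0  # high-F0 condition mean (from the text)

def semitones(f_from: float, f_to: float) -> float:
    """Signed distance from f_from to f_to in semitones."""
    return 12.0 * math.log2(f_to / f_from)

# Assumption: "equally distant" is measured linearly in Hz.
implied_mean_hz = (LOW_F0_HZ + HIGH_F0_HZ) / 2.0
print(f"implied natural mean F0: {implied_mean_hz:.0f} Hz")
print(f"Hz below/above: {implied_mean_hz - LOW_F0_HZ:.0f} / {HIGH_F0_HZ - implied_mean_hz:.0f}")
print(f"semitones below/above: {semitones(LOW_F0_HZ, implied_mean_hz):.2f} / "
      f"{semitones(implied_mean_hz, HIGH_F0_HZ):.2f}")
```

Under the linear-Hz reading, the implied mean is 240 Hz, 24 Hz from each condition; in semitones the two conditions are about 1.82 and 1.65 semitones away, so the symmetry holds only on the linear scale.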

Mixed with these test stimuli, there were also 24 filler stimuli: 12 items with onset fricative place contrasts (e.g., heft, theft) and 12 items with coda stop place contrasts (e.g., bud, bug). These served to provide a test of listeners’ accuracy in identifying unambiguous items.

This produced a total of 36 items, each of which was heard a single time by each listener. The intensity of all items was normalized so that they had the same mean intensity. A list of these words is given in the appendix.
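The text does not say which tool performed the intensity normalization (Praat's Scale intensity command is one common option). The sketch below assumes that mean intensity is computed from the RMS amplitude of the whole item, expressed in dB relative to 2 × 10⁻⁵ Pa, and that every item is rescaled by a single gain to a shared target; the 70 dB target is only an example value.

```python
import numpy as np

REF_PRESSURE = 2e-5  # dB SPL reference; assumes samples are expressed in pascals

def mean_intensity_db(x: np.ndarray) -> float:
    """Mean intensity in dB, computed from the RMS amplitude of the whole signal."""
    rms = float(np.sqrt(np.mean(np.square(x))))
    if rms == 0.0:
        raise ValueError("cannot normalize a silent signal")
    return float(20.0 * np.log10(rms / REF_PRESSURE))

def normalize_intensity(x: np.ndarray, target_db: float = 70.0) -> np.ndarray:
    """Rescale x with one gain factor so that its mean intensity equals target_db."""
    gain = 10.0 ** ((target_db - mean_intensity_db(x)) / 20.0)
    return x * gain

fs = 44100
t = np.arange(fs) / fs
quiet = 0.01 * np.sin(2 * np.pi * 220 * t)
loud = 0.2 * np.sin(2 * np.pi * 330 * t)
normalized = [normalize_intensity(s) for s in (quiet, loud)]
print([round(mean_intensity_db(s), 6) for s in normalized])
```

A single gain preserves each item's internal amplitude contour; the only design constraint is choosing a target that leaves headroom, since scaling up can push peaks past the full-scale range of a 16-bit file.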

The items were presented as a list; listeners clicked on each item to hear the stimulus. Responses were given by clicking on one of two written response options. For the F0-manipulated test items, these were the respective voiced and voiceless options (e.g., best, pest). For the filler items, the response options were the two contrasting words, one of which was a clear match for the stimulus. The order of the two response options was balanced across participants.
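The text states only that the order of the two response options was balanced across participants. One simple way to achieve this, assumed here rather than taken from the study materials, is to alternate the order by participant index; with 120 participants, each order is then shown to 60 of them. The function name is hypothetical.

```python
from typing import List, Tuple

def response_order(options: Tuple[str, str], participant_index: int) -> List[str]:
    """Return the two response options in a counterbalanced order.

    Even-indexed participants see the options as listed; odd-indexed
    participants see them reversed.
    """
    first, second = options
    if participant_index % 2 == 0:
        return [first, second]
    return [second, first]

orders = [tuple(response_order(("best", "pest"), i)) for i in range(120)]
print(orders.count(("best", "pest")), orders.count(("pest", "best")))
```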

All 36 items in this task had the vowel /ɛ/ or /ʌ/, and they served as the exposure condition for Task 2, which tested listeners’ category boundaries between high and mid vowels. Half of the participants heard these items with raised F1 and half of the participants heard them with lowered F1 (described in more detail below).

2.2. Task 2: Vowel height category boundaries

Previous work has demonstrated that vowel category boundaries can be shifted based on exposure to manipulated vowels; exposure to a higher F1 makes listeners expect vowels in that category to have a higher F1 (Ladefoged & Broadbent, 1957), so exposure to raised/lowered F1 in /ɛ, ʌ/ should produce a corresponding shift in subsequent perception of ambiguous items on /ɛ-ɪ/ continua. This provides a test of formant perception.

As mentioned above, all of the items in Task 1 had manipulated F1, to set up the exposure conditions of raised or lowered F1 in mid vowels. The response options for each exposure item differed in the onset or the coda but had the same vowel (e.g., best, pest), so it was unambiguous how each vowel should be categorized. Half of the participants heard those vowels in Task 1 with raised F1 (mean 918 Hz), and half heard them with lowered F1 (mean 673 Hz), equally distant in Bark from the speaker’s naturally produced mean F1 for these vowels. Manipulations for these training stimuli and for the testing stimuli were done in Praat using the Change formants function in the Vocal Toolkit (Corretge, 2020), and were manually checked to ensure that the manipulation was successful. The only change was in F1, in order to focus on a single characteristic and avoid potential confounds. Stimuli in both conditions were made from the same recordings; thus, the stimuli in the two conditions differed only in F1.
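Because the raised and lowered F1 values are described as equally distant in Bark from the speaker's natural mean, that mean can be recovered as their Bark midpoint. The Bark conversion used is not stated; the sketch assumes Traunmüller's (1990) approximation without its low- and high-end corrections, and the function names are illustrative.

```python
def hz_to_bark(f_hz: float) -> float:
    """Traunmüller (1990) approximation, without its low- and high-end corrections."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53

def bark_to_hz(z: float) -> float:
    """Algebraic inverse of hz_to_bark."""
    return 1960.0 * (z + 0.53) / (26.28 - z)

RAISED_F1_HZ = 918.0   # raised-F1 exposure condition (from the text)
LOWERED_F1_HZ = 673.0  # lowered-F1 exposure condition (from the text)

midpoint_bark = (hz_to_bark(RAISED_F1_HZ) + hz_to_bark(LOWERED_F1_HZ)) / 2.0
implied_natural_f1 = bark_to_hz(midpoint_bark)
print(f"implied natural mean F1: {implied_natural_f1:.0f} Hz")
```

Under this assumption the implied natural mean is about 790 Hz, slightly below the linear-Hz midpoint of 795.5 Hz; a different Bark formula would shift this value a little.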

The testing stimuli were continua of ambiguous vowels based on three pairs of monosyllabic English words (itch-etch, pit-pet, tick-tech), differing only in the vowel, /ɪ-ɛ/. All words had four F1 manipulations: F1 matching the speaker’s mean F1 for each of the two vowel qualities, and two intermediate values, equally spaced in the Bark scale. The intensity of the resulting items was then normalized so that they all had the same mean intensity. Both vowels for each contrast were used to create stimuli, producing two continua (eight items): For example, the pit, pet items included four manipulations made from naturally produced pit and four manipulations made from naturally produced pet, with the same F1 values in both continua. No other characteristics were altered, so the two continua for each pair often differed in F2, duration, and other characteristics. Identifications of items in these vowel continua served to test the effect of the manipulations that listeners were exposed to in the training block.
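The four F1 steps can be generated by spacing values linearly in Bark between the two endpoint vowels and converting back to Hz; crossing the steps with the three word pairs and the two source recordings per pair gives the 24 test items. In this sketch the endpoint F1 values are hypothetical placeholders (the speaker's actual means are not reported here), and the Bark conversion is assumed to be Traunmüller's (1990) approximation.

```python
import numpy as np

def hz_to_bark(f_hz):
    """Traunmüller (1990) approximation, without its end corrections; works on arrays."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53

def bark_to_hz(z):
    """Algebraic inverse of hz_to_bark; works on arrays."""
    return 1960.0 * (z + 0.53) / (26.28 - z)

def bark_continuum(f1_start_hz: float, f1_end_hz: float, n_steps: int = 4) -> np.ndarray:
    """F1 values equally spaced in Bark between two endpoint values (inclusive)."""
    z = np.linspace(hz_to_bark(f1_start_hz), hz_to_bark(f1_end_hz), n_steps)
    return bark_to_hz(z)

# Hypothetical endpoint F1 values (Hz) for /ɪ/ and /ɛ/; not the speaker's measured means.
F1_IH_HZ, F1_EH_HZ = 480.0, 650.0
PAIRS = [("itch", "etch"), ("pit", "pet"), ("tick", "tech")]

f1_steps = bark_continuum(F1_IH_HZ, F1_EH_HZ)
# Each word in a pair is the source recording for one continuum with the same F1 steps.
items = [(pair, source, round(float(f1), 1))
         for pair in PAIRS for source in pair for f1 in f1_steps]
print(len(items))
```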

The items were presented as a list; listeners clicked on each item to hear the stimulus. Responses were given by clicking on one of the written words, which differed only in the vowel (e.g., pit, pet). There were three pairs of words and eight items for each pair, for a total of 24 items, each of which was heard a single time by each listener. The order of the two response options was balanced across participants.

2.3. Task 3: Spectral tilt and perceived vowel duration

Sanker (2020) demonstrated that lower spectral tilt increases perceived vowel duration; this task uses a subset of the same stimuli used in that study. While this effect is not as well-established as the previous two, it is included in order to examine an expected effect that is based on spectral tilt and thus might be particularly sensitive to device, as frequency response differs across devices. This task also tests perception of vowel duration and coda voicing.

Stimuli were based on recordings of a female American English speaker producing the words cub and cup: /kʌ/ followed by a voiced or voiceless labial stop. The words were cut at the end of the vowel, and each beginning (originally produced with /p/ versus /b/) was spliced with two different endings: /p/ and /b/. The vowel duration was manipulated to produce four duration steps, from 146 ms to 218 ms.

Each vowel was manipulated to have two spectral tilts, high (10.5 dB) and low (3.2 dB), using the Praat Filter (formula) function to recalculate intensities relative to frequency. The filter formula used to create high spectral tilt items was self / (1 + x/100)^0.8, and the filter formula used to create low spectral tilt items was self * (1 + x/100)^0.8. The intensity of the resulting items was then normalized so that they all had the same mean intensity. Note that the manipulation was relative to the original spectral tilt, so it preserved voicing-conditioned spectral tilt differences.
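Dividing the spectrum by (1 + x/100)^0.8 attenuates higher frequencies and so raises spectral tilt; multiplying by the same factor boosts them and lowers it. The numpy sketch below expresses both operations as a single signed exponent applied to the rFFT of a signal. It assumes, as in Praat's Spectrum objects, that x is frequency in Hz, and it does not attempt to reproduce Praat's processing exactly; the function names are illustrative.

```python
import numpy as np

def tilt_filter(x: np.ndarray, fs: float, exponent: float) -> np.ndarray:
    """Scale each frequency component of x by (1 + f/100) ** exponent, f in Hz.

    A negative exponent attenuates higher frequencies (higher tilt);
    a positive exponent boosts them (lower tilt).
    """
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return np.fft.irfft(spectrum * (1.0 + freqs / 100.0) ** exponent, n=len(x))

def high_frequency_fraction(x: np.ndarray, fs: float, cutoff_hz: float = 2000.0) -> float:
    """Share of spectral energy at or above cutoff_hz."""
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return float(power[freqs >= cutoff_hz].sum() / power.sum())

fs = 44100
noise = np.random.default_rng(1).standard_normal(fs // 2)
high_tilt = tilt_filter(noise, fs, -0.8)  # analogous to self / (1 + x/100)^0.8
low_tilt = tilt_filter(noise, fs, 0.8)    # analogous to self * (1 + x/100)^0.8
print(high_frequency_fraction(high_tilt, fs), high_frequency_fraction(low_tilt, fs))
```

Because the two operations are exact inverses, applying one after the other returns the original signal, which is also why the manipulation is relative to each item's original tilt.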

This produced a total of 32 items, each of which was heard a single time by each listener.
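The 32 items follow from fully crossing the four factors described above: vowel source (cub or cup), spliced ending (/b/ or /p/), four vowel durations, and two spectral tilts. Only the duration endpoints (146 and 218 ms) are given; the sketch assumes the four steps are equally spaced, which gives 24 ms increments.

```python
import itertools

VOWEL_SOURCES = ("cub", "cup")   # word the /kʌ/ portion was cut from
ENDINGS = ("b", "p")             # coda spliced onto each beginning
# Assumption: four equally spaced steps between the stated endpoints (146 and 218 ms).
DURATIONS_MS = tuple(146 + 24 * step for step in range(4))
TILTS = ("high", "low")

items = list(itertools.product(VOWEL_SOURCES, ENDINGS, DURATIONS_MS, TILTS))
print(len(items), DURATIONS_MS)
```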

The items were presented as a list; listeners clicked on each item to hear the stimulus. Responses were given by clicking on “long” or “short” as the answer to the question “Was the vowel long or short?” The order of the two response options was balanced across participants.

2.4. Headphone checks

Two headphone checks were completed after the phonological tasks. They were not described as headphone checks; the instructions merely asked participants to evaluate the given characteristic.

One headphone check was a Huggins pitch task (Milne et al., 2021), based on broadband noise in which a narrow frequency band is phase-shifted by 180 degrees between the two stereo channels; stimuli came from the supplementary materials provided with that paper. When each channel reaches only one ear, as with headphones, this interaural phase shift produces a perceived pitch at the frequency of the shifted band. Each stimulus contained three sections of noise, one of which contained this phase shift and thus should sound like it contains a tone. Listeners were asked which of the three sections of noise contained a tone. Responses were given by selecting a button with the corresponding number (1, 2, 3). The numbers were always listed in ascending order.
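The principle behind the Huggins stimuli can be sketched with NumPy. This is a hypothetical reconstruction for illustration only (the study used the stimuli supplied by Milne et al., 2021): the center frequency, bandwidth, and function name are assumptions, and a real trial would also include ramps and three noise intervals.

```python
import numpy as np


def huggins_pitch_noise(duration_s=1.0, fs=44100, center_hz=600.0,
                        rel_bandwidth=0.12, seed=0):
    """Return (stereo, band_mask): broadband noise whose right channel has a
    narrow band around `center_hz` phase-inverted relative to the left."""
    rng = np.random.default_rng(seed)
    n = int(round(duration_s * fs))
    left = rng.standard_normal(n)

    spectrum = np.fft.rfft(left)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    half_bw = center_hz * rel_bandwidth / 2
    band = (freqs >= center_hz - half_bw) & (freqs <= center_hz + half_bw)

    # A 180-degree phase shift is a sign flip of the complex spectrum in the band.
    right_spectrum = spectrum.copy()
    right_spectrum[band] *= -1
    right = np.fft.irfft(right_spectrum, n=n)

    return np.column_stack([left, right]), band


stereo, band = huggins_pitch_noise()
# Each channel alone is ordinary broadband noise; the interaural difference
# is confined to the shifted band, which is where the pitch cue lives.
diff_spectrum = np.abs(np.fft.rfft(stereo[:, 0] - stereo[:, 1]))
print("energy of L-R outside the band:", float(np.sum(diff_spectrum[~band] ** 2)))
```

Since the two channels have identical magnitude spectra, nothing distinguishes the target interval within a single channel; the cue survives only if the channels are delivered separately to the two ears.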

The other headphone check was based on loudness perception (Woods et al., 2017); stimuli came from the supplementary materials provided with that paper. In one of the three items in each trial, the sound was presented with a 180-degree phase shift between the stereo channels. Over headphones, this phase reversal does not change the item's loudness. Over speakers, however, the signals from the two channels partially cancel each other acoustically, which tends to make the phase-shifted item sound quieter than the item that is actually presented at a lower intensity. Listeners were asked which of three sounds was the quietest. Responses were given by selecting a button with the corresponding number (1, 2, 3). The numbers were always listed in ascending order.
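The logic of an antiphase loudness trial can be sketched as follows. This is not the Woods et al. (2017) stimulus set: the 200 Hz tone, the −6 dB level difference, and the function names are illustrative assumptions, and summing the two channels is an idealized worst case of acoustic mixing over speakers (real rooms produce only partial cancellation).

```python
import numpy as np


def tone(freq_hz=200.0, dur_s=1.0, fs=44100, amp=0.5):
    t = np.arange(int(round(dur_s * fs))) / fs
    return amp * np.sin(2 * np.pi * freq_hz * t)


def loudness_trial(fs=44100, soft_db=-6.0):
    """Three stereo items: in-phase, antiphase, and genuinely quieter."""
    base = tone(fs=fs)
    soft = base * 10 ** (soft_db / 20)
    return {
        "in_phase": np.column_stack([base, base]),
        "antiphase": np.column_stack([base, -base]),  # 180-degree shift in one channel
        "quieter": np.column_stack([soft, soft]),     # the correct answer
    }


def rms(x):
    return float(np.sqrt(np.mean(x ** 2)))


trial = loudness_trial()
for name, stereo in trial.items():
    per_ear = rms(stereo[:, 0])        # headphones: each ear receives one channel
    summed = rms(stereo.sum(axis=1))   # idealized complete mixing over speakers
    print(f"{name:10s} per-ear RMS {per_ear:.3f}  summed RMS {summed:.3f}")
```

Per ear, the antiphase item is exactly as loud as the in-phase item, so a headphone listener can pick out the genuinely quieter item; once the channels mix, the antiphase item becomes the weakest signal and attracts the wrong response.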

Audio quality and attention were also checked based on the accuracy of identifications of the unambiguous items in Task 1 (e.g., bud, bug; theft, heft); the accuracy threshold was 85%.
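With 24 clear consonant items, the 85% threshold translates into a minimum number of correct responses. The helper names below are hypothetical; the sketch assumes the threshold is applied as "at least 85%" of the 24 items.

```python
import math

N_CLEAR_ITEMS = 24        # unambiguous consonant items in Task 1
ACCURACY_THRESHOLD = 0.85


def min_correct(n_items=N_CLEAR_ITEMS, threshold=ACCURACY_THRESHOLD):
    """Smallest number of correct responses that meets the threshold."""
    return math.ceil(threshold * n_items)


def passes_accuracy_check(n_correct, n_items=N_CLEAR_ITEMS,
                          threshold=ACCURACY_THRESHOLD):
    return n_correct / n_items >= threshold


print(min_correct())  # 21, since 0.85 * 24 = 20.4
```

In other words, a participant could miss at most three of the 24 clear items.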

3. Results

3.1. Exclusions by device and Prolific parameters

First, recall that there were different conditions in recruitment: Thirty participants were recruited with a 90% threshold for their overall approval rate and no suggestions about what device they should use. Sixty participants were recruited with a 95% threshold for their overall approval rate and no suggestions about what device they should use. Thirty participants were recruited with a 95% threshold for their overall approval rate and a suggestion that the task should be completed on a computer or tablet (and not a phone). The incomplete balancing of participants across these conditions was done in order to reduce the number of participants with very low-effort submissions, as the main goal of the experiment was to establish effects of headphone checks, not the effect of approval rate, which has been established in previous work.

The effect of the approval-rate filter is considered here only among participants who received no suggestions about which device to use, as no device suggestions were given to participants recruited with the lower approval-rate threshold. A higher threshold improved performance on the clear stimuli: 4/30 (13%) of participants recruited at the 90% approval threshold had accuracy below 85% for consonant decisions, compared to 3/60 (5%) of participants at the 95% threshold. However, the approval-rate threshold did not affect exclusions based on the headphone checks. 19/30 (63%) of participants at the 90% threshold failed the Huggins check, compared to 39/60 (65%) at the 95% threshold, and 17/30 (57%) of participants at the 90% threshold failed the dichotic loudness check, compared to 34/60 (57%) at the 95% threshold. This suggests that accuracy on clear stimuli captures attention or similar behaviors that affect a range of tasks, while the headphone checks are unrelated to the typical quality of a participant's engagement in studies.

The effect of instructing participants about which device to use is considered here among participants at the 95% threshold. Telling participants not to use their phones did affect reported device usage: among participants without any device instructions, 18/60 (30%) reported using their phones to listen to the stimuli, while none of the participants who received the device instructions reported using their phones. This might partly reflect a difference in reporting rather than in actual usage, but the instructions also affected how many participants passed the headphone checks, which suggests that participants in this condition were actually less likely to use phones. Among participants who were told that the task should not be completed on a phone, 13/30 (43%) failed the Huggins check (versus 65% among those without this instruction) and 14/30 (47%) failed the dichotic loudness check (versus 57%).

The next consideration is how many participants would be excluded by each headphone check and by the accuracy-based audio/attention check. Table 1 shows how these exclusions aligned. Most of the participants who had low accuracy in the consonant identification task also failed the headphone checks, but there were two participants with low accuracy on these items who passed one or both of the headphone checks. Performance in one headphone check was predictive of performance in the other, but the relationship was not absolute: 26 participants (22%) passed one headphone check but failed the other, which makes it important to evaluate the effects of each headphone check separately.

Table 1. Comparison of exclusions across methods.

| | Accuracy <85%, Huggins Fail | Accuracy <85%, Huggins Pass | Accuracy >85%, Huggins Fail | Accuracy >85%, Huggins Pass |
|---|---|---|---|---|
| Dichotic Loudness Fail | 7 | 0 | 48 | 10 |
| Dichotic Loudness Pass | 1 | 1 | 15 | 38 |
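The totals discussed in the text can be recovered from the eight cells of Table 1. This sketch simply re-encodes the table; the key layout is an arbitrary choice.

```python
# Counts from Table 1, keyed by (accuracy_ok, huggins_pass, loudness_pass).
TABLE_1 = {
    (False, False, False): 7,
    (False, True, False): 0,
    (True, False, False): 48,
    (True, True, False): 10,
    (False, False, True): 1,
    (False, True, True): 1,
    (True, False, True): 15,
    (True, True, True): 38,
}


def count(predicate):
    """Sum the cells whose (accuracy_ok, huggins_pass, loudness_pass) match."""
    return sum(n for key, n in TABLE_1.items() if predicate(*key))


total = count(lambda acc, hug, loud: True)
huggins_fail = count(lambda acc, hug, loud: not hug)
loudness_fail = count(lambda acc, hug, loud: not loud)
discordant = count(lambda acc, hug, loud: hug != loud)  # passed exactly one check
low_accuracy = count(lambda acc, hug, loud: not acc)
print(total, huggins_fail, loudness_fail, discordant, low_accuracy)  # 120 71 65 26 9
```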

Next, the exclusions will be divided by reported device usage. Table 2 indicates the number of participants with each Huggins score and the device that the participant reported using for the task. Milne et al. (2021) recommend a cutoff of 6/6 accurate responses, but 5/6 is also considered here.

Table 2. Number of participants by Huggins score (out of 6) and reported device.

| Reported device | <5 | 5 | 6 |
|---|---|---|---|
| built-in computer speaker | 21 | 1 | 5 |
| built-in tablet speaker | 2 | 0 | 0 |
| built-in phone speaker | 24 | 0 | 1 |
| external speakers | 3 | 0 | 0 |
| over-the-ear headphones | 7 | 1 | 21 |
| in-the-ear headphones | 12 | 2 | 18 |
| device not reported | 2 | 0 | 0 |

Even with the threshold of 5/6 correct, which is the threshold used for the rest of the analyses, the Huggins headphone check excluded 71 of the 120 participants (59%). It does generally seem to capture headphone use, though as noted above, it is not clear whether this restriction will improve the results. There is a noteworthy divide between over-the-ear and in-the-ear headphones: more participants who reported using in-the-ear headphones were excluded (12/32, 38%, versus 7/29, 24%).

Table 3 indicates the number of participants with each score on the dichotic loudness headphone check (see Woods et al., 2017) and the device that they reported using. The recommended threshold is 5/6 correct.

Table 3. Number of participants by dichotic loudness score (out of 6) and reported device.

| Reported device | <5 | 5 | 6 |
|---|---|---|---|
| built-in computer speaker | 21 | 1 | 5 |
| built-in tablet speaker | 2 | 0 | 0 |
| built-in phone speaker | 20 | 4 | 1 |
| external speakers | 3 | 0 | 0 |
| over-the-ear headphones | 8 | 2 | 19 |
| in-the-ear headphones | 9 | 0 | 23 |
| device not reported | 2 | 0 | 0 |

The dichotic loudness headphone check excluded 65 of the 120 participants (54%). Like the Huggins check, it seems to generally capture headphone use. Unlike the Huggins check, it showed no apparent difference between over-the-ear headphones (8/29 excluded, 28%) and in-the-ear headphones (9/32, 28%).
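The per-device exclusion rates behind these comparisons can be computed directly from Tables 2 and 3. The sketch re-encodes both tables and treats any score below 5/6 as a failure, matching the threshold used in the analyses; the function names are hypothetical.

```python
# (score < 5, score = 5, score = 6) by reported device.
HUGGINS = {  # Table 2
    "built-in computer speaker": (21, 1, 5),
    "built-in tablet speaker": (2, 0, 0),
    "built-in phone speaker": (24, 0, 1),
    "external speakers": (3, 0, 0),
    "over-the-ear headphones": (7, 1, 21),
    "in-the-ear headphones": (12, 2, 18),
    "device not reported": (2, 0, 0),
}
LOUDNESS = {  # Table 3
    "built-in computer speaker": (21, 1, 5),
    "built-in tablet speaker": (2, 0, 0),
    "built-in phone speaker": (20, 4, 1),
    "external speakers": (3, 0, 0),
    "over-the-ear headphones": (8, 2, 19),
    "in-the-ear headphones": (9, 0, 23),
    "device not reported": (2, 0, 0),
}


def exclusion_rates(table):
    """Share of participants per device scoring below 5/6."""
    return {device: below / (below + five + six)
            for device, (below, five, six) in table.items()}


def total_excluded(table):
    return sum(below for below, _, _ in table.values())


for name, table in (("Huggins", HUGGINS), ("Dichotic loudness", LOUDNESS)):
    rates = exclusion_rates(table)
    print(f"{name}: {total_excluded(table)} excluded; "
          f"over-ear {rates['over-the-ear headphones']:.0%}, "
          f"in-ear {rates['in-the-ear headphones']:.0%}")
```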

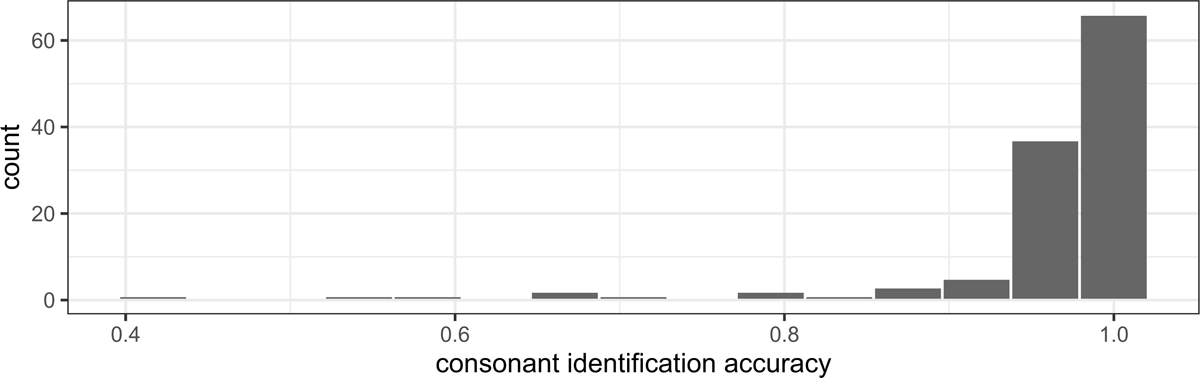

We can also consider exclusions based on how listeners identify items that should be unambiguous, to exclude participants who cannot hear the stimuli or are not paying attention to the task. Figure 1 shows the distribution of accuracy by participant for identifying the unambiguous items in Task 1; recall that these were binary response options differing only in one consonant, with naturally produced consonants (e.g., heft, theft). It is clear that there are some outliers that should be excluded, but the vast majority of participants had very high accuracy; they were paying attention to the task and could hear the stimuli.

Table 4 indicates the number of participants with each percentage of accurate identifications of the clear consonant stimuli (24 items).

Table 4. Number of participants by consonant identification accuracy and reported device.

| Reported device | <75% | >75%, <85% | >85% |
|---|---|---|---|
| built-in computer speaker | 1 | 1 | 25 |
| built-in tablet speaker | 0 | 0 | 2 |
| built-in phone speaker | 2 | 1 | 22 |
| external speakers | 1 | 0 | 2 |
| over-the-ear headphones | 0 | 1 | 28 |
| in-the-ear headphones | 2 | 0 | 30 |
| device not reported | 0 | 0 | 2 |

Using a threshold of 85% accuracy for these clear items excluded nine participants; making the threshold higher or lower does not have a large effect on how many participants are excluded. There was no apparent relationship between accuracy and the device that the participant used.
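Table 4 makes it possible to check how much a lower threshold would change the exclusions. This sketch re-encodes the table; it can only evaluate thresholds at the table's bin edges, and how participants exactly at 75% were binned is not stated in the table.

```python
# (<75%, 75-85%, >85%) by reported device, from Table 4.
TABLE_4 = {
    "built-in computer speaker": (1, 1, 25),
    "built-in tablet speaker": (0, 0, 2),
    "built-in phone speaker": (2, 1, 22),
    "external speakers": (1, 0, 2),
    "over-the-ear headphones": (0, 1, 28),
    "in-the-ear headphones": (2, 0, 30),
    "device not reported": (0, 0, 2),
}

total = sum(sum(row) for row in TABLE_4.values())
excluded_at_85 = sum(low + mid for low, mid, _ in TABLE_4.values())
excluded_at_75 = sum(low for low, _, _ in TABLE_4.values())
print(f"{total} participants; excluded at 85%: {excluded_at_85}; at 75%: {excluded_at_75}")
```

Lowering the threshold from 85% to 75% would retain only three additional participants.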

3.2. Exclusions by demographic characteristics

Who is being excluded by the headphone checks? Tables 5 and 6 summarize the demographic characteristics (age, gender, education, and childhood location) of participants who passed and failed each headphone check.

Table 5. Demographics by Huggins results.

| | Mean age (SD) | Gender | Education | Childhood location |
|---|---|---|---|---|
| Huggins Fail | 34.8 (12.8) | 50 f; 17 m; 4 nb/no response | 12 high school; 46 undergrad; 13 grad/professional | 22 midwest; 9 northeast; 23 southeast; 7 southwest; 9 west |
| Huggins Pass | 31.8 (10.7) | 25 f; 23 m; 1 nb/no response | 13 high school; 32 undergrad; 4 grad/professional | 9 midwest; 15 northeast; 11 southeast; 6 southwest; 7 west |

Table 6. Demographics by Dichotic Loudness results.

| | Mean age (SD) | Gender | Education | Childhood location |
|---|---|---|---|---|
| Dichotic Loudness Fail | 34.8 (13.6) | 40 f; 20 m; 5 nb/no response | 13 high school; 41 undergrad; 11 grad/professional | 16 midwest; 13 northeast; 22 southeast; 7 southwest; 6 west |
| Dichotic Loudness Pass | 32.1 (9.6) | 35 f; 20 m | 12 high school; 37 undergrad; 6 grad/professional | 15 midwest; 11 northeast; 12 southeast; 6 southwest; 10 west |

In the categories for highest level of education, “some” and “completed” are merged; for example, participants who reported that they finished an undergraduate degree and participants who reported that they spent some time in an undergraduate program are reported the same way. This was done because there was little evidence for a difference between each pair of categories, and many of the people reporting “some” of a particular level of education are likely to still be in that program.

Childhood location (where the participant reported spending most of their childhood) is organized into five broad regions: Northeast (CT, DE, MA, MD, ME, NH, NJ, NY, PA, RI, VT), Southeast (AL, AR, FL, GA, KY, LA, MS, NC, SC, TN, VA, WV), Midwest (IA, IL, IN, KS, MI, MN, MO, ND, NE, OH, SD, WI), Southwest (AZ, OK, NM, TX), and West (AK, CA, CO, HI, ID, MT, NV, OR, UT, WA, WY). Two participants just put “United States” and are not reported for the childhood location column in these tables.

Both headphone checks exhibited several similar demographic patterns in who was excluded. Older participants were more likely to fail each headphone check: participants who failed had a higher mean age than those who passed. Women, nonbinary people, and participants who declined to answer the question about gender were more likely to fail the headphone checks, with a particularly large difference for the Huggins check. People who had entered college or graduate/professional school were more likely to fail the headphone checks than participants whose highest level of education was high school. Participants from the west and northeast were most likely to pass the headphone checks, and participants from the southeast (and, for the Huggins check, the midwest) were least likely to pass.

Some of these results may reflect inherent characteristics of the participants, such as hearing loss among older participants. Other results may reflect choices about device. For example, women were more likely to complete the task on a phone (25%) than men (10%), and people who had entered a graduate or professional school were more likely to listen to the stimuli on computer speakers (47%) than people whose highest level of education was high school (12%).
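The failure rates behind the patterns in Tables 5 and 6 can be computed from the pass/fail counts. This sketch re-encodes both tables; Table 6 lists no nonbinary/no-response participants among those who passed the dichotic loudness check, which is treated here as 0. Participants with an unreported childhood location are omitted from the region counts, as in the tables.

```python
# (fail, pass) counts from Table 5 (Huggins) and Table 6 (dichotic loudness).
HUGGINS = {
    "gender": {"f": (50, 25), "m": (17, 23), "nb/no response": (4, 1)},
    "education": {"high school": (12, 13), "undergrad": (46, 32),
                  "grad/professional": (13, 4)},
    "region": {"midwest": (22, 9), "northeast": (9, 15), "southeast": (23, 11),
               "southwest": (7, 6), "west": (9, 7)},
}
LOUDNESS = {
    "gender": {"f": (40, 35), "m": (20, 20), "nb/no response": (5, 0)},
    "education": {"high school": (13, 12), "undergrad": (41, 37),
                  "grad/professional": (11, 6)},
    "region": {"midwest": (16, 15), "northeast": (13, 11), "southeast": (22, 12),
               "southwest": (7, 6), "west": (6, 10)},
}


def failure_rates(table):
    """Proportion failing, per level of each demographic factor."""
    return {
        factor: {level: fail / (fail + passed)
                 for level, (fail, passed) in levels.items()}
        for factor, levels in table.items()
    }


for name, table in (("Huggins", HUGGINS), ("Dichotic loudness", LOUDNESS)):
    print(name)
    for factor, rates in failure_rates(table).items():
        summary = ", ".join(f"{level} {rate:.0%}" for level, rate in rates.items())
        print(f"  {factor}: {summary}")
```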

The following sections present the results for the phonological/phonetic effects being examined. Statistical results are from mixed effects models, calculated with the lme4 package in R (Bates, Mächler, Bolker, & Walker, 2015), with p-values calculated by the lmerTest package (Kuznetsova, Bruun Brockhoff, & Haubo Bojesen Christensen, 2015).

3.3. Task 1: F0 and onset stop laryngeal contrasts

Task 1 tests the effect of F0 on perception of onset stop voicing. Based on previous work (e.g., Haggard et al., 1970), it is expected that listeners will be more likely to identify an onset stop as voiceless and aspirated when the following F0 is higher.

Table 7 presents the summary of a mixed effects logistic regression model for responses of the voiced option (e.g., best rather than pest) for the data subsetted to only include participants with at least 85% accuracy identifying unambiguous consonants. The fixed effects were Pitch (High, Low), Huggins result (Fail, Pass), Dichotic Loudness result (Fail, Pass), the interaction between Pitch and Huggins, and the interaction between Pitch and Loudness. There was a random intercept for participant and for word pair. Random slopes were not included because they were highly correlated with the corresponding intercepts and prevented the model from converging.
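For reference, the Task 1 model can be written out as follows. The notation is introduced here for illustration: $\mathrm{PitchLow}$, $\mathrm{HugginsPass}$, and $\mathrm{LoudnessPass}$ are assumed to be 0/1 indicators for the non-reference levels listed in Table 7 (treatment coding), $i$ indexes participants, $j$ trials, and $k(j)$ the word pair of trial $j$. In lme4 syntax this corresponds roughly to a formula such as `voiced ~ Pitch * Huggins + Pitch * Loudness + (1 | participant) + (1 | word_pair)` with `family = binomial`, where the variable names are hypothetical.

$$
\begin{aligned}
\operatorname{logit} \Pr(\mathrm{voiced}_{ij} = 1) ={}& \beta_0 + \beta_1\,\mathrm{PitchLow}_{ij} + \beta_2\,\mathrm{HugginsPass}_i + \beta_3\,\mathrm{LoudnessPass}_i \\
&+ \beta_4\,\mathrm{PitchLow}_{ij}\,\mathrm{HugginsPass}_i + \beta_5\,\mathrm{PitchLow}_{ij}\,\mathrm{LoudnessPass}_i \\
&+ u_i + w_{k(j)}, \qquad u_i \sim \mathcal{N}(0, \sigma_u^2), \quad w_k \sim \mathcal{N}(0, \sigma_w^2)
\end{aligned}
$$

Under this coding, the Pitch Low coefficient is $\beta_1$, the pitch effect for listeners who failed both checks, and the pitch effect for listeners who passed both is $\beta_1 + \beta_4 + \beta_5$.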

Table 7. Regression model for voiced responses, Task 1. Reference levels: Pitch = High, Huggins = Fail, Loudness = Fail.

| | β | SE | z value | p value |
|---|---|---|---|---|
| (Intercept) | –0.508 | 0.513 | –0.989 | 0.322 |
| Pitch Low | 0.593 | 0.194 | 3.06 | 0.00225 |
| Huggins Pass | –0.343 | 0.356 | –0.964 | 0.335 |
| Loudness Pass | –0.0877 | 0.353 | –0.249 | 0.804 |
| Pitch Low * Huggins Pass | 0.525 | 0.326 | 1.61 | 0.107 |
| Pitch Low * Loudness Pass | –0.471 | 0.323 | –1.46 | 0.145 |

The expected effect was observed regardless of participants’ performance on the headphone checks; listeners were more likely to perceive an onset stop as voiced (unaspirated) when the following vowel had a lower F0. Note that because of the existence of the interaction terms, the main effect of Pitch is providing the estimate for listeners who failed both headphone checks (even though the interactions are not significant).
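To make the role of the interaction terms concrete, the sketch below adds up the Table 7 point estimates to get the model-implied pitch effect for each combination of headphone check results, along with the corresponding odds ratios. These are point estimates only (no standard errors), and since neither interaction was significant, the differences between rows should not be over-interpreted. The dictionary keys are hypothetical labels.

```python
import math

# Point estimates from Table 7 (log-odds of a "voiced" response).
B = {
    "pitch_low": 0.593,
    "pitch_low:huggins_pass": 0.525,
    "pitch_low:loudness_pass": -0.471,
}


def pitch_effect(huggins_pass, loudness_pass):
    """Log-odds effect of Low vs. High pitch for one combination of check results."""
    effect = B["pitch_low"]
    if huggins_pass:
        effect += B["pitch_low:huggins_pass"]
    if loudness_pass:
        effect += B["pitch_low:loudness_pass"]
    return effect


for hug in (False, True):
    for loud in (False, True):
        b = pitch_effect(hug, loud)
        print(f"Huggins pass={hug!s:5} Loudness pass={loud!s:5} "
              f"log-odds={b:.3f} odds ratio={math.exp(b):.2f}")
```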

There were no significant effects of either headphone check, nor significant interactions between the headphone checks and the effect of pitch.

These results suggest that headphone checks are not providing any benefit beyond the filtering that is already accomplished by excluding participants with below 85% accuracy identifying clear items.

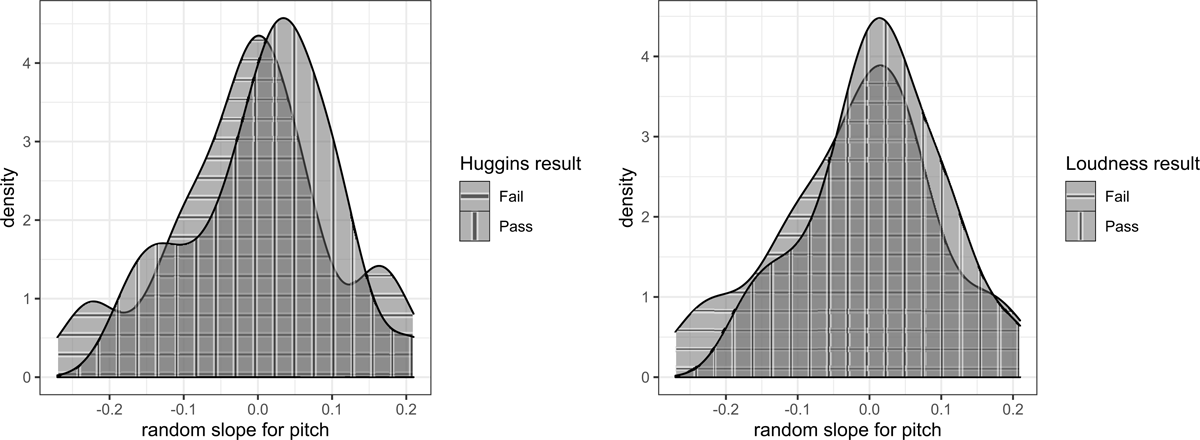

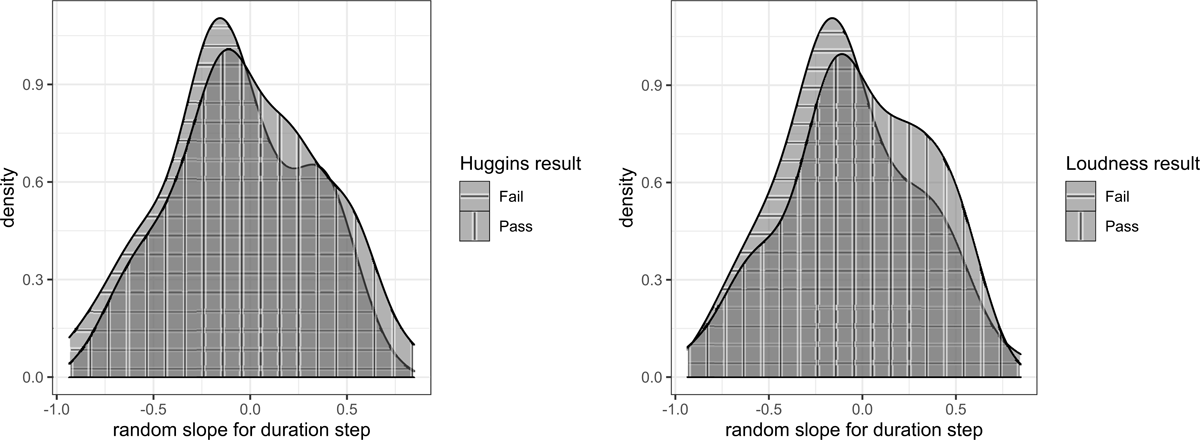

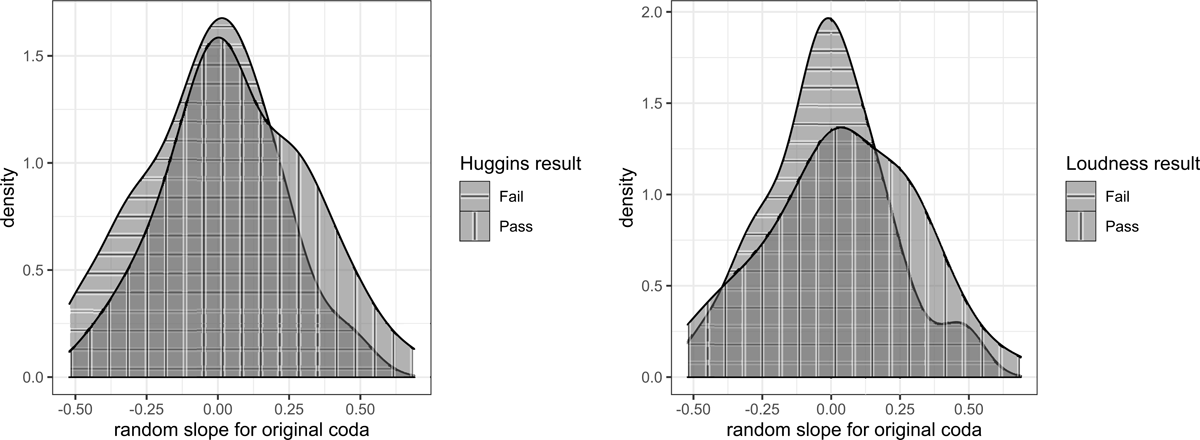

Figure 2 shows random slopes for pitch by participant, in order to visualize potential effects of the headphone checks and evaluate whether the headphone checks decreased variation across participants. These slopes come from a model with random slopes by participant and no random intercept by word pair. This model excluded the headphone checks as factors, both because a model including these factors along with random slopes would not converge and so that any effects of the headphone checks would be reflected in the by-participant slopes rather than captured by fixed effects. While the effect of pitch was slightly larger for participants who passed the headphone checks, there was little difference in the degree of variation across participants. Variance tests showed no evidence for a difference based on either the dichotic loudness test (F = 0.692, p = 0.181) or the Huggins test (F = 0.735, p = 0.271).
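The text does not specify which variance test was used; the reported F statistics are consistent with a classical F-test for equality of two variances, such as R's `var.test`. The sketch below implements that test in Python under this assumption; the slope values are made up for illustration, and which group forms the numerator is not stated in the text.

```python
import numpy as np
from scipy import stats


def variance_ratio_test(x, y):
    """Two-sided F-test of equal variances for two independent samples.

    Uses the same statistic as R's var.test(): F = var(x) / var(y),
    with len(x) - 1 and len(y) - 1 degrees of freedom.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    f_stat = np.var(x, ddof=1) / np.var(y, ddof=1)
    cdf = stats.f.cdf(f_stat, len(x) - 1, len(y) - 1)
    p_value = 2 * min(cdf, 1 - cdf)
    return float(f_stat), float(p_value)


# Hypothetical per-participant slopes, split by headphone check result.
slopes_pass = [0.9, 1.4, 0.7, 1.1, 1.3, 0.8]
slopes_fail = [0.2, 1.6, 0.5, 1.9, 0.1, 1.2, 0.9]
f_stat, p = variance_ratio_test(slopes_pass, slopes_fail)
print(f"F = {f_stat:.3f}, p = {p:.3f}")
```

Adding the fixed effect to every participant's random deviation shifts all slopes by a constant, so the test gives the same result whether it is run on deviations or on full per-participant slopes.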

Since one of the main goals in collecting usable data is to exclude participants who are not paying attention to the task, we might also examine the effect of participants’ approval rate on Prolific. Additionally, if phones are predictive of low attention or poor audio, instructing listeners that the task should only be done on a computer or tablet should increase the size of the effect of pitch.

Table 8 presents the summary of a mixed effects logistic regression model for responses of the voiced option (e.g., best rather than pest) for the full data, with no subsetting based on accuracy for clear items. Using data already subsetted based on accuracy might obscure the effects of these Prolific settings in excluding participants who are inattentive or unable to hear the stimuli. This model is run separately from the preceding model both in order to include the full data and also because there is substantial collinearity between the headphone checks and device restriction instructions as predictors, so a model including both does not converge. The fixed effects were Pitch (High, Low), Device Restrictions (None, NoPhone), Approval Rate (90, 95), the interaction between Pitch and Device Restrictions, and the interaction between Pitch and Approval Rate. There was a random intercept for participant and for word pair.

Table 8. Regression model for voiced responses, Task 1. Reference levels: Pitch = High, DeviceRestrictions = NoPhone, ApprovalRate = 95.

| | β | SE | z value | p value |
|---|---|---|---|---|
| (Intercept) | –0.286 | 0.511 | –0.561 | 0.575 |
| Pitch Low | 0.583 | 0.253 | 2.3 | 0.0213 |
| DeviceRestrictions None | –0.319 | 0.328 | –0.972 | 0.331 |
| ApprovalRate 90 | –0.211 | 0.331 | –0.64 | 0.522 |
| Pitch Low * DeviceRestrictions None | –0.0989 | 0.309 | –0.32 | 0.749 |
| Pitch Low * ApprovalRate 90 | –0.052 | 0.311 | –0.167 | 0.867 |

There were no significant effects of either participants’ approval rate on Prolific or the instructions about which devices should be used for the task.

3.4. Task 2: Vowel height category boundaries

Task 2 tests the effect of exposure to shifted F1 on subsequent vowel category boundaries. Based on previous work (e.g., Ladefoged & Broadbent, 1957), listeners should be more likely to identify ambiguous items on /ɛ-i/ continua as being /ɛ/ after exposure to mid vowels with a lowered F1 than after exposure to mid vowels with a raised F1. The F1 of the test item should also predict responses; listeners should be more likely to perceive a vowel as /ɛ/ if it has a higher F1.

Table 9 presents the summary of a mixed effects logistic regression model for responses of the lower vowel (e.g., pet rather than pit) for the data subsetted to only include participants with at least 85% accuracy identifying unambiguous consonants. The fixed effects were F1 step, Exposure Condition (Raised F1, Lowered F1), Huggins result (Fail, Pass), Dichotic Loudness result (Fail, Pass), the interaction between F1 step and Huggins, the interaction between Exposure and Huggins, the interaction between F1 step and Loudness, and the interaction between Exposure and Loudness. There was a random intercept for participant and for word pair. Random slopes were not included because they were highly correlated with the corresponding intercepts and prevented the model from converging.

Table 9. Regression model for responses of the lower vowel, Task 2. Reference levels: Exposure Condition = Lowered F1, Huggins = Fail, Loudness = Fail.

| | β | SE | z value | p value |
|---|---|---|---|---|
| (Intercept) | –5.06 | 0.416 | –12.2 | <0.0001 |
| Exposure RaisedF1 | –1.68 | 0.306 | –5.5 | <0.0001 |
| F1step | 1.79 | 0.104 | 17.3 | <0.0001 |
| Huggins Pass | –1.09 | 0.599 | –1.82 | 0.0683 |
| Loudness Pass | 0.217 | 0.576 | 0.377 | 0.706 |
| Exposure RaisedF1 * Huggins Pass | 0.0804 | 0.518 | 0.155 | 0.877 |
| F1step * Huggins Pass | 0.265 | 0.176 | 1.51 | 0.132 |
| Exposure RaisedF1 * Loudness Pass | 0.0748 | 0.512 | 0.146 | 0.884 |
| F1step * Loudness Pass | –0.0807 | 0.17 | –0.475 | 0.635 |

The expected effects were observed regardless of participants’ performance on the headphone checks. A higher F1 made listeners more likely to identify a vowel as being mid rather than high. Prior exposure to mid vowels with a raised F1 (versus lowered F1) resulted in fewer identifications of the testing stimuli as having mid vowels: The category boundary had a higher F1 in the raised F1 exposure condition than in the lowered F1 exposure condition. As before, note that the main effects are for participants who failed both headphone checks, given the presence of the interaction terms.
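The boundary shift can be read off the Table 9 point estimates by solving for the F1 step at which the predicted probability of /ɛ/ is 0.5. This sketch assumes F1 step enters the model as the numeric value used in the analysis (the coding is not stated here), sets the random effects to zero, and applies to listeners who failed both checks; the constant names are hypothetical.

```python
# Point estimates from Table 9 (log-odds of a lower-vowel /ɛ/ response).
INTERCEPT = -5.06          # Lowered-F1 exposure, failed both checks
EXPOSURE_RAISED_F1 = -1.68
F1_STEP = 1.79


def boundary_step(raised_f1_exposure):
    """F1 step at which the predicted probability of /ɛ/ is 0.5.

    Solves INTERCEPT + exposure term + F1_STEP * step = 0.
    """
    offset = INTERCEPT + (EXPOSURE_RAISED_F1 if raised_f1_exposure else 0.0)
    return -offset / F1_STEP


lowered = boundary_step(raised_f1_exposure=False)
raised = boundary_step(raised_f1_exposure=True)
print(f"lowered F1 exposure: {lowered:.2f}; raised F1 exposure: {raised:.2f}")
```

The boundary moves up by 1.68 / 1.79, or roughly 0.94 of an F1 step, after exposure to raised-F1 mid vowels.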

There was a marginal main effect of the Huggins check: listeners who passed it were more likely to identify vowels as high (i.e., /i/ rather than /ɛ/). This might suggest that the Huggins check selects a population with a different category boundary. Because vowel category boundaries can differ across dialects, the regional differences in headphone check results described above offer one possible explanation: people who passed the Huggins check were more likely to be from the west or northeast, and less likely to be from the southeast or midwest.

There were no significant interactions between either headphone check and either of the experimental variables.

These results suggest that headphone checks are not providing any benefit beyond the filtering that is already accomplished by excluding participants with below 85% accuracy identifying clear items.

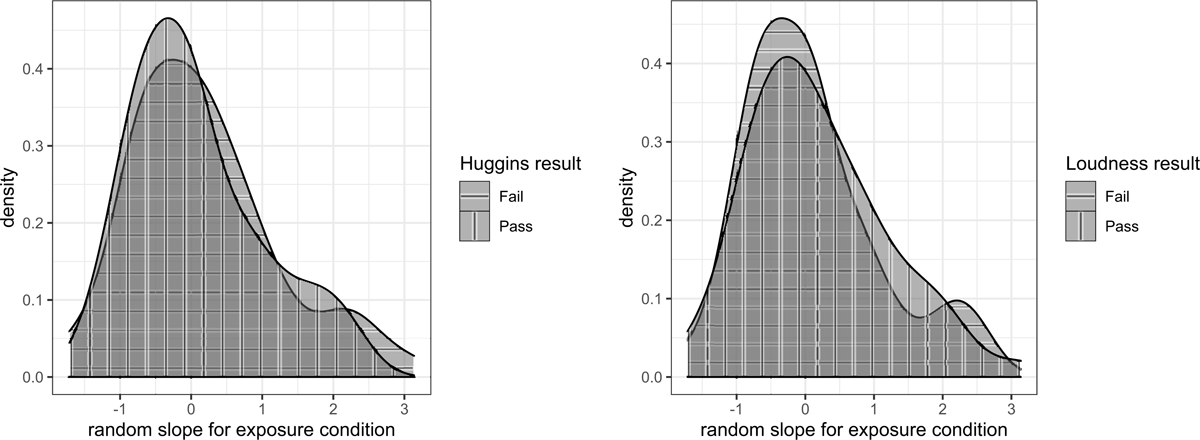

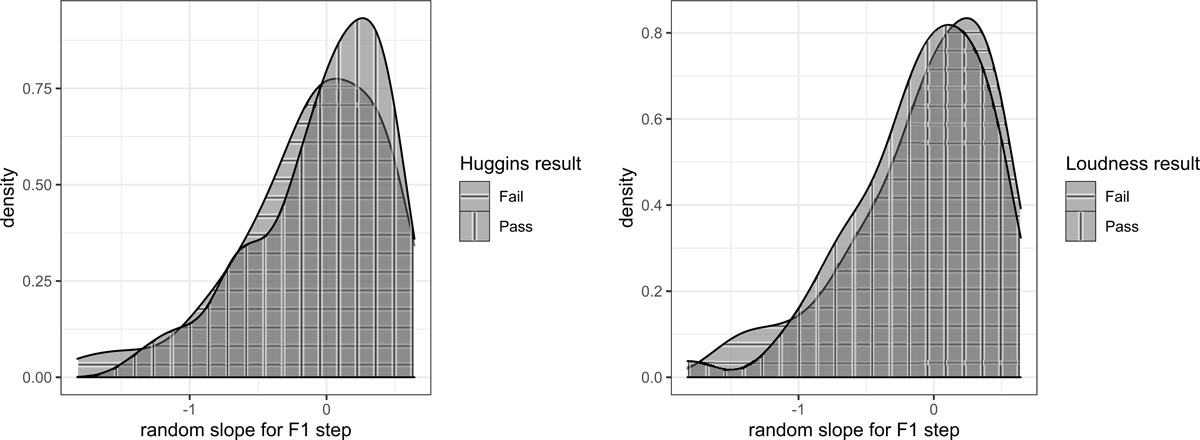

Figures 3 and 4 show random slopes by participant for the exposure condition and F1 step, respectively. These figures visualize the potential effects of headphone checks. The slopes come from a model with random slopes by participant and no random intercept by word pair, which excluded headphone checks as factors. Both factors exhibited little difference in the degree of variation across participants based on headphone check results. Variance tests confirmed the visual patterns; there was no evidence for a difference in exposure condition variance based on dichotic loudness (F = 1.05, p = 0.851) or Huggins (F = 0.775, p = 0.363), nor a difference in F1 step variance based on dichotic loudness (F = 0.89, p = 0.671) or Huggins (F = 0.759, p = 0.325).

The next set of factors to be examined are participants’ approval rate on Prolific and instructions about which devices the task could be done on. Table 10 presents the summary of a mixed effects logistic regression model for responses of the lower vowel (e.g., pet rather than pit) for the full data. The fixed effects were F1 step, Exposure (Raised F1, Lowered F1), Device Restrictions (None, NoPhone), Approval Rate (90, 95), the interaction between F1 step and Device Restrictions, the interaction between Exposure and Device Restrictions, the interaction between F1 step and Approval Rate, and the interaction between Exposure and Approval Rate. There was a random intercept for participant and for word pair.

Table 10. Regression model for responses of the lower vowel, Task 2. Reference levels: Exposure Condition = Lowered F1, DeviceRestrictions = NoPhone, ApprovalRate = 95.

| | β | SE | z value | p value |
|---|---|---|---|---|
| (Intercept) | –5.84 | 0.56 | –10.4 | <0.0001 |
| Exposure RaisedF1 | –2.04 | 0.414 | –4.92 | <0.0001 |
| F1step | 1.94 | 0.156 | 12.5 | <0.0001 |
| DeviceRestrictions None | 1.67 | 0.599 | 2.78 | 0.00534 |
| ApprovalRate 90 | –0.791 | 0.53 | –1.49 | 0.136 |
| Exposure RaisedF1 * DeviceRestrictions None | 0.665 | 0.496 | 1.34 | 0.18 |
| F1step * DeviceRestrictions None | –0.479 | 0.174 | –2.75 | 0.00587 |
| Exposure RaisedF1 * ApprovalRate 90 | 0.13 | 0.48 | 0.271 | 0.786 |
| F1step * ApprovalRate 90 | 0.309 | 0.152 | 2.03 | 0.0422 |

There were more responses of /ɛ/ among people with no instructions about device restrictions. This could potentially be an effect of the frequency response of phones. Formant measurements are biased towards harmonics (Chen, Whalen, & Shadle, 2019), and patterns of intensity across frequencies might similarly affect perception; for example, Lotto, Holt, and Kluender (1997) found that increasing the intensity of lower frequencies increases identifications of vowels as tense. Alternatively, this could be an effect of the dialects of the participants who were using phones: only 8% of northeastern participants and 15% of southwestern participants reported using phones, compared to 29% of midwestern, 24% of southeastern, and 25% of western participants.

There was a smaller effect of F1 step among participants with no device restriction instructions: F1 has less of an impact on what vowel they identify the stimulus as having. This effect is consistent with participants on their phones being less able to hear the stimuli or paying less attention.

However, there was also a larger effect of F1 step among participants recruited with the lower approval-rate threshold (90%). The cause of this small but significant effect is not clear. It might be due to the incomplete balancing of conditions, as only participants in the condition with no device restrictions were recruited with the 90% threshold. However, it is worth considering that the approval-rate threshold might capture something about how participants approach tasks that is not simply low attention: they might be less attentive to the characteristics that lead to rejections, but more attentive to some aspects of the task.
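The incomplete balancing matters for how the Table 10 coefficients combine. The sketch below computes the model-implied F1-step slope for the three recruitment conditions that were actually run. The fourth combination (90% threshold with the no-phone instruction) was never recruited, so any estimate for it would be an extrapolation from the additive model. Names are hypothetical; values are point estimates only.

```python
# Point estimates from Table 10 (log-odds of a lower-vowel response per F1 step).
F1_STEP = 1.94                        # reference: no-phone instruction, 95% threshold
F1_X_NO_DEVICE_INSTRUCTIONS = -0.479
F1_X_APPROVAL_90 = 0.309

# The three recruitment conditions that were actually run (Section 3.1).
CONDITIONS = {
    "95% threshold, no-phone instruction": (False, False),
    "95% threshold, no device instruction": (True, False),
    "90% threshold, no device instruction": (True, True),
}


def f1_slope(no_device_instructions, approval_90):
    """Model-implied F1-step slope for one recruitment condition."""
    slope = F1_STEP
    if no_device_instructions:
        slope += F1_X_NO_DEVICE_INSTRUCTIONS
    if approval_90:
        slope += F1_X_APPROVAL_90
    return slope


for label, flags in CONDITIONS.items():
    print(f"{label}: {f1_slope(*flags):.3f}")
```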

3.5. Task 3: Spectral tilt and perceived vowel duration

Task 3 tests the effect of spectral tilt on perceived vowel duration. Based on previous work (Sanker, 2020), listeners should be more likely to identify vowels as long when they have a lower spectral tilt. Actual duration should also be a predictor of perceived duration, and listeners should compensate for the voicing of the coda, more often identifying vowels as long when they are presented with a voiceless coda.

Table 11 presents the summary of a mixed effects logistic regression model for ‘long’ responses for the data subsetted to only include participants with at least 85% accuracy identifying unambiguous consonants. The fixed effects were Duration Step, Original Coda (p, b), Spliced Coda (p, b), Spectral Tilt (high, low), Huggins result (Fail, Pass), Dichotic Loudness result (Fail, Pass), the interactions between Huggins and each of Duration Step, Original Coda, Spliced Coda, and Spectral Tilt, and the interactions between Loudness and each of the same four factors. There was a random intercept for participant, and random slopes by participant for Duration Step, Original Coda, Spliced Coda, and Spectral Tilt. Note that unlike in the previous two tasks, including these random slopes was possible, because the correlations between the intercept and the random slopes were low.

Table 11. Regression model for ‘long’ responses, Task 3. Reference levels: OrigCoda = b, SplicedCoda = b, Tilt = High, Huggins = Fail, Loudness = Fail.

| | β | SE | z value | p value |
|---|---|---|---|---|
| (Intercept) | –2.8 | 0.325 | –8.63 | <0.0001 |
| DurationStep | 0.894 | 0.0942 | 9.49 | <0.0001 |
| OrigCoda p | –0.448 | 0.138 | –3.25 | 0.00114 |
| SplicedCoda p | 0.603 | 0.164 | 3.68 | 0.000236 |
| Tilt Low | 0.154 | 0.246 | 0.627 | 0.531 |
| Huggins Pass | –1.02 | 0.542 | –1.89 | 0.0591 |
| Loudness Pass | 0.793 | 0.535 | 1.48 | 0.138 |
| DurationStep * Huggins Pass | 0.222 | 0.159 | 1.39 | 0.164 |
| OrigCoda p * Huggins Pass | 0.233 | 0.233 | 1.0 | 0.317 |
| SplicedCoda p * Huggins Pass | –0.633 | 0.276 | –2.29 | 0.0219 |
| Tilt Low * Huggins Pass | 1.11 | 0.412 | 2.7 | 0.00695 |
| DurationStep * Loudness Pass | 0.01 | 0.157 | 0.064 | 0.949 |
| OrigCoda p * Loudness Pass | –0.0123 | 0.23 | –0.054 | 0.957 |
| SplicedCoda p * Loudness Pass | –0.513 | 0.273 | –1.88 | 0.0604 |
| Tilt Low * Loudness Pass | –0.256 | 0.408 | –0.627 | 0.531 |

Most of the same effects found by Sanker (2020) were observed regardless of participants’ performance on the headphone checks. Listeners were more likely to identify vowels with longer duration as being long, less likely to identify vowels as long when they had originally been produced with a voiceless coda, and more likely to identify vowels as long when they were presented with a voiceless coda.

As before, note that the main effects are for participants who failed both headphone checks, given the presence of the interaction terms. Among participants who failed both headphone checks, there was not a significant effect of spectral tilt in predicting identifications of the vowel as long. It is perhaps worthwhile to note that in a model that omits headphone checks as factors, listeners were significantly more likely to identify vowels as long if they had lower spectral tilt: β = 0.509, SE = 0.175, z = 2.91, p = 0.00364.

There was a marginally significant main effect of the Huggins check: listeners who passed the Huggins check were less likely to identify vowels as long. There was a nonsignificant trend in the opposite direction for the Dichotic Loudness check, which increased the odds that a vowel would be identified as long. These opposing estimates might result from the moderate correlation between the Huggins and Dichotic Loudness results. If these results are indeed capturing something real, it is unclear what is driving them.

The interaction between Spliced Coda and Huggins is significant: the effect of the spliced coda was eliminated among participants who passed the Huggins check. This might indicate that the Huggins check is related to how participants approach the task; if listeners categorize vowel duration before hearing the coda, they will not exhibit an effect of coda characteristics. Consistent with this interpretation, participants who passed the Huggins check spent less time on the task: the median time spent on the task was almost 20% longer for participants who failed the Huggins check than for participants who passed.

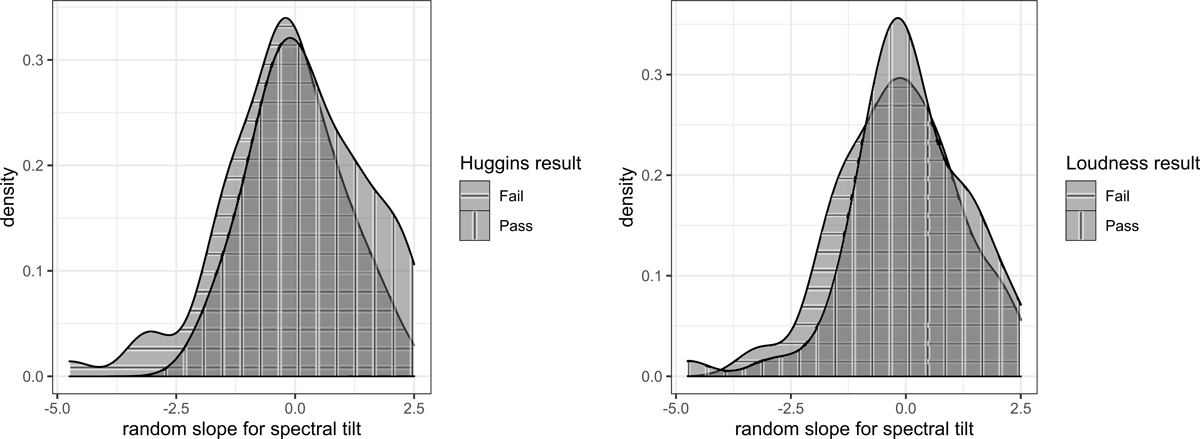

The interaction between Spectral Tilt and Huggins is significant; listeners who passed the Huggins check were more likely to identify vowels with lower spectral tilt as being longer (the effect predicted by previous work).

The interaction between Spliced Coda and Dichotic Loudness is marginally significant; the effect of the spliced coda was eliminated among participants who passed the dichotic loudness check. Similar to the interaction between Spliced Coda and Huggins, this likely reflects time spent on the task: Participants who failed the dichotic loudness check spent 9% longer on the task than participants who passed.
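The claim that the spliced-coda effect was "eliminated" follows from adding the Table 11 interaction estimates to the main effect. The sketch below does this for the spliced coda and spectral tilt terms. These are point estimates only; standard errors for the combined effects would require the model's coefficient covariance matrix, and the both-pass combination adds two interaction estimates, so it is the least certain. The names are hypothetical.

```python
# Point estimates from Table 11 (log-odds of a "long" response).
MAIN = {"spliced_coda_p": 0.603, "tilt_low": 0.154}
X_HUGGINS_PASS = {"spliced_coda_p": -0.633, "tilt_low": 1.11}
X_LOUDNESS_PASS = {"spliced_coda_p": -0.513, "tilt_low": -0.256}


def simple_effect(term, huggins_pass, loudness_pass):
    """Model-implied effect of `term` for one combination of check results."""
    effect = MAIN[term]
    if huggins_pass:
        effect += X_HUGGINS_PASS[term]
    if loudness_pass:
        effect += X_LOUDNESS_PASS[term]
    return effect


for term in MAIN:
    for hug in (False, True):
        for loud in (False, True):
            print(f"{term:15s} Huggins pass={hug!s:5} Loudness pass={loud!s:5} "
                  f"{simple_effect(term, hug, loud):+.3f}")
```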

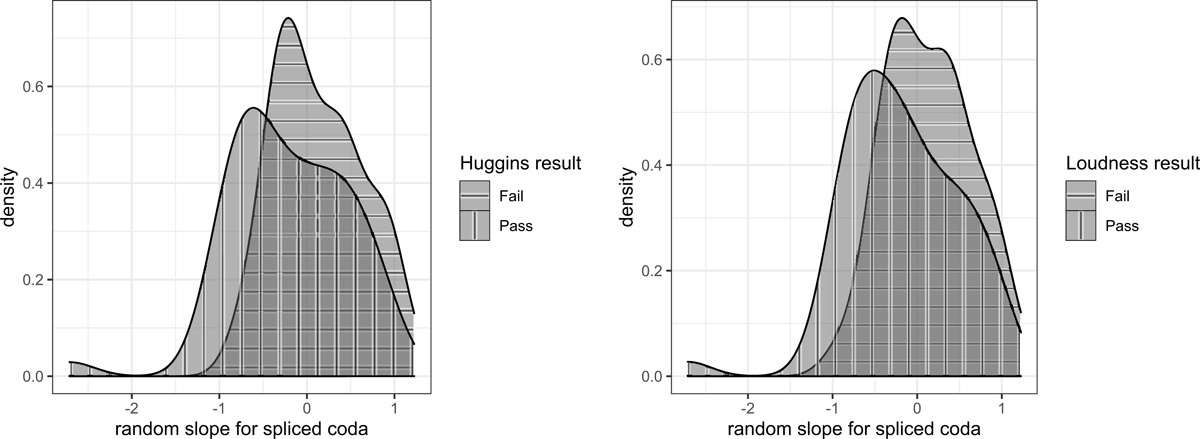

Figures 5, 6, 7, and 8 show random slopes by participant for duration step, original coda, spliced coda, and spectral tilt, respectively. These figures visualize the potential effects of headphone checks. These slopes come from a model that excluded headphone checks as factors. While the center of the distribution differs based on the headphone checks for the effects of spliced coda and spectral tilt (consistent with the model results), all of the factors exhibited relatively little difference in the degree of variation across participants based on headphone check results. Variance tests largely confirmed the visual patterns: there was no evidence for a difference in duration step variance based on dichotic loudness (F = 1.04, p = 0.89) or Huggins (F = 1.05, p = 0.86), in original coda variance based on dichotic loudness (F = 1.53, p = 0.118) or Huggins (F = 1.12, p = 0.675), or in spectral tilt variance based on dichotic loudness (F = 1.09, p = 0.739) or Huggins (F = 0.779, p = 0.375). However, there was evidence for a small difference in spliced coda variance based on dichotic loudness (F = 1.82, p = 0.0286) and Huggins (F = 1.86, p = 0.0218); in both cases, participants who passed the headphone check had more variance.

The next factors examined are participants’ approval rate on Prolific and the instructions given about which devices could be used for the task. Table 12 presents the summary of a mixed effects logistic regression model for ‘long’ responses for the full data. The fixed effects were Duration Step, Original Coda (p, b), Spliced Coda (p, b), Spectral Tilt (high, low), Device Restrictions (None, NoPhone), and Approval Rate (90, 95), along with the interactions of Approval Rate with each of Duration Step, Original Coda, Spliced Coda, and Spectral Tilt, and the interactions of Device Restrictions with each of those same four factors. There was a random intercept for participant and by-participant random slopes for Duration Step, Original Coda, Spliced Coda, and Spectral Tilt.
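Written out as an equation, the model specification above amounts to the following sketch, where $j$ indexes participants and $i$ trials. The notation is introduced here for illustration: $k$ ranges over Duration Step, Original Coda, Spliced Coda, and Spectral Tilt; $x_{kij}$ is Duration Step as a numeric predictor, or a 0/1 indicator for the non-reference level of the other three factors; $D_j = 1$ for participants with no device restrictions and $A_j = 1$ for the 90% approval-rate threshold (reference levels as listed for Table 12). The text does not state whether the random intercept and slopes were allowed to correlate, so their covariance is left unspecified here.

$$
\operatorname{logit} P(\text{long}_{ij}) = \beta_0 + u_{0j} + \sum_{k} \left( \beta_k + \delta_k D_j + \eta_k A_j + u_{kj} \right) x_{kij} + \gamma_D D_j + \gamma_A A_j
$$

Each of $\beta_0$, $\beta_k$, $\delta_k$, $\eta_k$, $\gamma_D$, and $\gamma_A$ corresponds to one row of Table 12, for 15 fixed-effect terms including the intercept.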

Table 12. Regression model for ‘long’ responses, Task 3. Reference levels: OrigCoda = b, SplicedCoda = b, Tilt = High, DeviceRestrictions = NoPhone, ApprovalRate = 95.

| Term | β | SE | z value | p value |
|---|---|---|---|---|
| (Intercept) | –3.2 | 0.455 | –7.04 | <0.0001 |
| DurationStep | 0.957 | 0.132 | 7.25 | <0.0001 |
| OrigCoda p | –0.29 | 0.183 | –1.59 | 0.113 |
| SplicedCoda p | –0.467 | 0.227 | –2.05 | 0.04 |
| Tilt Low | 0.924 | 0.328 | 2.82 | 0.00482 |
| DeviceRestrictions None | 0.863 | 0.543 | 1.59 | 0.112 |
| ApprovalRate 90 | –0.753 | 0.529 | –1.42 | 0.155 |
| DurationStep × DeviceRestrictions None | –0.141 | 0.158 | –0.893 | 0.372 |
| OrigCoda p × DeviceRestrictions None | –0.114 | 0.219 | –0.522 | 0.602 |
| SplicedCoda p × DeviceRestrictions None | 0.643 | 0.274 | 2.35 | 0.0188 |
| Tilt Low × DeviceRestrictions None | –0.545 | 0.397 | –1.37 | 0.17 |
| DurationStep × ApprovalRate 90 | 0.304 | 0.155 | 1.96 | 0.0504 |
| OrigCoda p × ApprovalRate 90 | 0.103 | 0.209 | 0.492 | 0.623 |
| SplicedCoda p × ApprovalRate 90 | 0.198 | 0.265 | 0.748 | 0.455 |
| Tilt Low × ApprovalRate 90 | –0.091 | 0.389 | –0.234 | 0.815 |

The interaction between Duration Step and Approval Rate was marginally significant. Participants recruited with the lower approval-rate threshold showed a slightly larger effect of duration step: Actual duration was a stronger predictor of whether the vowel was identified as long.
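Because Table 12 uses treatment coding, the size of this difference can be read off directly: the duration-step slope for the 95% group is the main effect, and the slope for the 90% group adds the interaction term. The worked arithmetic below assumes the reference level of Device Restrictions (NoPhone) and a participant random slope of zero, and converts each log-odds slope to an odds ratio per duration step.

$$
\begin{aligned}
\text{95\% threshold:}\quad & \beta_{\text{Step}} = 0.957, && e^{0.957} \approx 2.60 \\
\text{90\% threshold:}\quad & \beta_{\text{Step}} + \beta_{\text{Step} \times 90} = 0.957 + 0.304 = 1.261, && e^{1.261} \approx 3.53
\end{aligned}
$$

That is, each additional duration step multiplies the odds of a ‘long’ response by about 2.6 for participants recruited with the 95% threshold and by about 3.5 for those recruited with the 90% threshold.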

The main effect of Spliced Coda (i.e., the effect for participants in the NoPhone condition with the 95% approval rate threshold) is the opposite of what was predicted and of what was observed in the previous model: participants were less likely to identify vowels as long when they were presented with a voiceless coda, though the effect is relatively small. This might suggest a different task strategy, in which listeners categorize vowel duration in a way that aligns with what is expected based on the coda, rather than compensating for what is expected based on the coda. The two effects of spliced coda voicing might reflect different stages of processing; listeners in the NoPhone condition completed the task more quickly than listeners with no device restrictions.

The interaction between Spliced Coda and Device Restrictions was significant: the effect of the spliced coda was eliminated when participants were given no instructions about which devices could be used.
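Under the treatment coding of Table 12, the spliced coda effect in each device condition is the main effect plus, for the no-restrictions condition, the interaction term. The sketch below does that arithmetic on the log-odds and odds-ratio scales. It assumes the reference level of Approval Rate (95) and participant random effects of zero; the constant and function names are illustrative, not taken from the study's analysis code.

```python
import math

# Log-odds coefficients from Table 12 (reference levels: SplicedCoda = b,
# DeviceRestrictions = NoPhone, ApprovalRate = 95).
SPLICED_CODA_P = -0.467
SPLICED_CODA_P_X_DEVICE_NONE = 0.643


def spliced_coda_effect(device_restrictions: str) -> float:
    """Log-odds change in P('long') for a voiceless (p) vs. voiced (b) spliced coda.

    Holds at ApprovalRate = 95 with participant random effects set to zero.
    """
    if device_restrictions == "NoPhone":
        return SPLICED_CODA_P
    if device_restrictions == "None":
        # Treatment coding: add the interaction to the main effect.
        return SPLICED_CODA_P + SPLICED_CODA_P_X_DEVICE_NONE
    raise ValueError(f"unknown device condition: {device_restrictions!r}")


for condition in ("NoPhone", "None"):
    effect = spliced_coda_effect(condition)
    print(f"{condition}: log-odds {effect:+.3f}, odds ratio {math.exp(effect):.2f}")
```

The no-restrictions effect comes out close to zero and slightly positive (about +0.18 on the log-odds scale), consistent with the description above. Whether it differs reliably from zero would require the covariance between the two coefficients, which Table 12 does not report.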

4. Discussion

The results help demonstrate how headphone checks function, what types of studies they are likely to affect, and why those effects arise. While most of the phonological and phonetic patterns examined here do not exhibit clear effects of headphone checks, some do. In addition to effects that can be explained by acoustic characteristics of the audio produced by headphones, some effects seem to be due to demographic differences between participants who pass and fail headphone checks. Both types of effects have implications for how experimental design might affect the interpretability and generalizability of results, depending on which acoustic characteristics, task strategies, or demographic patterns the examined phenomena are sensitive to.

4.1. Interpretation of results

The results of the perception tasks in this study show limited benefits of headphone checks. For a range of factors, there was no evidence that listeners who passed the headphone checks (the Huggins check, Milne et al., 2021; the dichotic loudness check, Woods et al., 2017) produced different results than listeners who failed them. These factors included F0 as a cue to onset voicing, F1 as a cue to vowel height, the effect of exposure to manipulated F1 on subsequent category boundaries, and actual vowel duration as a predictor of perceived vowel duration. Headphone checks do have some significant or marginal main effects and interactions with phonological factors; however, these effects likely arise in several different ways.

One factor substantially affected by headphone checks was spectral tilt as a predictor of perceived vowel duration in Task 3. Participants who passed the Huggins check showed a larger effect of spectral tilt on perceived vowel duration, replicating the previously observed effect; participants who failed the Huggins check did not exhibit a significant effect. This expected effect depends on the relationships between frequency and perceived loudness and between loudness and perceived duration: perceived intensity increases with frequency, so a sound with lower spectral tilt is likely to be perceived as louder and consequently longer (Sanker, 2020). Intensity at different frequencies can vary substantially across devices, which can obscure effects that depend on spectral tilt. The Huggins check may produce a stronger effect of spectral tilt on perceived vowel duration because it specifically selects for high-quality headphones, which are better at producing low frequencies and thus capture the differences in spectral tilt, whereas devices that attenuate low frequencies reduce the difference between the spectral tilt manipulations. The Huggins check is likely to provide a similar benefit for capturing other effects that depend on intensity relative to frequency.

Not all effects of headphone checks seem to reflect an improvement in capturing expected effects. The effect of spliced coda voicing on perceived vowel duration in Task 3 was significant only among participants who failed the headphone checks and was eliminated among participants who passed one or both checks. By-participant variation was also larger among participants who passed the headphone checks. This might be related to how participants approached the tasks; participants who passed headphone checks completed the study more quickly than participants who failed them. Given their faster responses, the effect of coda voicing may have been absent among these participants because they often responded before hearing the codas. One reason why participants who pass headphone checks might complete the tasks more quickly is that they have more experience with experimental tasks. Participants with more experience in perception experiments might also be more likely to recognize headphone checks, which could make them less likely to misinterpret the instructions and more likely to know what listening setup will allow them to pass. Listeners who have done headphone check tasks before may also be more accurate on them due to practice; Akeroyd, Moore, and Moore (2001) found a significant improvement in identification of melodies produced by Huggins dichotic pitch stimuli from the first block of items to the second. Another possible reason for the speed difference is age: headphone checks reduce the average age of participants, and younger participants completed the task more quickly (r(109) = 0.27, p = 0.00415). However, the difference in task duration based on headphone check results is larger than the relationship between age and task duration alone would predict.
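As a consistency check on the reported age correlation, the usual t statistic for a Pearson correlation can be computed from r and its degrees of freedom. This assumes the standard test with df = n - 2, which would imply n = 111 participants in this analysis; the sample size is inferred from the df rather than stated in the text.

$$
t = r\sqrt{\frac{\mathit{df}}{1 - r^2}} = 0.27\sqrt{\frac{109}{1 - 0.27^2}} \approx 2.93
$$

A t of about 2.93 on 109 degrees of freedom gives a two-sided p of roughly 0.004, in line with the reported p = 0.00415.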

Headphone checks also produce differences in participants’ regional origins, which can affect the results in ways not related to audio clarity. There was a marginal main effect of Huggins on the category boundary between /i/ and /ɛ/ in Task 2; listeners who passed the Huggins check were more likely to identify ambiguous vowels as /i/. Participants from the Midwest and Southeast are more likely to fail the Huggins check, while participants from the Northeast and West have better odds of passing it. Several known vowel shifts might contribute to the Huggins check producing a different vowel category boundary: /i/ is lowered in the Northern California vowel shift (Eckert, 2008) and raised in the Southern vowel shift (Labov, Ash, & Boberg, 2006). A lower prototypical /i/ would result in more stimuli in the mid to high range being identified as /i/, while a higher prototypical /i/ would result in fewer stimuli in this range being identified as /i/.

The effect of headphone checks varies depending on what is being examined, for example perception of vowel quality based on F1 versus perception of vowel duration based on spectral tilt or coda environment. For many perceptual effects examined here, headphone checks have no apparent impact. For other patterns, headphone checks might affect results either because headphone use is directly important for capturing the pattern or because headphone checks select for a population that produces different results because of their dialects or approaches to the task. The impact of headphone checks seems to depend on the nature of the particular factor being examined, rather than on the robustness of the effect. None of the effects examined here are at ceiling, so all of them could in principle be influenced by headphone checks, yet most are not. Small effects will be more susceptible to being obscured by variability, but it is not clear that headphone checks reduce variability across listeners in relevant ways; for none of the factors examined did participants who passed the headphone checks show less variability than participants who failed them.

4.2. Implications

One benefit of the rather limited effects of headphone checks is that results across studies are likely to be comparable, even though some published studies have used headphone checks and others have not. A few studies have compared their results with and without excluding participants who failed the headphone check and report no difference (Ringer et al., 2022; Shen & Wu, 2022). However, headphone checks can influence the results for some phonological and phonetic effects, for several potential reasons.

Which participants pass headphone checks? Part of what headphone checks do is select for headphones, as has been demonstrated previously (Milne et al., 2021; Woods et al., 2017). The data presented here show that there are also several demographic differences between participants who pass and fail headphone checks, including age, gender, education, and geographic region. There is also evidence that headphone checks select for participants who approach the tasks differently, in particular resulting in faster task completion. While these relationships raise some concerns, knowing more about the factors that predict variation in online studies allows us to better control for them.

Participants who pass headphone checks are more likely to be from the Northeast or the West, while participants who fail the headphone checks are more likely to be from the Southeast or the Midwest. This can produce differences in category boundaries or even in which contrasts exist. Online participants come from many different locations; this variability may be desirable in capturing broad patterns among speakers of different dialects of a language, though in other cases it may be desirable to have a more uniform sample or to recruit participants from particular dialect regions. A sample biased towards a particular location might produce different results than a more regionally balanced sample, so regional information can be important in interpreting results and evaluating whether the observed patterns are likely to generalize. Regional information can be collected in post-task demographic surveys, as was done in this study. It is also possible to recruit participants using eligibility filters; Prolific includes options to recruit based on state-level location and on the state where the participant was born.

Participants who pass the headphone checks are more likely to be men than women. This result may be related to device usage, as women were more likely than men to complete the task using a phone. The participants who were nonbinary or did not identify their gender, a small group, were also more likely than men to fail the headphone checks. Differences in participant gender might affect the results of some studies, given gender effects in some linguistic tasks (e.g., Namy, Nygaard, & Sauerteig, 2002).

Participants who pass headphone checks are likely to be younger than participants who fail them. One major source of this effect is likely to be hearing loss among older participants, which makes it more difficult to hear Huggins pitch stimuli (Santurette & Dau, 2007). Older and younger individuals can also differ in phonological patterns based on sound changes in progress (e.g., Harrington, Kleber, & Reubold, 2008), and exhibit differences in linguistic tasks for a range of other reasons (e.g., Scharenborg & Janse, 2013; Shen & Wu, 2022). Given that many in-person studies use university undergraduates as participants (Peterson, 2001), it is possible that younger online participants will produce results more similar to previous in-person results, so it is important to consider how the gold standard for comparison should be determined.